Pressure Project 1: Circles

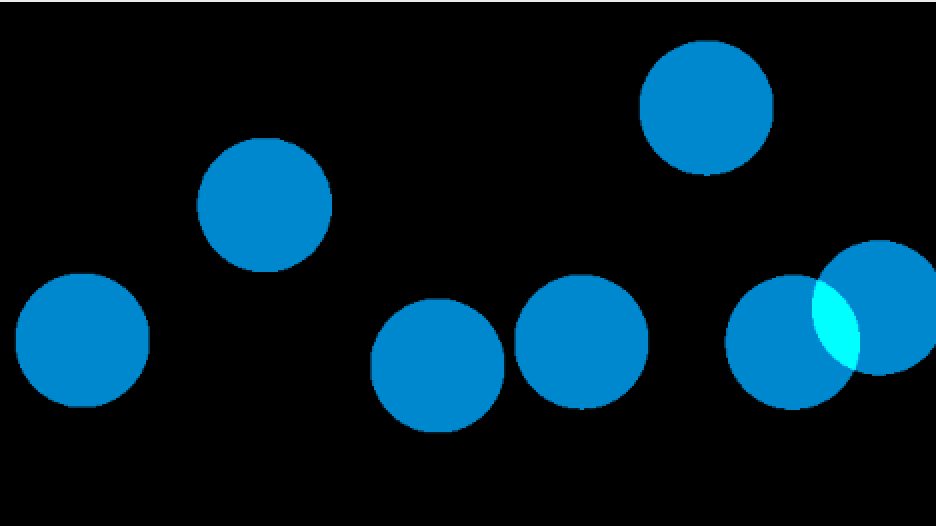

Posted: January 30, 2024 Filed under: Uncategorized

I approached this assignment without a specific goal in mind, focusing on actors that had caught my interest during our initial exposure to the software, such as the Movie Player, Shimmer, and Wave Generator. My aim was to experiment with these actors and explore the various variables I could manipulate. That served as my starting point.

Additionally, I considered actors recommended for experimentation, like the Shape actor and Video In Watcher. Before delving into the patch, I sought visual inspiration by looking at how designers and artists utilized patterns in their work and how they went about animating them. The images I explored influenced my creative process.

After gathering inspiration, I experimented with the Shapes actor and the Wave Generator to create and animate patterns in Isadora. Upon completing my first scene, I searched for a video to use with the Movie Player actor, choosing one supplied by a classmate featuring water and bubbles. To maintain engagement, I aimed to play with different perceptions of circles, moving from repetitive motion to free-flowing, organic visuals, and then to interactive movement.

While interactivity wasn’t a requirement, I implemented the Mouse Watcher to give users control over variables like shape dimensions, sound volume, and Shimmer effect intensity. The Video In Watcher, paired with the Dots effect, made the circles move in response to the light, shadows, and colors captured from the camera input.
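Giving the mouse control over a variable comes down to rescaling one range onto another, which Isadora handles through an input's scale settings. Below is a minimal Python sketch of that mapping, assuming (hypothetically) that the mouse position arrives as 0–100 and that a shape width should span 10–60; the function name `scale` and both ranges are illustrative, not Isadora's API.

```python
def scale(value, in_min, in_max, out_min, out_max, clamp=True):
    """Linearly map value from [in_min, in_max] onto [out_min, out_max].

    Hypothetical helper: mimics rescaling a mouse position onto a
    patch variable such as shape width or sound volume.
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)  # position within the input range, 0..1
    if clamp:
        t = min(max(t, 0.0), 1.0)  # keep the output inside its range
    return out_min + t * (out_max - out_min)


if __name__ == "__main__":
    mouse_x = 50  # assumed 0-100 mouse position
    print(scale(mouse_x, 0, 100, 10, 60))  # shape width halfway between 10 and 60
```

Swapping `out_min` and `out_max` inverts the control, so moving the mouse right could, for example, lower the volume instead of raising it.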

See screen recording below (does not include sound 🙁 ).

One aspect I wanted to incorporate was computer vision, intending for movement captured by the Video In Watcher to influence the music volume. Although I couldn’t achieve this before the presentation, I learned how to make it work afterward.

I’m pleased that viewers stayed engaged with the patch, and achieving interesting transitions was a success. A classmate even mentioned enjoying the evolution of circles from spacious and mobile to tight and grid-like in the final scene, a detail I hadn’t initially considered but found satisfying upon reflection.

While I don’t regret adding interactivity, my future goal is to master using actors to autonomously manipulate variables for a more self-generated patch, aligning with the requirements of the assignment.

Pressure Project 1 – Generative Dark Patterns

Posted: January 30, 2024 Filed under: Uncategorized

I wasn’t really sure what I wanted to do for this project at first. We were tasked with creating something self-generating and almost unpredictable, which was daunting in software I had only just started learning. Still, I figured the best way to create something was to just begin.

HOUR 1

Within my first hour I started playing around with some of the different actors in Isadora. I wanted to see what would happen if I plugged the wave generator into various parts of the shapes actor. I noticed that when I put a sine wave into both the horizontal and vertical positions my default square kind of bounced around the screen, but not in a way that I felt was interesting. When I changed the wave type to a triangle though I noticed that the square started to bounce in a very familiar way, almost like an old screensaver (I was specifically reminded of the screensaver on my old family Gateway computer, both the bouncing logo and the pipes). Moreover, if I added multiple shapes on top of each other and messed with the phase there was a nice additive effect when shapes crossed that I liked.
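The difference between the two wave types can be sketched outside Isadora. The minimal Python sketch below assumes both waves are normalized to 0–1 (Isadora's Wave Generator and the Shapes position inputs use their own ranges), and the function names are hypothetical. A triangle wave moves at constant speed and reverses abruptly at each edge, which reads as a bounce; a sine wave slows as it approaches each edge, which reads as a swing. Giving the horizontal and vertical waves different periods produces the screensaver path, and the `phase` argument shifts where in the cycle each shape starts.

```python
import math


def triangle(t: float, period: float, phase: float = 0.0) -> float:
    """Triangle wave in [0, 1]: rises linearly for half a period, then falls linearly."""
    x = (t / period + phase) % 1.0
    return 2 * x if x < 0.5 else 2 * (1 - x)


def sine01(t: float, period: float, phase: float = 0.0) -> float:
    """Sine-shaped wave rescaled to [0, 1]; it slows down near 0 and 1."""
    return 0.5 - 0.5 * math.cos(2 * math.pi * (t / period + phase))


def position(t: float, period_x: float, period_y: float, wave=triangle):
    """Drive horizontal and vertical position with two waves of different periods."""
    return wave(t, period_x), wave(t, period_y)


if __name__ == "__main__":
    # Triangle drive: constant speed with a sharp reversal at each edge.
    for t in range(9):
        x, y = position(t, period_x=4, period_y=6)
        print(f"t={t}: x={x:.2f}, y={y:.2f}")
```

With periods of 4 and 6, both coordinates reach an edge together only at t = 0 and again at t = 6, so a perfect corner hit is rare. With less tidy periods it may never happen at all, which is the near miss that keeps people watching a bouncing logo.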

HOUR 2

I wanted to randomize the different shapes moving around my space. After looking through a few tutorials and posts, I came across this post about using a counter to select different timecodes: https://community.troikatronix.com/topic/6399/answered-using-a-list-of-timecodes-to-trigger-events-in-this-case-lights-through-midi. This interested me, and I figured I could do something similar to create a loop that would activate different shapes at once. After trying for a while I couldn’t get my loop to work, although I got very close. My logic kept short-circuiting.

I figured I would give up on this and just manually make my randomized entrances. I still had no clue what I wanted to do though, and I was getting a bit worried. Had I squandered all this time? Was I going in the right direction?

EPIPHANY

I started musing on the part of this project about grabbing people’s attention. What was it about Conway’s Game of Life or bouncing screen savers that had people staring at them for hours? For Game of Life surely there are emerging patterns and self-generating complexity, something obviously compelling to me, but why was a bouncing logo just as enthralling? I remembered that I used to try and predict when that logo would get caught perfectly in a corner of the screen. It never would, but it got so close so many times, and that’s why I kept watching. There’s something about being so close to perfection that is strangely alluring.

While we were discussing the second reading by Hari and Thanassis, I had a thought. We got on the topic of dark patterns, specifically within games like Candy Crush, and I was reminded of those horrible mobile ads that I would get occasionally. The idea is that they show what looks like really easy gameplay, but whoever is playing deliberately plays badly and fails, to bait you into downloading the game. It’s a very sneaky tactic that acts as a gateway into games with even more dark patterns. I was also reminded of several trends a year ago on TikTok and YouTube where content creators started putting gameplay from popular games into the bottom or corner of their videos (Subway Surfers in particular became a huge meme). The idea was that adding more lively footage kept people from scrolling away from an otherwise dull video, which in turn increased revenue because it inflated watch time.

These emerging patterns intrigued me, as they were real evolving trends built on the foundation of retaining attention. They were just as unpredictable to me as Conway’s Game of Life, just as captivating, and just like one of those tiles, the trend disappeared, as do many. I wanted to make something that was a commentary on this, tying it all together with the earliest and simplest attention grabber I remembered, that being the bouncing screen saver.

HOUR 3

I spent the next hour collating and integrating several different clips of gameplay from various titles I saw used in the trends I talked about earlier, and I will link those sources below:

- Mobile ads: Mobile game ads (youtube.com)

- Subway Surfers Gameplay: Subway Surfers Gameplay (PC UHD) [4K60FPS] (youtube.com)

- Minecraft Parkour: 11 Minutes Minecraft Parkour Gameplay [Free to Use] [Download] (youtube.com)

- Temple Run Gameplay: Temple Run 2 (2023) – Gameplay (PC UHD) [4K60FPS] (youtube.com)

- Fortnite Gameplay: Fortnite Gameplay 4K (No Commentary) (youtube.com)

I trimmed each video down to 1 minute in Adobe Premiere (for the footage of different mobile game ads, I selected a few ads that I felt were most emblematic of my own experiences). After that I created a separate scene for each clip, which I would load in below my screensaver squares using Activate Scene and Deactivate Scene.

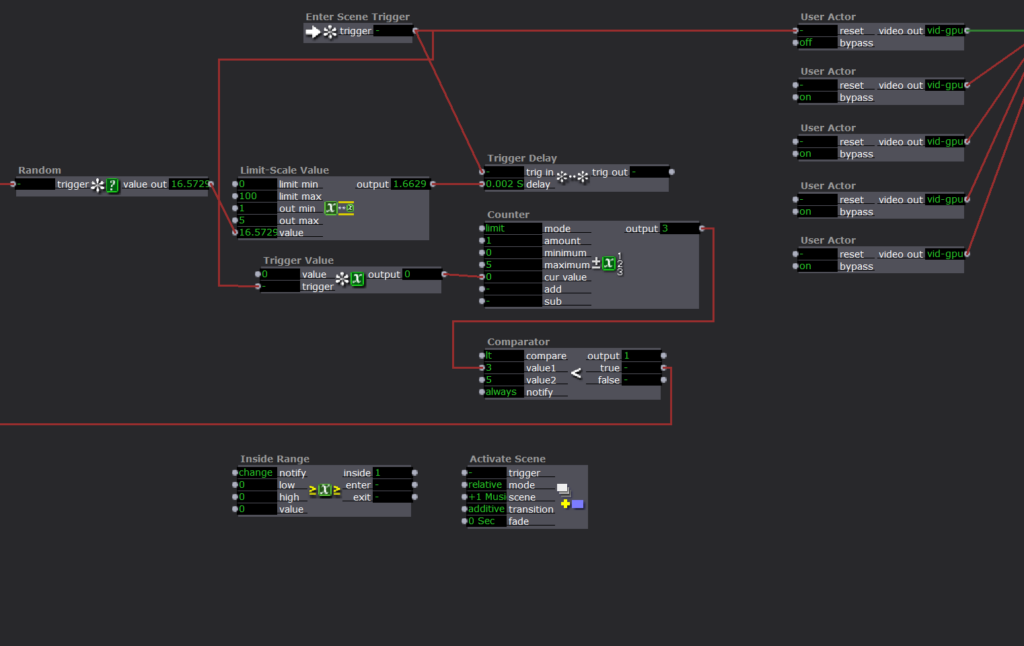

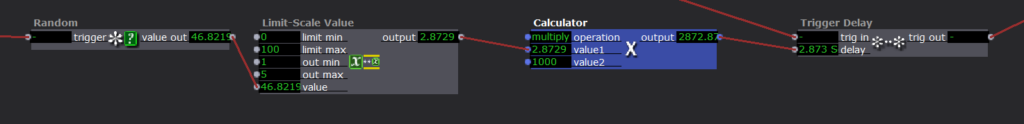

I also figured out that you have to multiply any randomized values by 1000 before inserting them into time-based inputs because of the way Isadora treats seconds versus normal floats.

HOUR 4

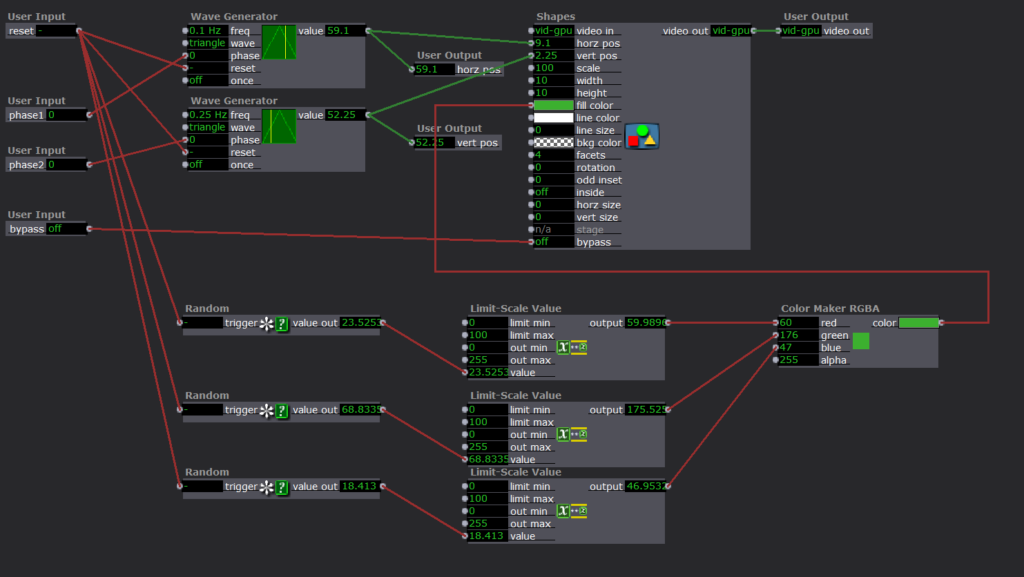

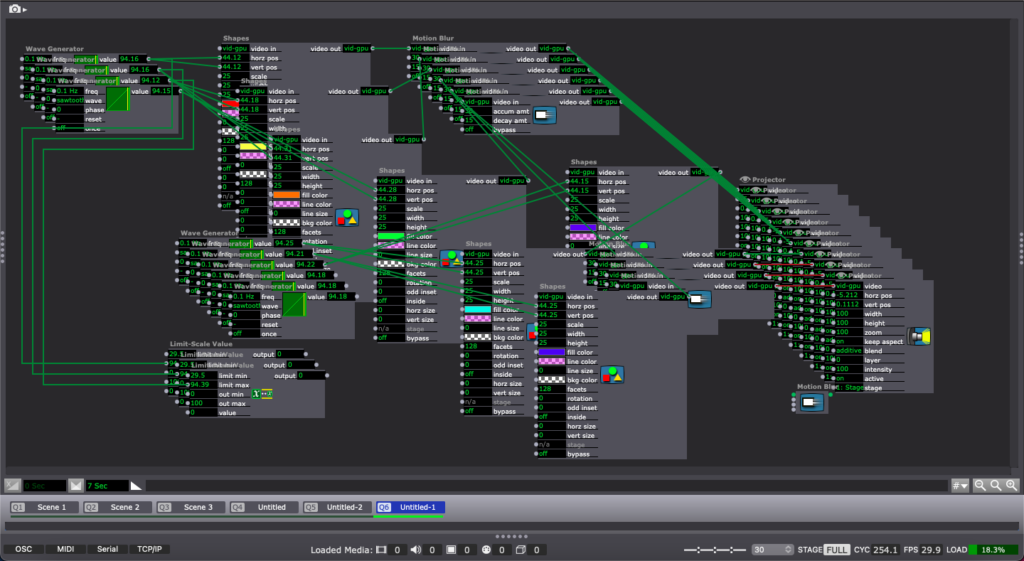

I created a user actor for each of my little bouncing shapes and for the instantiation of those shapes, as well as adding more randomized elements like the phase (which would affect where the shapes bounced), the color, and the timing at which they appeared.
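The randomized properties in each user actor can be pictured as a small record rolled fresh for every shape. The Python sketch below is a hypothetical stand-in for that Isadora logic: the `ShapeParams` name, the 0–1 phase range, the 0–255 color channels, and the 60-second entrance window are assumptions chosen for illustration. The entrance time is converted from seconds to milliseconds, mirroring the multiply-by-1000 step described earlier and assuming the time input expects milliseconds.

```python
import random
from dataclasses import dataclass


@dataclass
class ShapeParams:
    phase: float      # offset into the wave cycle, 0-1 (changes where the shape bounces)
    color: tuple      # (r, g, b), each channel 0-255
    entrance_ms: int  # when the shape appears, in milliseconds


def random_shape(rng: random.Random, max_delay_s: float = 60.0) -> ShapeParams:
    """Roll one shape's random phase, color, and entrance time (hypothetical ranges)."""
    return ShapeParams(
        phase=rng.random(),
        color=(rng.randint(0, 255), rng.randint(0, 255), rng.randint(0, 255)),
        # Seconds -> milliseconds, assuming the time input expects milliseconds.
        entrance_ms=int(rng.uniform(0, max_delay_s) * 1000),
    )


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so a run can be reproduced
    for shape in (random_shape(rng) for _ in range(5)):
        print(shape)
```

Passing in a seeded `random.Random` keeps the piece unpredictable for viewers while still letting the same sequence be replayed when debugging.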

HOUR 5

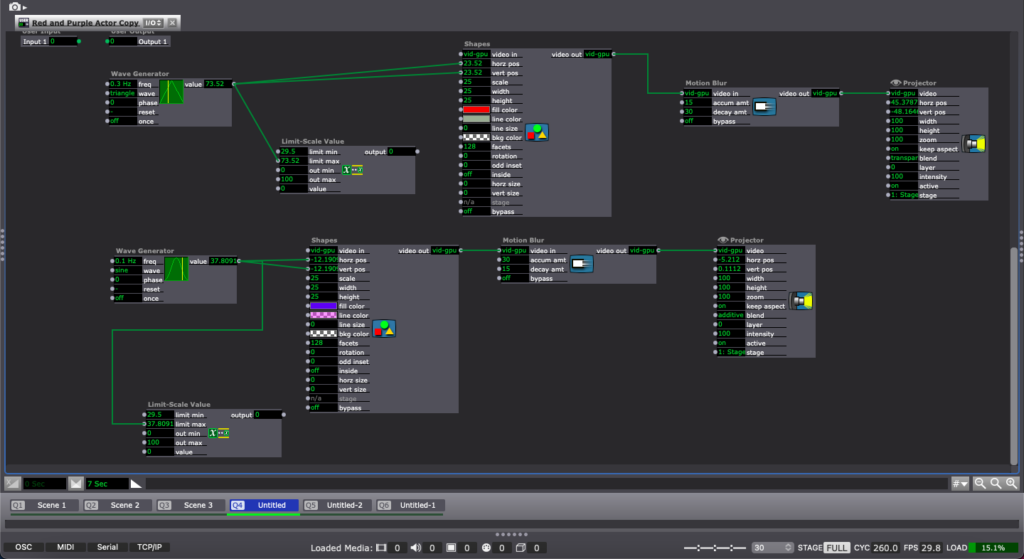

In the final hour I took time to polish and organize. I created some quick logic that would look at the vertical and horizontal positions of several shapes, compare them, and load/unload gameplay footage based on those values. I also took the time to add a piece of music that I felt captured the feel of my experience. The piece is called Cascades by Disasterpiece and it’s from one of my favorite games, Hyper Light Drifter: https://youtu.be/bKkl9iRB9-c?si=jy81Xwh1ZtegPbJJ.

SHOWTIME

People reacted differently from what I expected, but I’m really glad they did. Everyone caught onto the fact that the experience was probably a critique or was representative of some trend, which I thought was great. Nathan immediately recognized the DVD screensaver-like bouncing of the shapes, which I also appreciated, and Orlando said that the footage had YouTube tutorial vibes, which wasn’t a connection I drew at first but is incredibly apt. Nick commented on a moment where the shapes seemed to fill in a black section of the screen while footage came up all around it, which I thought was cool and pretty close to how the footage is actually activated. There was a lot of talk about how the piece reflects on me as an artist, which was great, and people noted that the music didn’t seem to loop, which helped build anticipation because it made the cut harder to notice. There was also a question about whether the music was diegetic, which I hadn’t considered at all, but I can’t stop thinking about it now.

Overall, I’m glad to have heard people’s reactions and the meanings and emotions they drew from the piece. I’m also happy with what I got done in the time I had. I was worried at first, but once I figured out the intent behind what I wanted to do, things went smoothly.

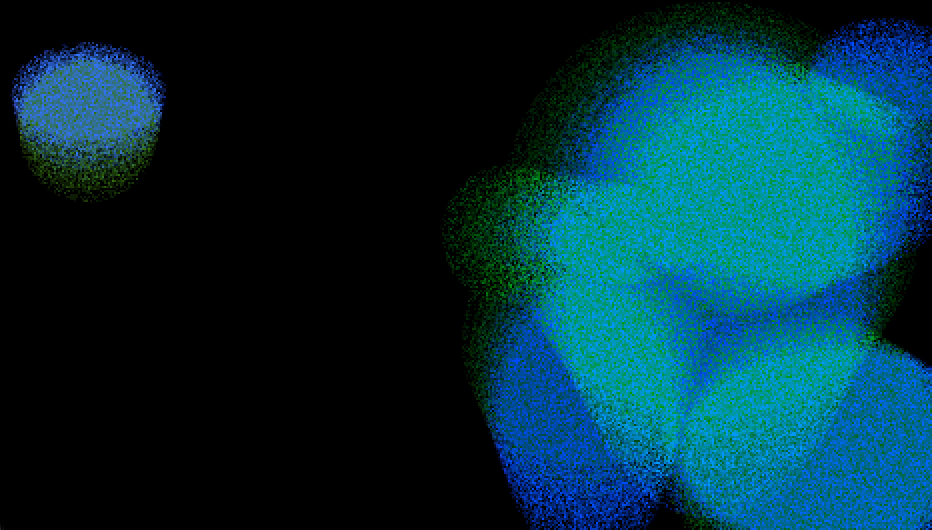

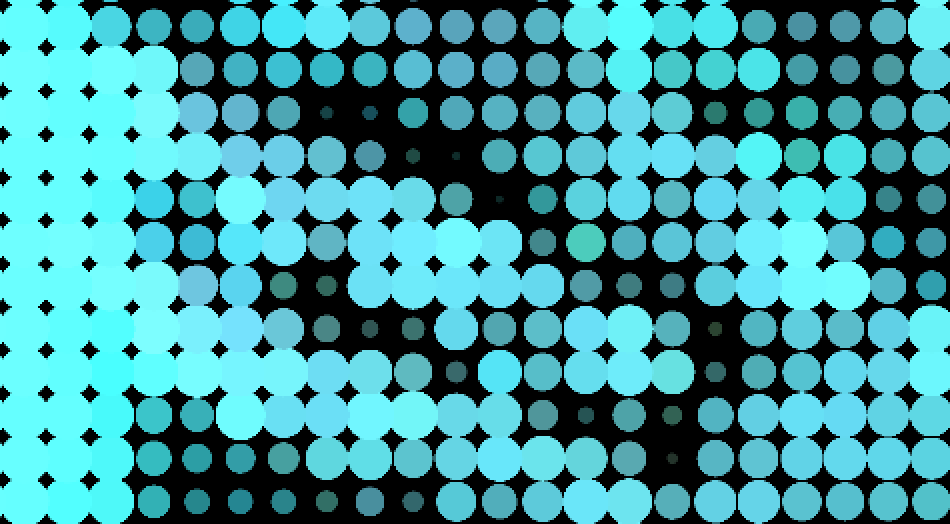

Pressure Project 1- The Aura of Circles

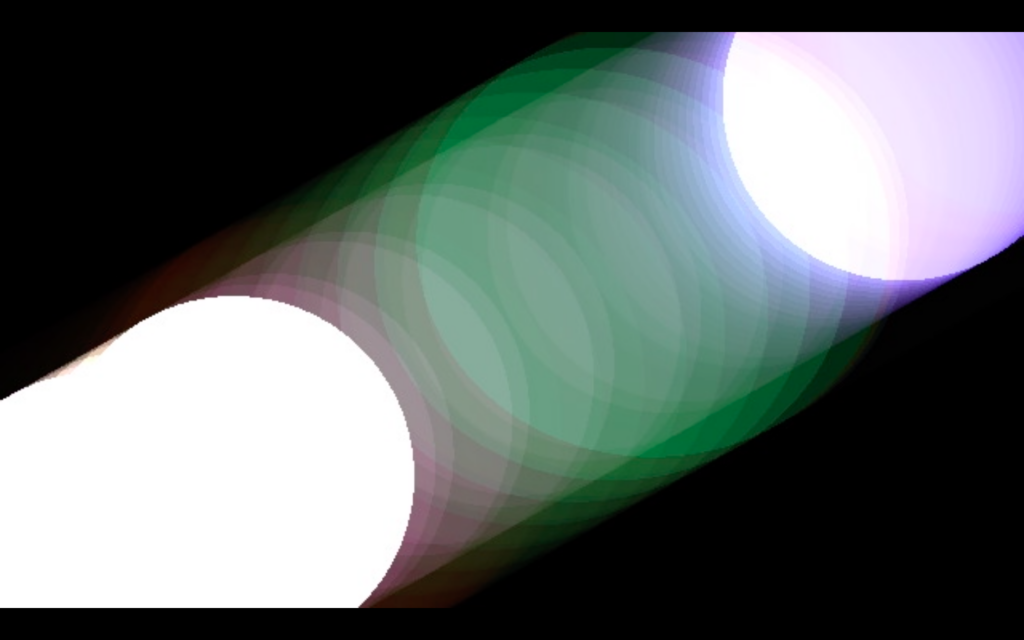

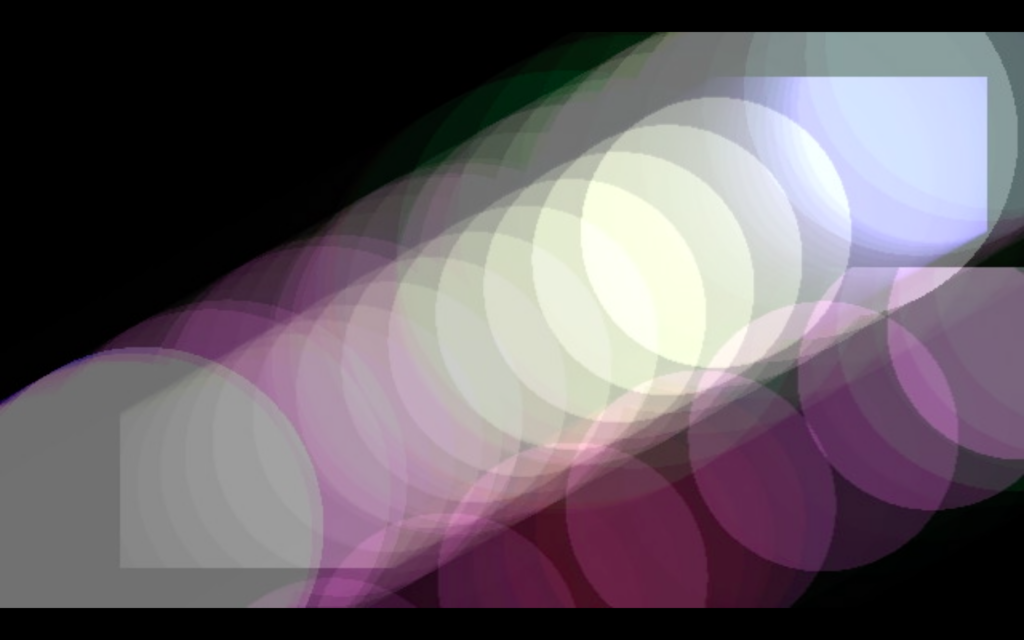

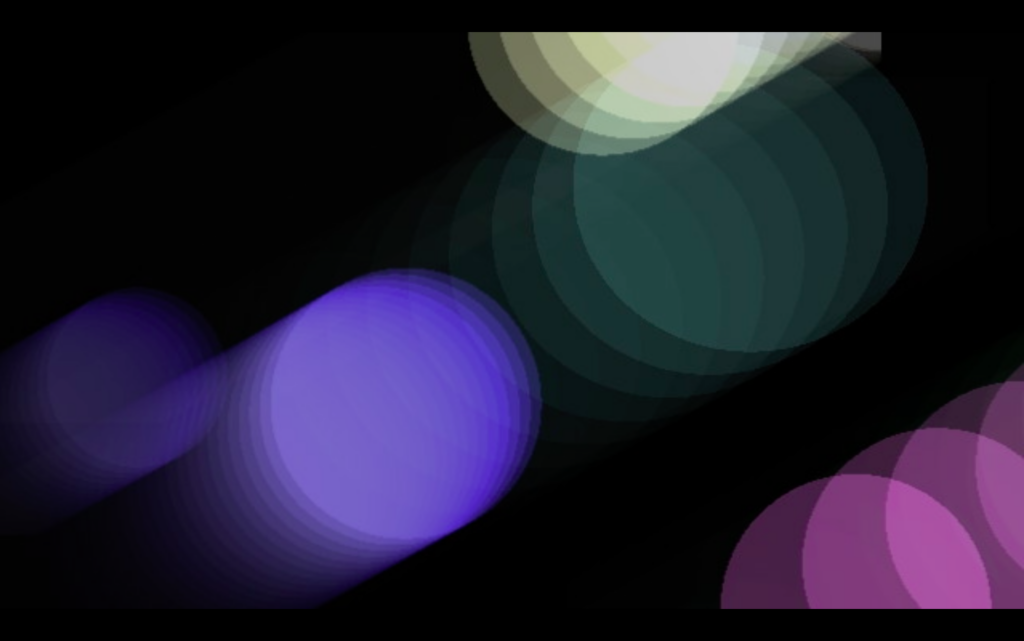

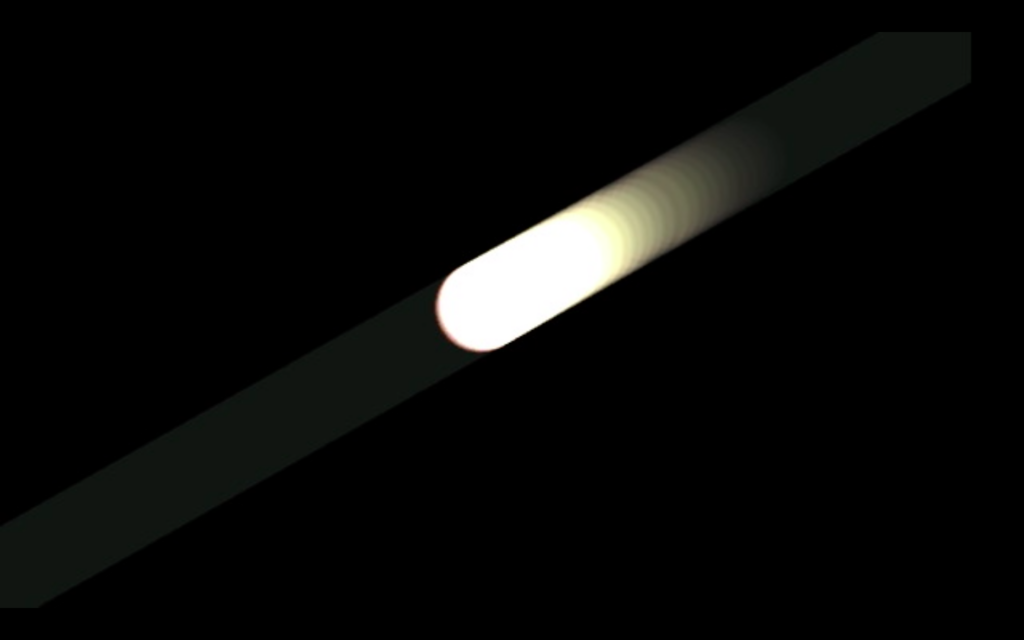

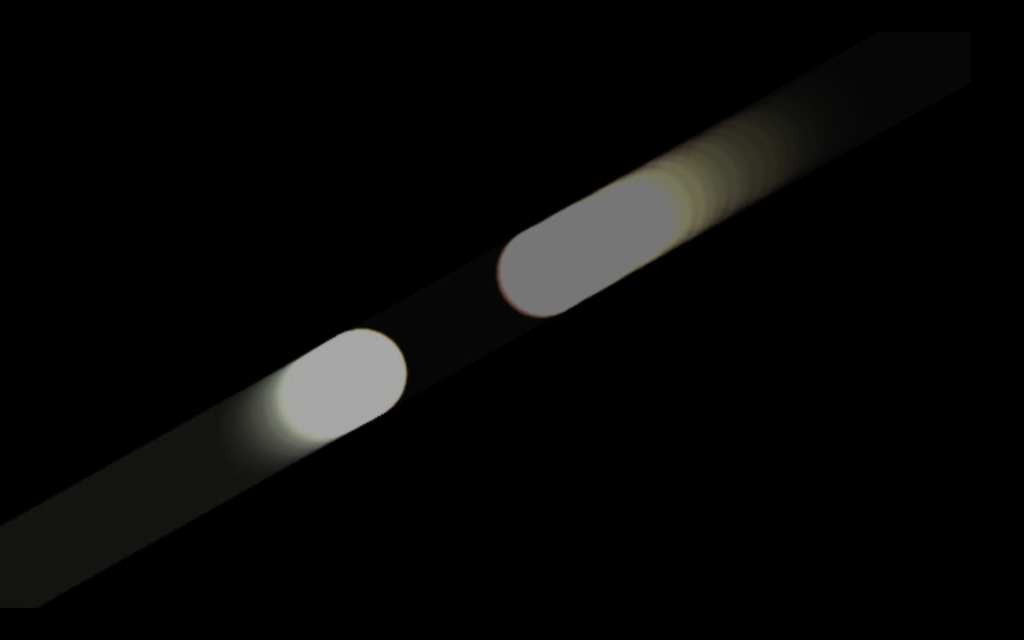

Posted: January 29, 2024 Filed under: Uncategorized

For this pressure project we were assigned to devise something that could hold your attention for as long as possible. For the first two hours I was simply clicking through all of the options Isadora offered. I wanted to start simple and play with circles. As I discovered more of Isadora, I had the idea to create multiple circles that leave a trail of their own color as they move.

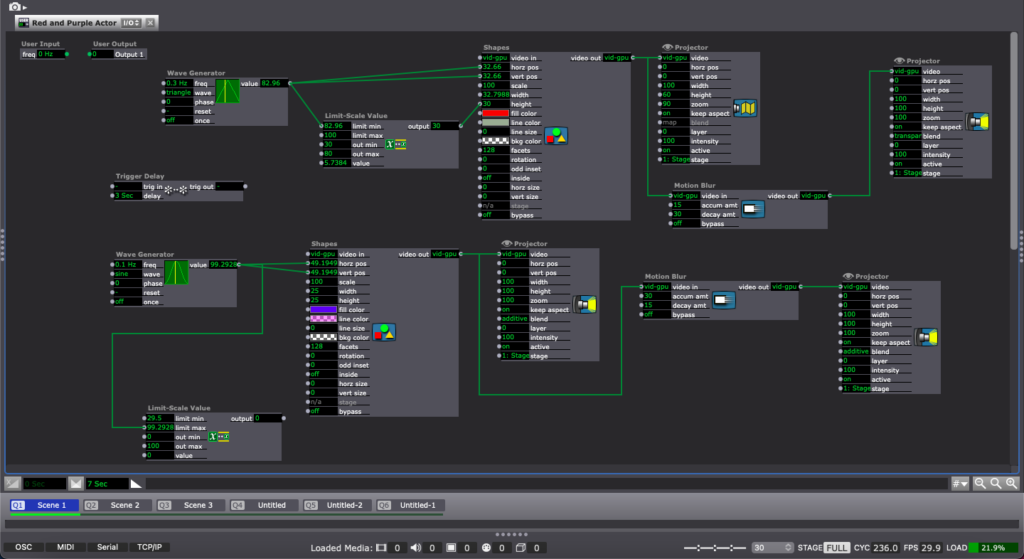

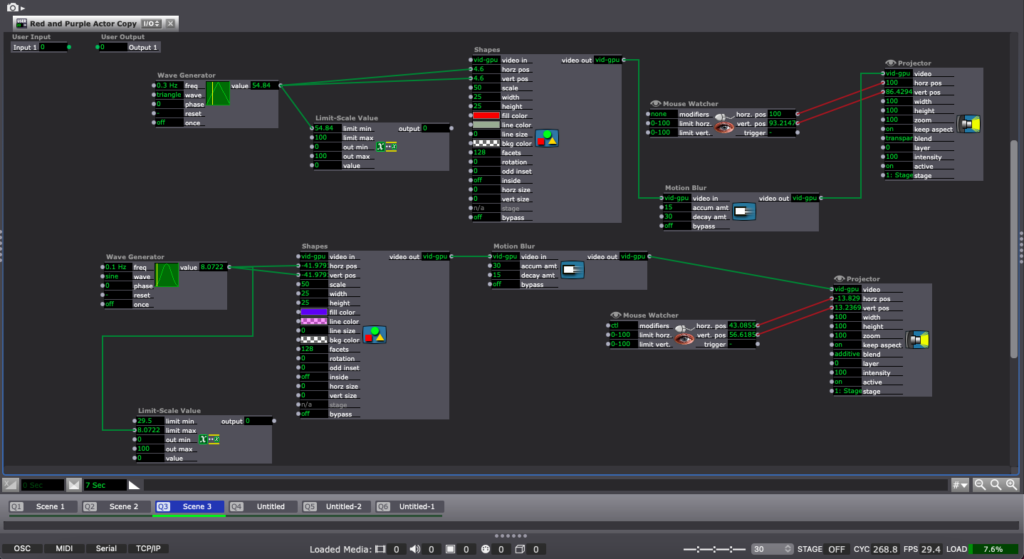

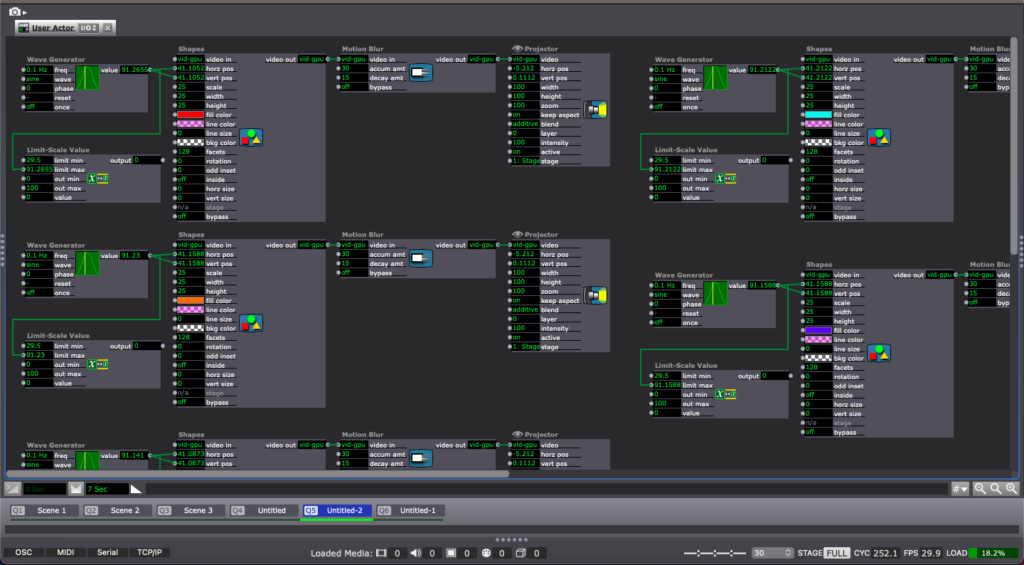

I was inspired by auras and the idea of someone or something holding a distinctive atmosphere or quality. I grappled with what Isadora could do, and specifically with how to create a colorful trail. Thankfully I found motion blur, and that’s what ignited my idea. I started clicking through shapes and motion blur (specifically messing with color) and somehow created a colorful trail. Unfortunately, I have no idea how I did that. I created 6 scenes and used User Actors in every scene except the last, with a 7-second transition between each scene.

SCENE 1 (4 user actors, different colors moving in a diagonal)

SCENE 2 & 3 (2 user actors, different colors and scale moving diagonally on the screen)

SCENE 4 I wanted the circles to start moving all over the screen, and I discovered the Mouse Watcher! The Mouse Watcher let me move the designated circles wherever I dragged my mouse on the screen. I’m now curious how I can get each circle to move in its own pattern without me dragging my mouse.

SCENE 5 I became intrigued by what happens when all of the colored circles come together. What would it look like? What color trail would it leave?

SCENE 6

By the 5-hour mark, this is what I settled on. I wasn’t able to incorporate the “Trigger” or “Jump++” actors; however, I’m proud of what I was able to accomplish. I’m interested in how the circles, or their pathways, could be affected by audio or some kind of sound. I also see this idea going beyond shapes, possibly looking at body movement and having an aura trail that tracks a body as it moves or dances.

Class Feedback:

My project reminded my classmates of:

- a lens flare

- movie intro logo

- rainy car crash scene in a movie

- someone shining a light in your face as you’re waking up

- moving particles

- the trail of the color gives the black background texture

Orlando’s Bumped Article

Posted: January 23, 2024 Filed under: Uncategorized

https://dems.asc.ohio-state.edu/?m=202212

Bump Post Sp 2024

Posted: January 23, 2024 Filed under: Uncategorized | Tags: bump

Bump: How to Make an Imaginary Friend

Posted: January 23, 2024 Filed under: Uncategorized

“How To Make An Imaginary Friend” ‹ Devising EMS (ohio-state.edu)

Bump: Pressure Project #2: Dancing Depths

Posted: January 23, 2024 Filed under: Uncategorized

I find this project technically stimulating, and it conveys an interesting message about humans. The figures are familiar in anatomy, but there is almost a chameleon-like skin tapering around them, giving an alien or post-human effect. It seems that Axel used a Kinect device, which I know uses infrared technology to track movement. In this way, the computer vision filters out the projected light spectrum, which is interesting. Unlike the way we see these ethereal neon figures, the computer just sees boundaries. This means the cameras are unaffected by the artificial skins or personas mapped onto the humans. It makes sense that this IR technology was chosen, because I imagine it is more accurate in low-light conditions and less computationally expensive than visible-light computer vision algorithms.

However, I still think it would be interesting to experiment with this project using a regular camera, or a mix of IR and visible-light processing. This would let the interactive system experience humans through both their anatomy and their artificial skin. On a psychological level, this tradeoff in accuracy speaks to the confusion between what we are and what we want to be. I think a bit of messiness in tracking human motion and projecting onto it would account for the unclean boundaries between the two. The camera would have to contend with analyzing both the accentuated projected light and the faint shadow of the physical form.

BUMP: Pressure Project 1- Congestion- Min Liu

Posted: January 22, 2024 Filed under: Uncategorized

Bump: Tamryn McDermott’s “PP1: Breathing the text”

Posted: January 22, 2024 Filed under: Uncategorized

This is Pressure Project 1 from Tamryn McDermott. It sparks two thoughts in me:

– How to integrate kinesthetic sensation (in her case, the breath) with audio-visual elements. I think this works well in her project, where the pace of the book’s contracting motion follows the breathing rhythm. I am also especially interested in integrating haptic interaction.

– In the last paragraph, she mentioned a desire to introduce “more randomness,” which makes me ponder the relationship between randomness, complexity, and the range or scale of control.