PP2 – Stanford

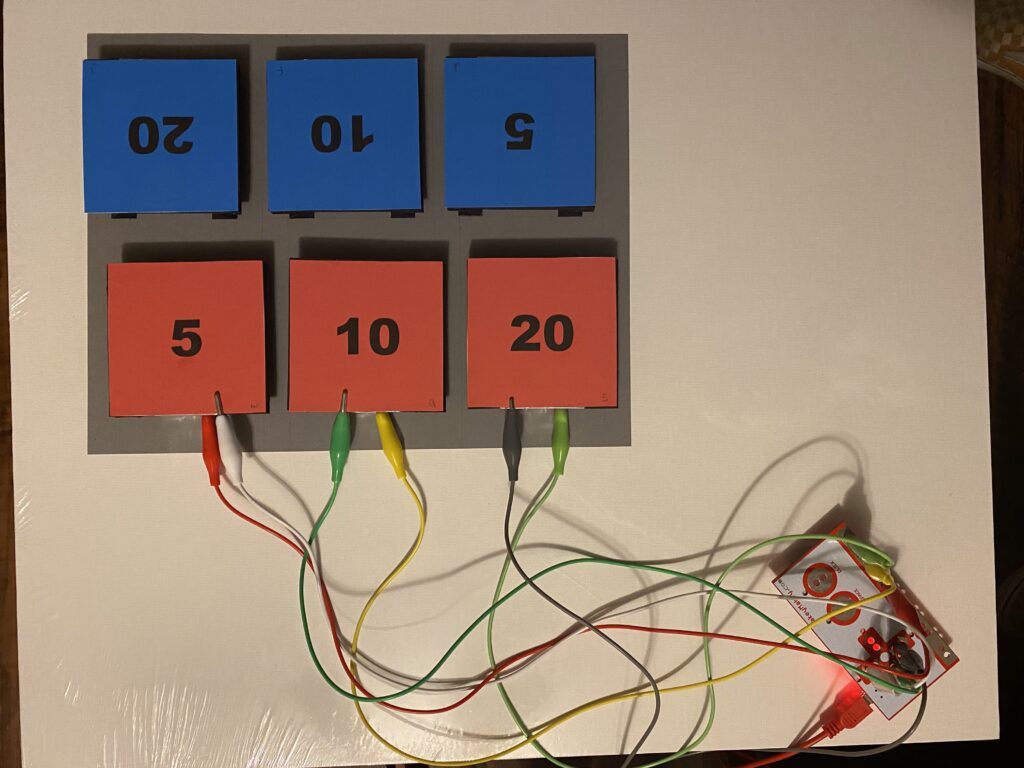

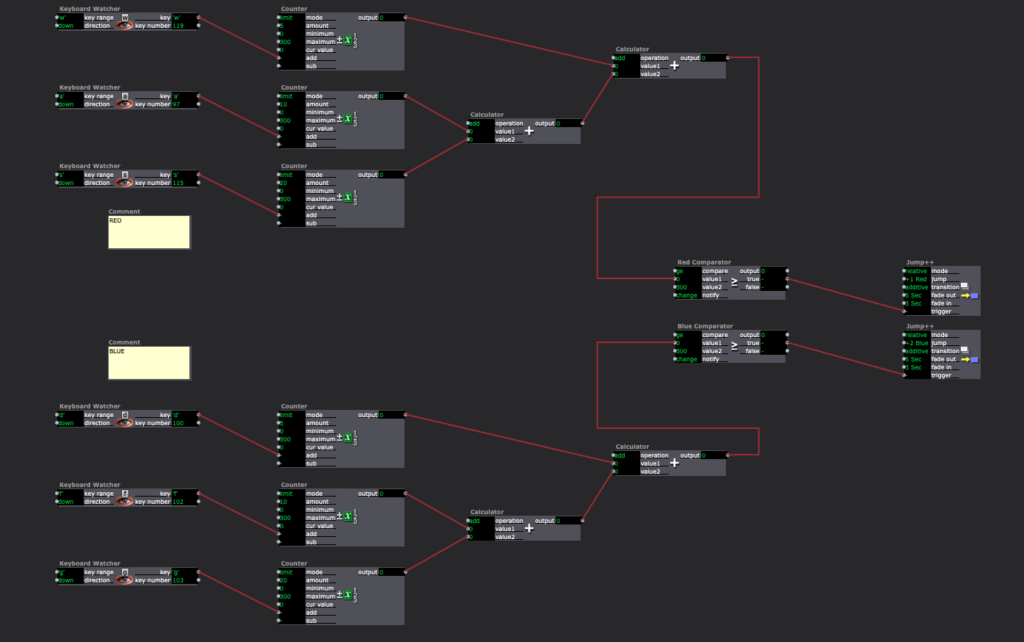

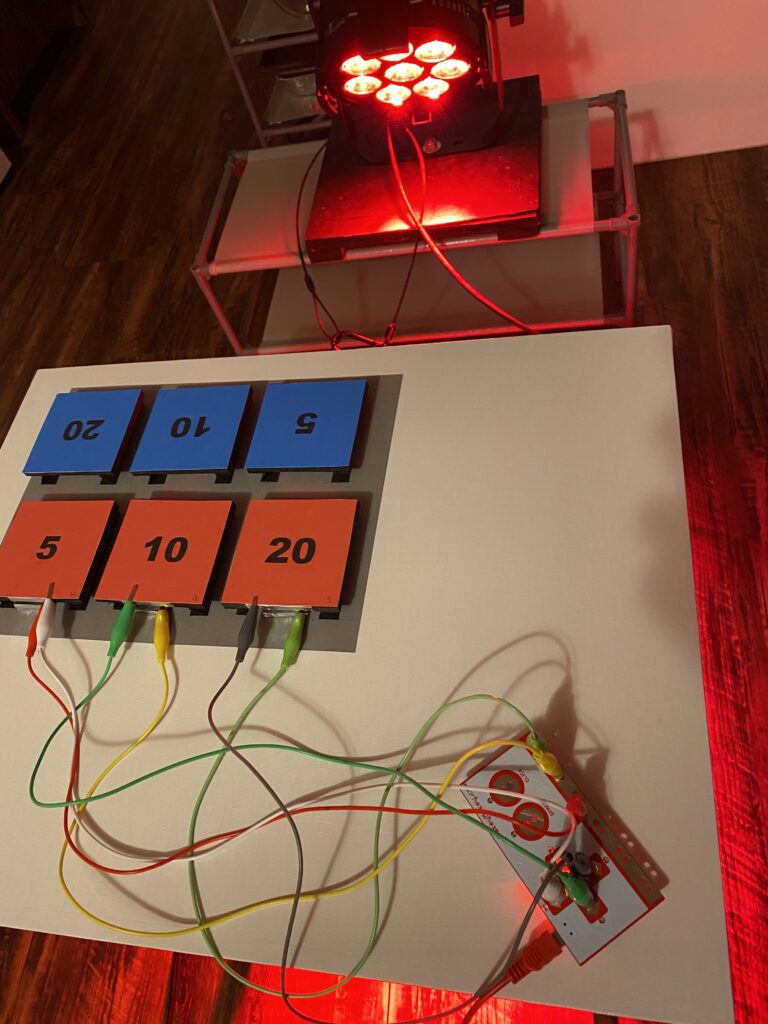

Posted: November 30, 2020 Filed under: Uncategorized

For this assignment, I used the Makey Makey to count the points in a card game. I created 3 buttons for each team, labeled 5, 10, and 20, which are the point values of the cards in the game. My goal was to have Isadora count the points for each team and, when one team reached the winning amount, turn on a light in the winning team’s color.

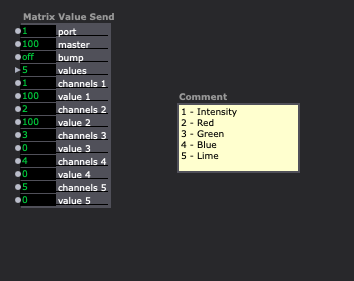

In addition to the Makey Makey, I used an ENTTEC Pro. This allowed me to send a signal to an LED fixture from my computer.

Each of the buttons was assigned a different letter on the Makey Makey. My patch used each letter to count by the value of the button it was associated with. It then added each team’s values together, and a comparator triggered a cue to turn on the light fixture in either red or blue when a team reached 300 points or more.
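The counting logic can be sketched outside Isadora in a few lines of Python. This is only an illustration of the idea, not the patch itself: the key letters in `KEY_MAP` are hypothetical (the post does not say which letters were used), and `tally` is a made-up helper name.

```python
# Hypothetical key-to-button mapping; the real Makey Makey letters are not given in the post.
KEY_MAP = {
    "a": ("red", 5), "s": ("red", 10), "d": ("red", 20),
    "j": ("blue", 5), "k": ("blue", 10), "l": ("blue", 20),
}
WINNING_SCORE = 300  # threshold from the post


def tally(keys):
    """Add up points per team and return (scores, winner or None)."""
    scores = {"red": 0, "blue": 0}
    for key in keys:
        if key not in KEY_MAP:
            continue  # ignore keys that are not wired to a button
        team, points = KEY_MAP[key]
        scores[team] += points
        if scores[team] >= WINNING_SCORE:
            return scores, team  # comparator fires: light this team's color
    return scores, None


scores, winner = tally(["d"] * 15 + ["j"])
print(scores, winner)
```

The early return plays the role of the comparator: as soon as one team's running total reaches 300 or more, counting stops and that team's color is reported.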

Tara Burns – Cycle Two

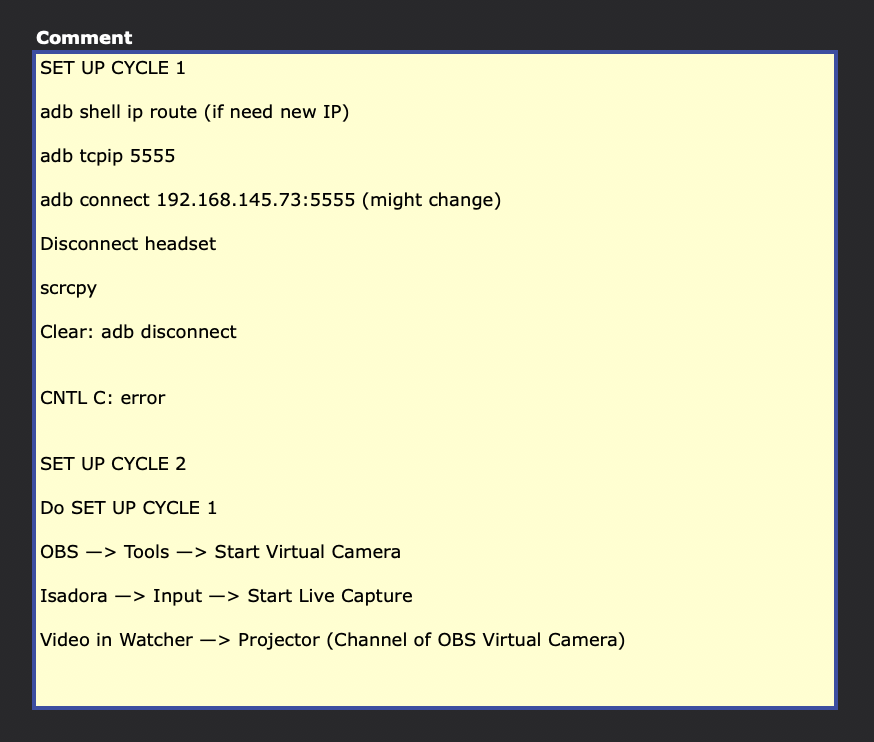

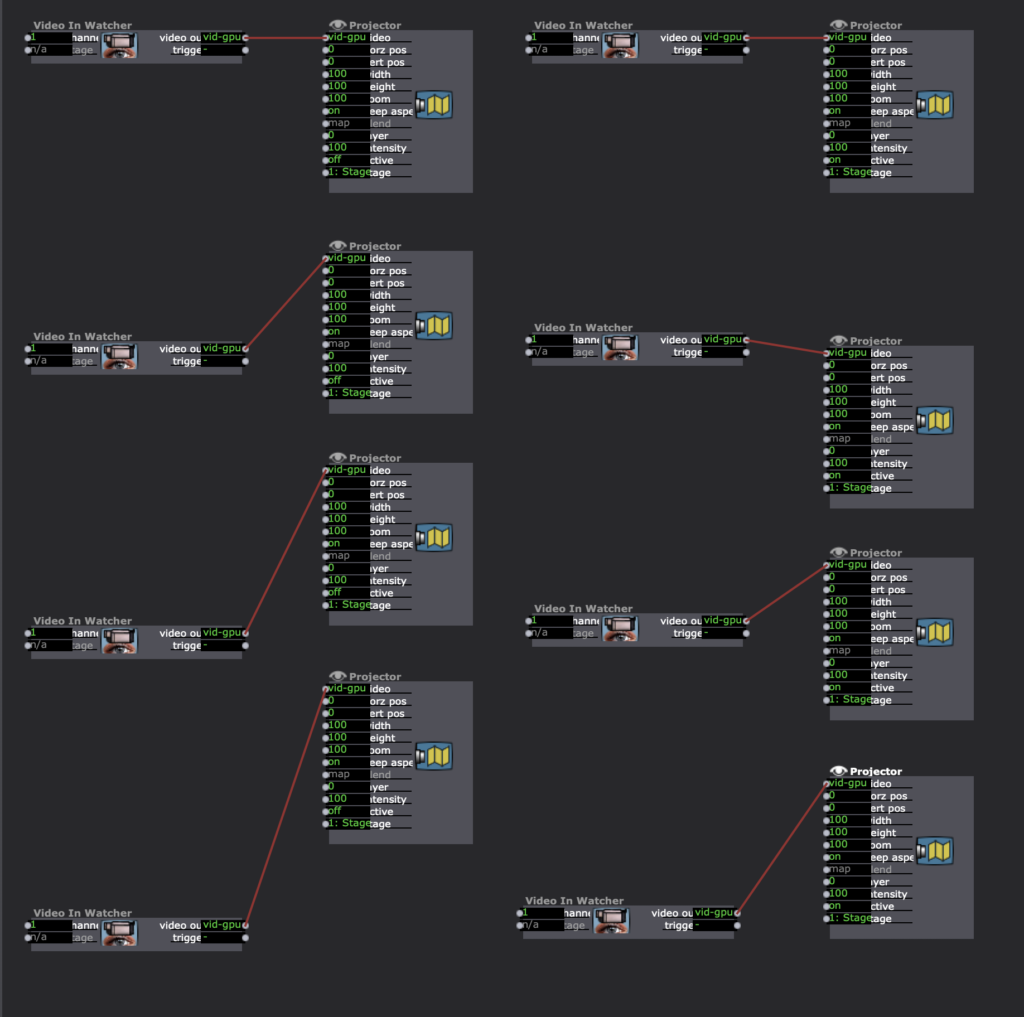

Posted: November 27, 2020 Filed under: Uncategorized

Goals

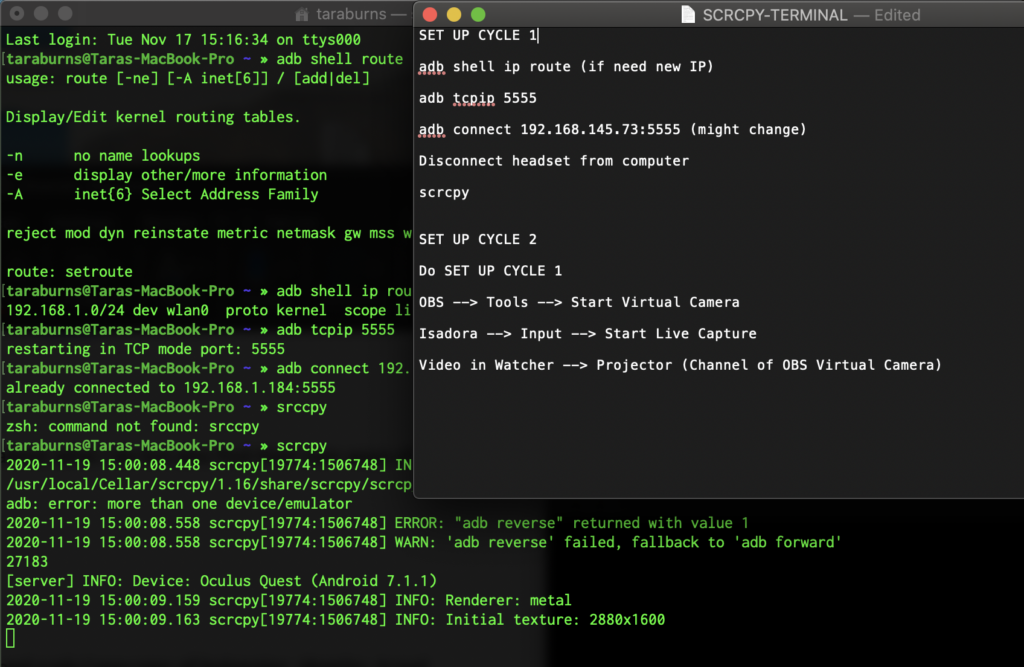

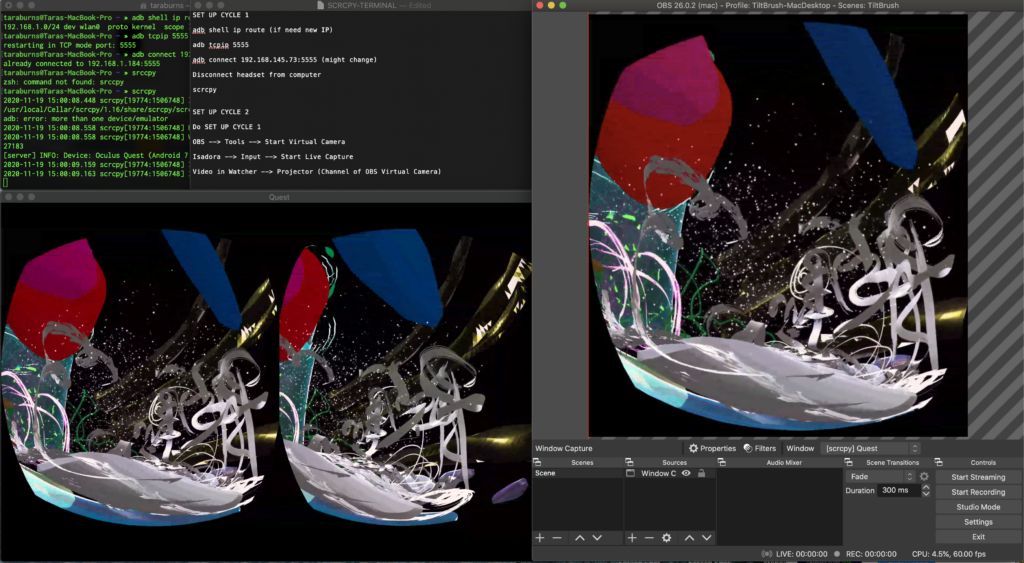

– Using Cycle 1’s setup and extending it into Isadora for manipulation

– Testing and understanding the connection between Isadora and OBS Virtual Camera

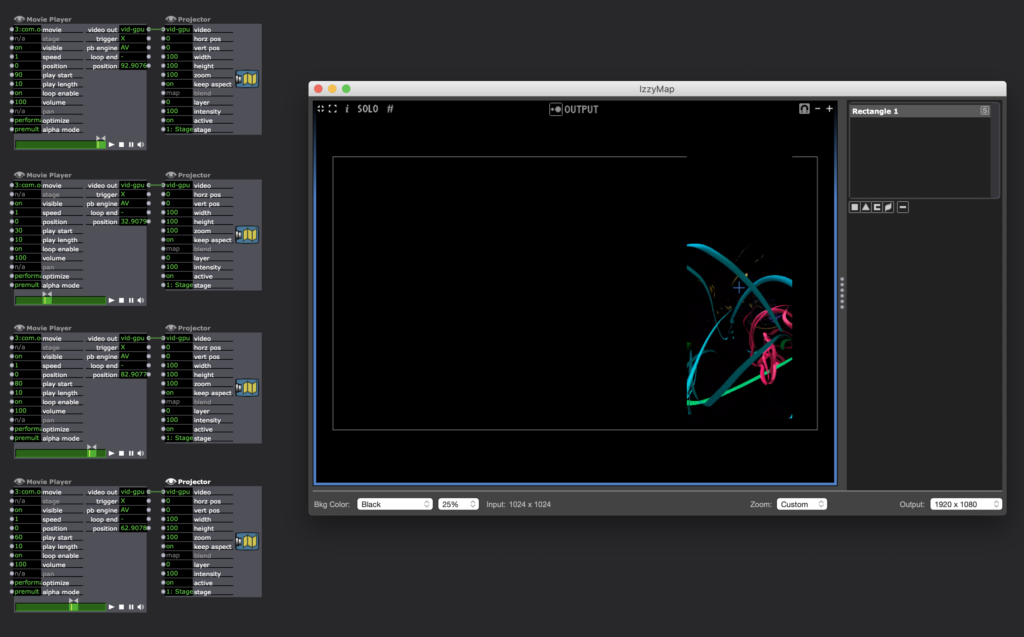

– Testing prerecorded video of paintings and live streamed Tilt Brush paintings in Isadora

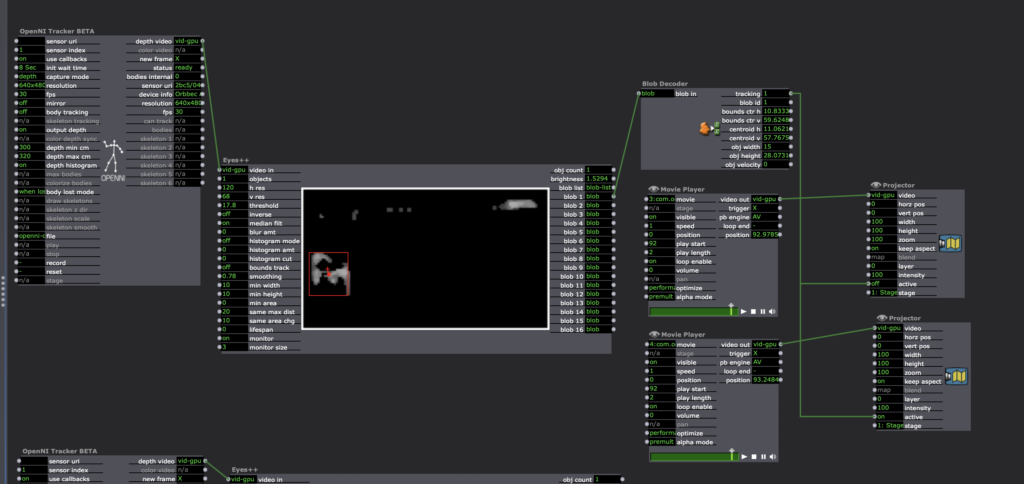

– Moving to a larger space for position sensitive tracking through Isadora Open NDI Tracker

– Projection mapping

Challenges and Solutions

– macOS Catalina doesn’t function with Syphon, so I had to use OBS Virtual Camera in Isadora

– Not having a live body to test motion tracking and pinpoint specific locations required a lot of going back and forth. I wouldn’t be able to do this in a really large space, but in my smaller space I put my Isadora patch on the projection, showing half the product and half the patch, so I could see what was firing and what the projection looked like at the same time.

– Understanding the difference between the blob and skeleton trackers and what exactly I was going for took a while. I spent a lot of time on the blob tracker and then finally realized the skeleton tracker was probably what I actually needed in the end.

– I realized the headset will need more light to track if I’m to use it live.

Looking Ahead

The final product of this goal wasn’t finished for my presentation, but I finished it this week, which brought up some important choices I need to make. In my small space, if I’m standing in front of the projection it is very hard to see if I’m affecting it because of my shadow, so either the projection needs to be large enough to see over my head or my costume needs to be able to show the projection.

I am also considering a reveal, where the feed is mixed up (pre-recorded or live or a mix – I haven’t decided yet) and as I traverse from left to right the paintings begin to show up in the right order (possibly right to left/reverse of what I’m doing). Instead of audience participation, I’m thinking of making this performer-triggered: my own position is tracked and triggers the shift in content perhaps 3-4 times, and then it stays in the live feed. Once I get to the other side, it is a full reveal of the live feed coming from my headset. This will be tricky because the headset needs light to work (more than the projection provides), which is one reason I switched to using movies in my testing: I didn’t have the proper lights to light me so that the headset could track and you could still see the projection. I was also considering triggering the height of the mapped projection panel (like Kenny’s animation from class) and revealing what is behind it that way, although I do want to keep the fade in and out.

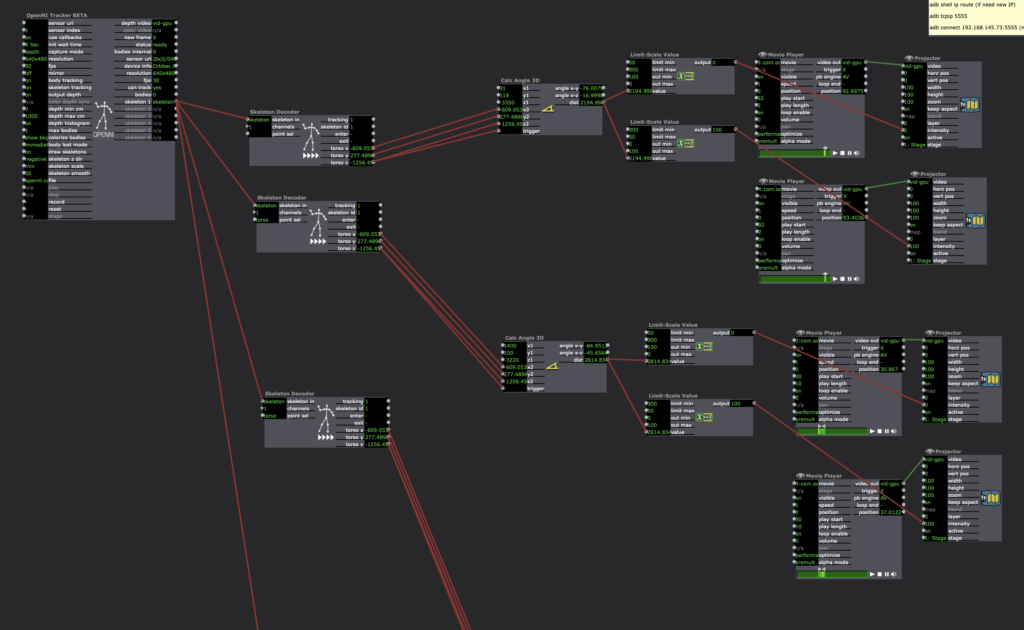

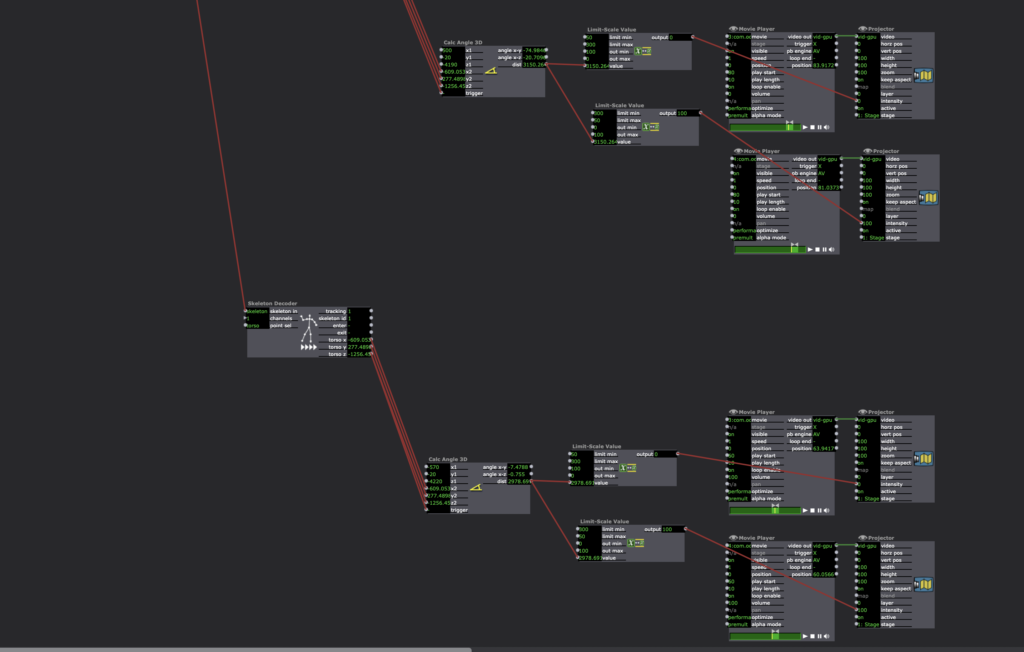

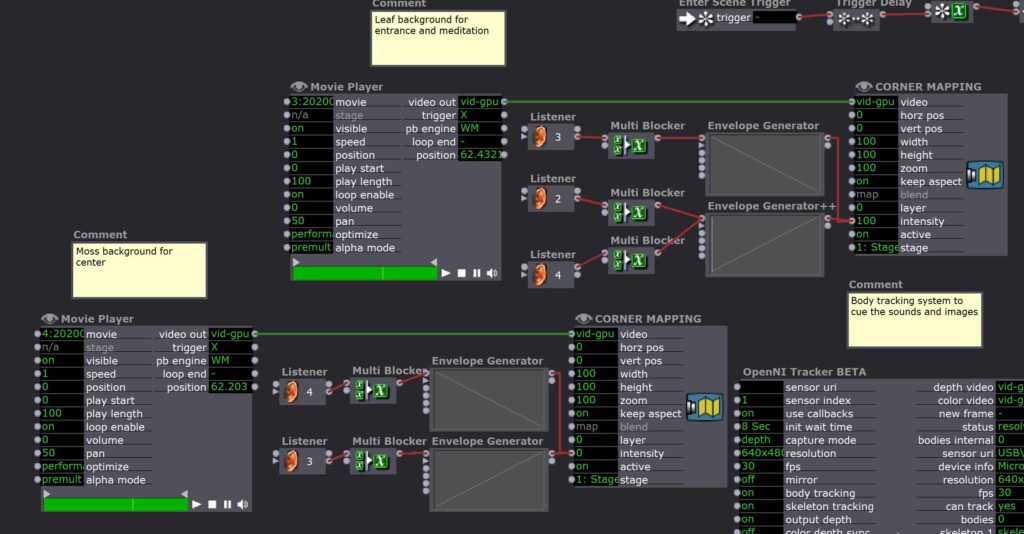

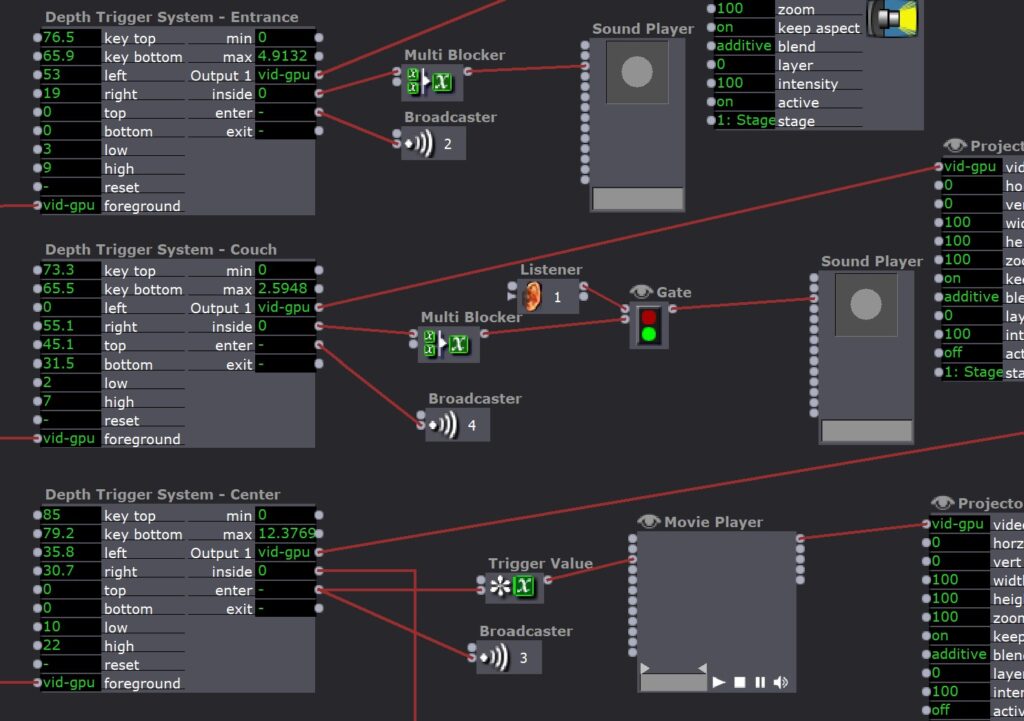

Movement Meditation Room Cycle 2

Posted: November 24, 2020 Filed under: Uncategorized

Cycle 2 of this project was to layer the projections onto the mapping of each location in the room. I started with the backgrounds, which fade in and out as the user moves around the room. A gentle view of leaves greets them upon entering and while they are meditating, and when they walk to the center of the room it shifts to a soft mossy ground. This was pretty easy because I already had triggers built for each location, so all I had to do was connect the intensity of the backgrounds to a listener for each location. I added the multiblockers so that a location wouldn’t keep re-triggering while the user stayed in it; they are timed to the duration of the sound that plays at each place.
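As a rough illustration of the background fades, here is a small Python sketch of intensities stepping toward a target chosen by location. It is not how Isadora does it internally; the location names, the `fade_step` helper, and the step size are all assumptions made for the example.

```python
# Hypothetical location names; the post describes leaves on entry and while
# meditating, and moss at the center of the room.
BACKGROUND_FOR = {"entry": "leaves", "couch": "leaves", "center": "moss"}


def fade_step(levels, location, step=0.25):
    """Move each background's intensity one step toward its target (0.0 or 1.0)."""
    target = BACKGROUND_FOR[location]
    new_levels = {}
    for name, level in levels.items():
        if name == target:
            new_levels[name] = min(1.0, level + step)  # fade in, clamp at full
        else:
            new_levels[name] = max(0.0, level - step)  # fade out, clamp at zero
    return new_levels


levels = {"leaves": 1.0, "moss": 0.0}
for _ in range(4):  # the user walks to the center and stays there
    levels = fade_step(levels, "center")
print(levels)
```

Calling `fade_step` once per frame gives a crossfade: the leaves fall to zero while the moss rises to full intensity.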

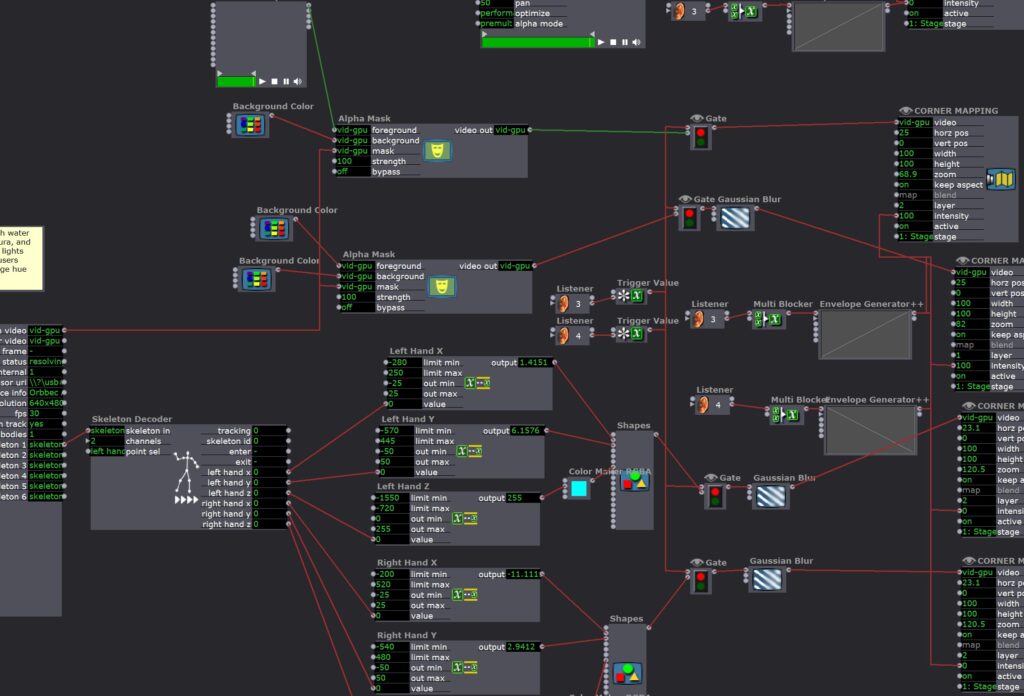

The part that was more complicated was what I wanted for the experience in the center. I wanted the user to be able to see themselves as a “body of water” and to be able to interact with visual elements on the projection that made them open up. I wanted an experience that was exciting, imaginative, open-ended, and fun so that the user would be inspired to move their body and be brought into the moment exploring the possibilities of the room at this location. My lab day with Oded in the Motion Lab is where I got all of the tools for this part of the project.

I rigged up a second depth sensor so that the user could turn toward the projection and still interact with it, then I created an alpha mask out of that sensor data which allowed me to fill the user’s body outline with a video of moving water. I then created an “aura” of glowing orange light around the person and two glowing globes of light that tracked their hand movements. The colors change based on the z-axis of the hands so there’s a little bit to explore there. All of these fade in using the same trigger for when the user enters the center location of the room.
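The depth-to-color idea for the hand globes can be sketched as a simple linear blend. This is a guess at the general technique, not the actual patch: the two colors, the near and far depth limits, and the `lerp_color` name are all hypothetical.

```python
def lerp_color(near_rgb, far_rgb, z, z_near=0.5, z_far=2.0):
    """Blend between two RGB colors based on a hand's depth z (assumed meters)."""
    t = (z - z_near) / (z_far - z_near)
    t = max(0.0, min(1.0, t))  # clamp so hands outside the range keep an end color
    return tuple(round(a + (b - a) * t) for a, b in zip(near_rgb, far_rgb))


# Hypothetical colors: warm when the hand is close, cool when it is far away.
NEAR = (200, 100, 0)
FAR = (0, 100, 200)

print(lerp_color(NEAR, FAR, 0.5))
print(lerp_color(NEAR, FAR, 1.25))
print(lerp_color(NEAR, FAR, 2.0))
```

Clamping keeps the color stable when the sensor reports a depth outside the expected range, which helps avoid flicker at the edges of the tracked space.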

I am really proud of all of this! It took me a long time to get all of the kinks out and it all runs really smoothly. Watching Erin (my roommate) go through it I really felt like it was landing exactly how I wanted it to.

Next steps from here mean primarily developing a guide for the user. It could be something written on the door or possibly an audio guide that would happen while the user walks through the room. I also want to figure out how to attach a contact mic to the system so that the user might be able to hear their own heartbeat during the experience.

Here is a link to watch my roommate go through the room: https://osu.box.com/s/hzz8lp5s97qw5q47ar32cgblh5hus8rs

Here is the sound file for the meditation in case you want to do it on your own:

Tara Burns – Iteration X: Extending the Body – Cycle 1 Fall 2020

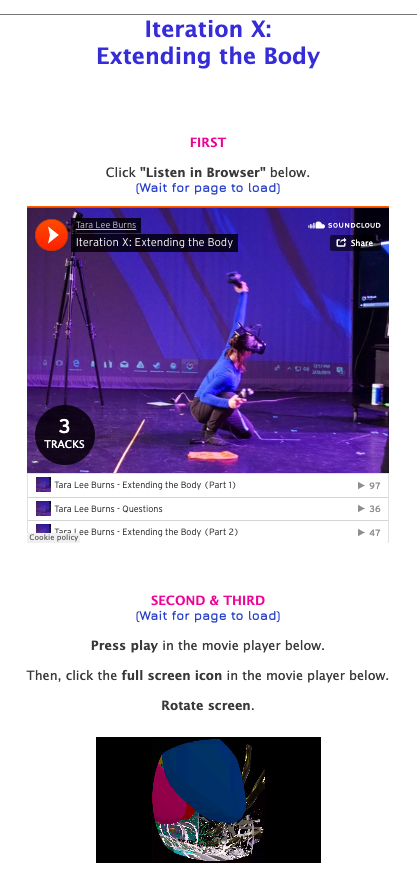

Posted: November 19, 2020 Filed under: Uncategorized

I began this class with the plan to make space to build the elements of my thesis, and this first cycle was the first iteration of my MFA thesis.

I envision my thesis as a three part process (or more). This first component was part of an evening walk around the OSU Arboretum with my MFA 2021 Cohort. To see the full event around the lake and other projects click here: https://dance.osu.edu/news/tethering-iteration-1-ohio-state-dance-mfa-project

In response to Covid, the OSU Dance MFA 2021 Cohort held a collaborative outdoor event. I placed my first cycle (Iteration X: Extending the Body) in this space. Five scheduled and timed groups were directed through a cultivated experience while simultaneously acting as docents to view sites of art. You see John Cartwright in the video above, directing a small audience toward my work.

In this outdoor space wifi and power were not available. I used a hotspot on my phone to transmit from both my computer and VR headset. I also used a battery to power my phone and computer for the duration.

Iteration X: Extending the Body asked the audience to follow the directions on the screen to listen to the soundscape, view the perspective of the performer, and imagine alternate and simultaneous worlds and bodies as forms of resistance.

Meditation Room Cycle 1

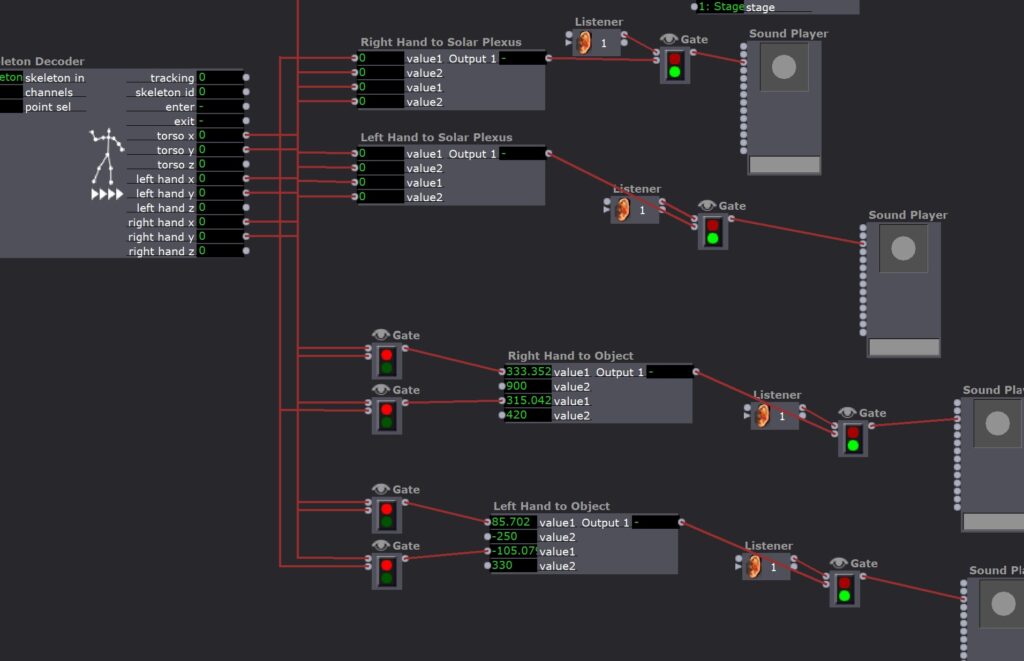

Posted: November 10, 2020 Filed under: Uncategorized

The goal for my final project is to create an interactive meditation room that responds to the user’s choices about what they need and allows them to feel grounded in the present moment. The first cycle was just to map out the room and the different activities that happen at different points in the space. There are two ways that the user can interact with the system: their location in space and the relationship of their hands to various points.

There are three locations in the room that trigger a response. The first is just upon entering: the system plays a short clip of birdsong to welcome the user into the room. From there the user has two choices. I am not sure as of right now if I should dictate which experience comes first or if that should be left for the user to decide. I think that I can make the program work either way. One option is to sit on the couch, which starts an 8 minute guided meditation that focuses on the breath, heartbeat, and stillness. The other option is to move to the center of the room, which is more of a movement experience where the user is invited to move as they like (with a projection across them that responds to their movement to come in later cycles). As they enter this location, a 4 minute track of ambient sound creates an atmosphere of reflection and might inspire soft movement. This location is primarily where the body tracking triggers are used.

Two of the body tracking triggers are used throughout the room. They trigger a heartbeat and a sound of calm breathing when the user’s arms are near the center of their body. This isn’t always reliable and sometimes seemed like too much with all of the other sounds layered on top, so I am thinking of shifting them to work only upon entry, and maybe keeping just the heartbeat in the center, using gates the same way that I did with the triggers used in the center of the room. The other two body tracking triggers use props in the room that the user can touch. There is a rock hanging from the ceiling that triggers the sounds of water when touched, and there is a flower attached to the wall that triggers the sound of wind when touched. Both of these have their gates turned on only when the user is in the correct space at the center of the room.
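The gating idea, where the prop triggers only pass while the user is in the center, can be sketched like this. The zone bounds, coordinate layout, and function names are hypothetical and only stand in for whatever the tracking data actually looks like.

```python
# Hypothetical floor-plan bounds for the center zone, as ((x_min, x_max), (z_min, z_max)).
CENTER = ((-0.5, 0.5), (1.0, 2.0))

# Prop-to-sound pairs described in the post.
PROP_SOUNDS = {"rock": "water", "flower": "wind"}


def in_zone(pos, zone):
    """True when an (x, z) floor position falls inside the zone's bounds."""
    x, z = pos
    (x_min, x_max), (z_min, z_max) = zone
    return x_min <= x <= x_max and z_min <= z <= z_max


def gated_sound(prop_touched, user_pos):
    """Return the sound to play, or None when the gate is closed."""
    gate_open = in_zone(user_pos, CENTER)
    if not gate_open:
        return None  # touches outside the center are ignored
    return PROP_SOUNDS.get(prop_touched)


print(gated_sound("rock", (0.0, 1.5)))
print(gated_sound("rock", (2.0, 1.5)))
```

Separating the zone check from the prop lookup means the same gate can be reused for the heartbeat trigger if it ends up being limited to the center as well.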

Overall I feel good about this cycle. I was able to overcome some of the technical challenges of body tracking and depth tracking as well as timing on all of the sound files. I was able to prove the concept of triggering events based on the user’s interaction with the space which was my initial goal.

The next steps from here are to incorporate the projections and possibly a biofeedback system for the heartbeat. I also need to think about how I am going to guide the experience. I think I will have some instructions on the door that help users understand what the space is for, how to engage with it, and what choices they may make throughout. I also am not really sure how to end it. Technically I have the timers set up so that if someone finished the guided meditation, got up and played with the center space, and then wanted to do the guided meditation again, they totally could. So maybe that is up to the user as well?

Here is a link to me interacting with the space so you can see each of the locations and the possible events that happen as well as some of my thoughts and descriptions throughout the room (the sound is also a lot easier to hear): https://osu.box.com/s/iq2idk432jfn2yzbzre91i2gp3y4bu9d

Pressure Project 3

Posted: October 29, 2020 Filed under: Uncategorized

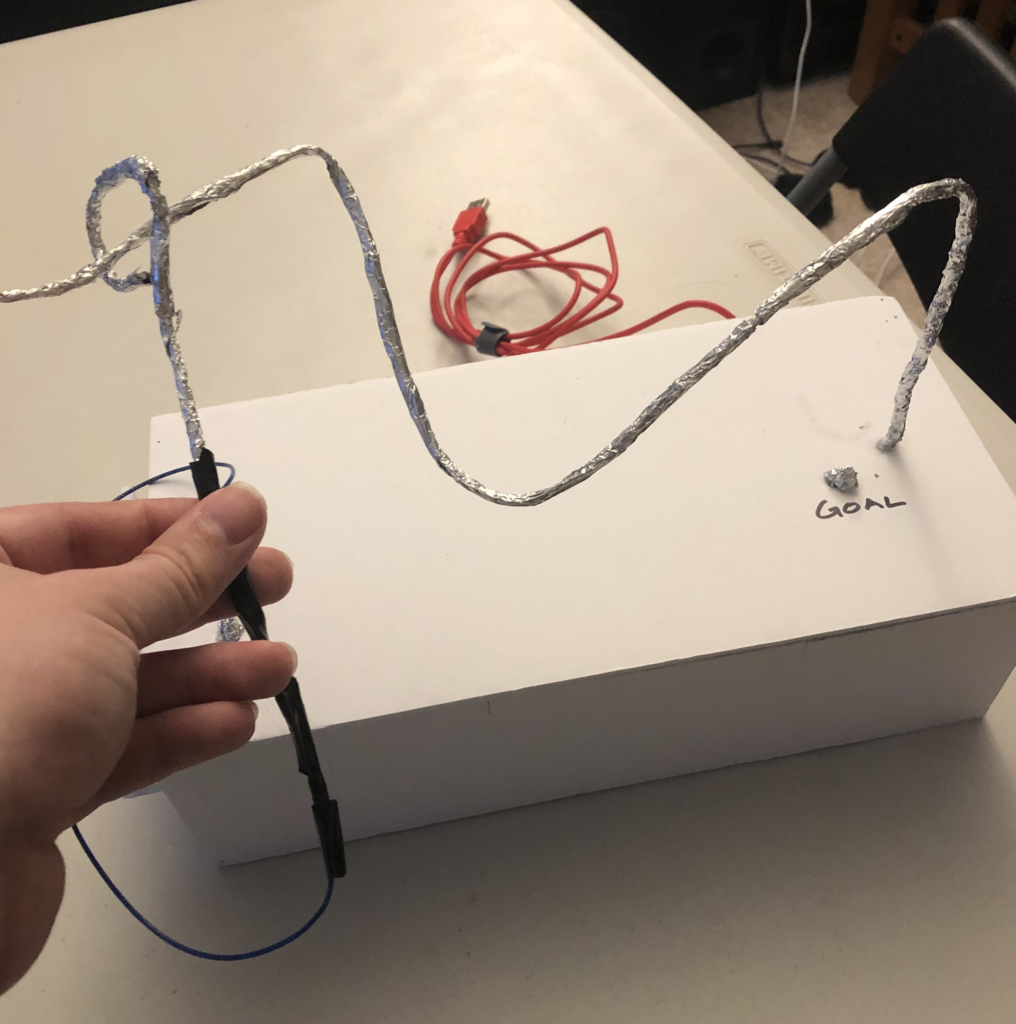

For this pressure project, I wanted to create a game where the player would attempt to move a conductive ring through an obstacle without contacting the obstacle (similar to the board game Operation). The object is to move the wand through the obstacle and touch the piece of foil labeled goal, which triggers a victory scene. However, if the wand touches any part of the obstacle, it triggers a failure screen, and the player needs to remove the wand from the obstacle and touch the reset button to go back into the game.
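The scene flow described above behaves like a small state machine. Here is a minimal Python sketch of it; the state and event names are made up for illustration, and letting reset also leave the victory scene is an assumption, since the post only describes reset after a failure.

```python
# (current state, event) -> next state. Any pair not listed leaves the state unchanged.
TRANSITIONS = {
    ("playing", "obstacle"): "failed",
    ("playing", "goal"): "won",
    ("failed", "reset"): "playing",
    ("won", "reset"): "playing",  # assumption: reset also restarts after a win
}


def step(state, event):
    """Apply one contact event and return the new scene."""
    return TRANSITIONS.get((state, event), state)


def run(events, state="playing"):
    """Feed a sequence of contact events through the game."""
    for event in events:
        state = step(state, event)
    return state


print(run(["obstacle", "goal"]))           # touching the goal after failing does nothing
print(run(["obstacle", "reset", "goal"]))  # reset first, then reach the goal
```

Because unlisted pairs are ignored, a player who has already failed cannot win until the reset button is touched.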

One thing I realized while designing the game was that it would be incredibly easy to cheat, for example moving the wand directly to the goal without going over the obstacle at all after starting the game. I decided to keep this feature in the game without resolving it because it made it very easy to test the game.

When I was building my box, I bent a metal coat hanger to create the wand and the obstacle. I assumed that the metal coat hanger would be conductive; however, the paint on it prevented that from happening. I could have sanded down the hanger, but instead I glued and wrapped foil around it. This created a few places where the wand was not conductive.

Another challenge I ran into was the physical construction of the box. I did not anticipate the need for room for the alligator clips to connect to the Makey Makey device. I ended up having to make a much taller box so the clips could stand up without strain or being bent at an angle inside of the box.

If I had more time to work on this project, I would have liked to have added another button to bring up a scene that would allow the player to play a “challenge mode.” This mode would force the player to complete the course within a certain amount of time. I also thought it would be interesting to force the player to engage with the Leap Motion controller with their other hand while trying to complete the game.

Download game files:

https://1drv.ms/u/s!Ai2N4YhYaKTvgbM4H-iN0GAvYMls6Q?e=nLweiY

Pressure Project #3

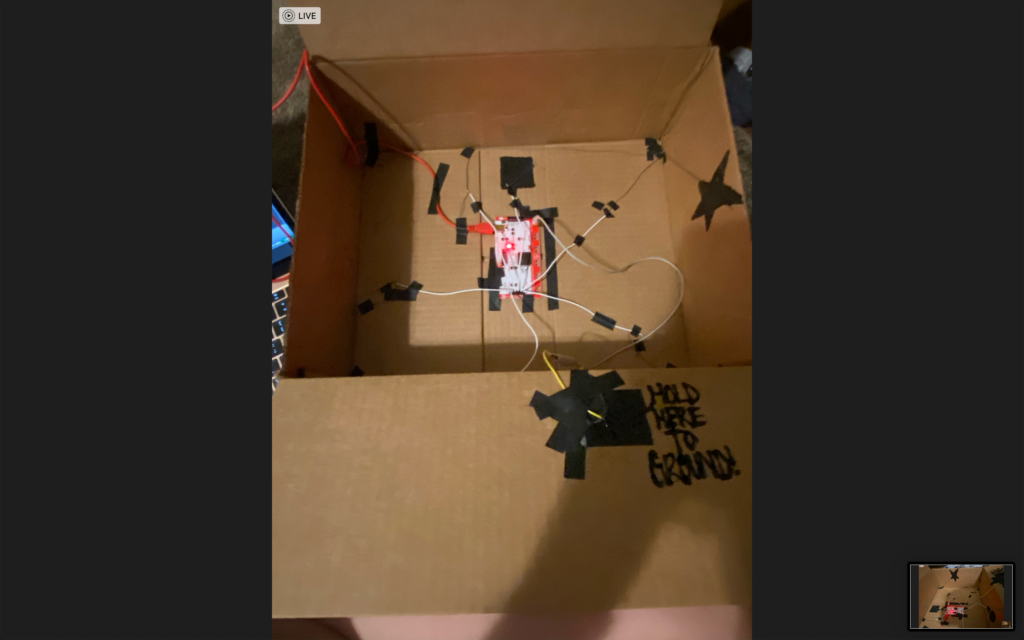

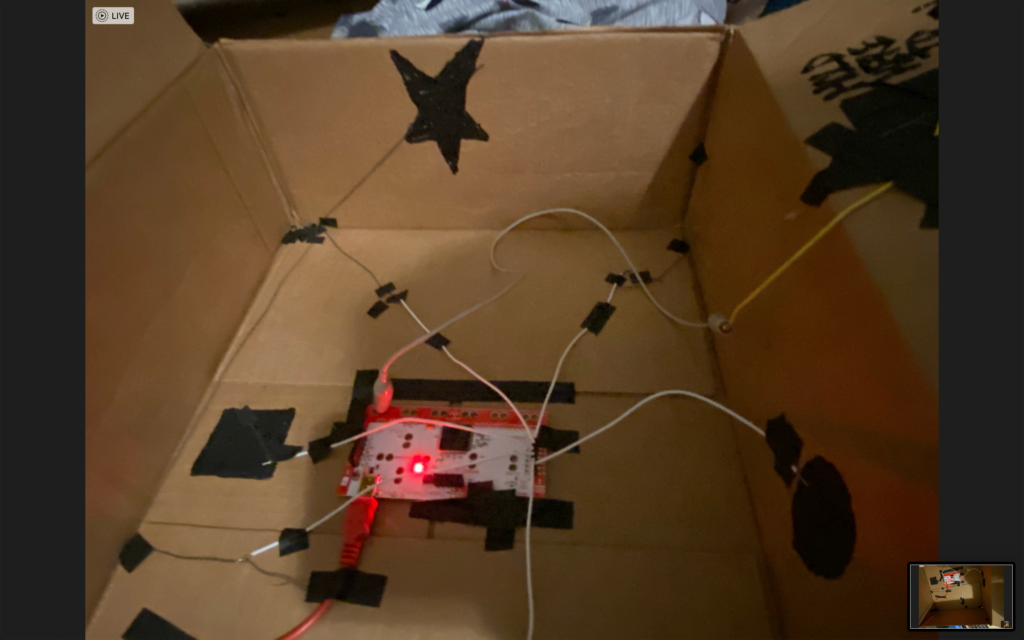

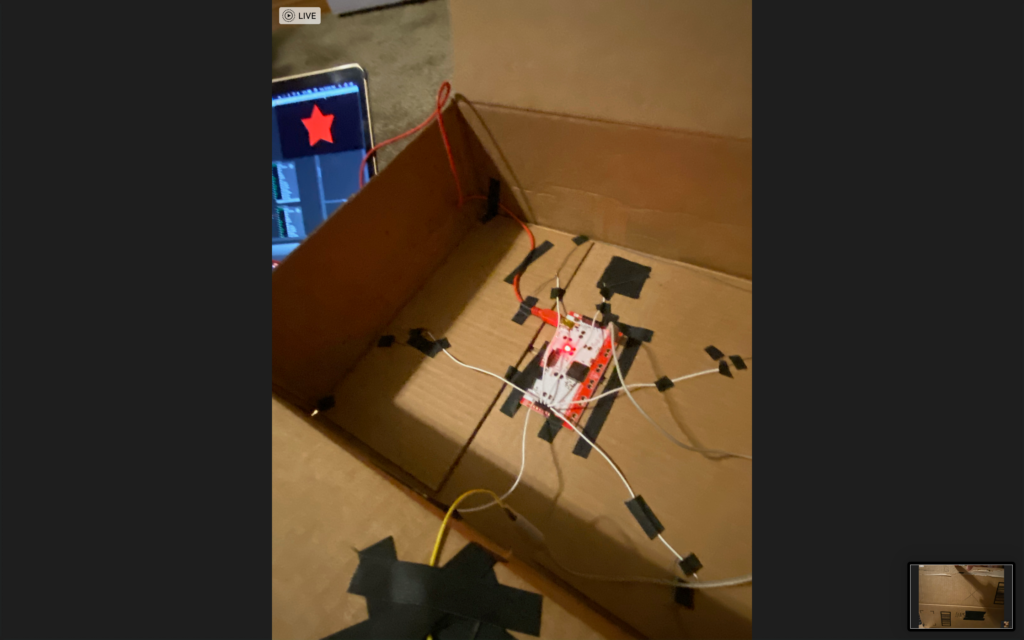

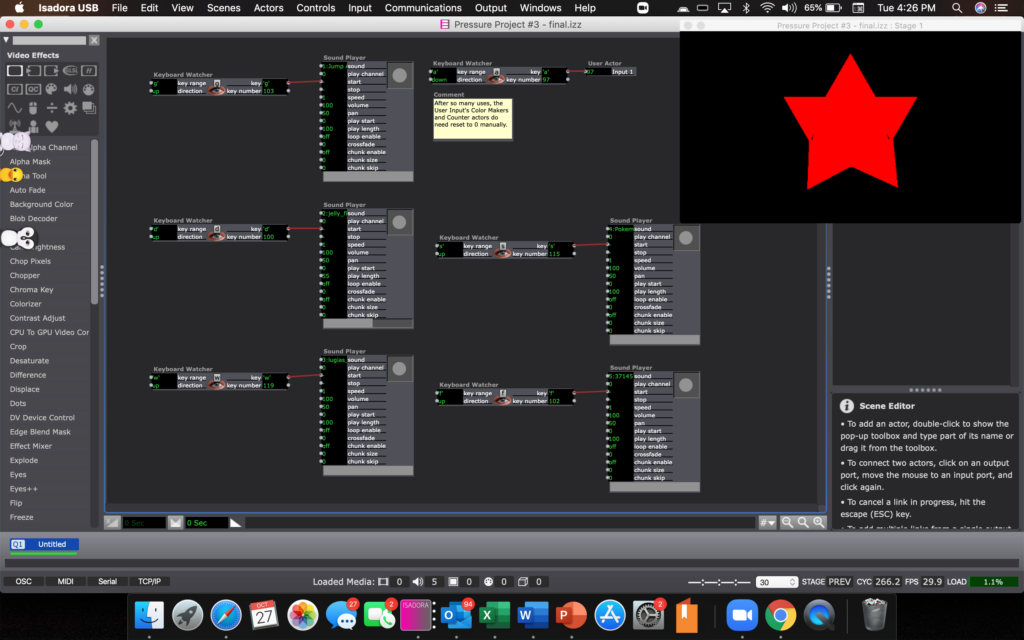

Posted: October 27, 2020 Filed under: Uncategorized

For this project, I wanted to create a mystery box that plays a different sound depending on the spot touched.

There are designated spots throughout the box to trigger different sounds, but because I used conductive thread, there are many spots that can be touched for the same sound, sometimes without the user realizing it. There is also some randomness to the box at first: since the spots are not labelled, it takes a moment to memorize what triggers what sound.

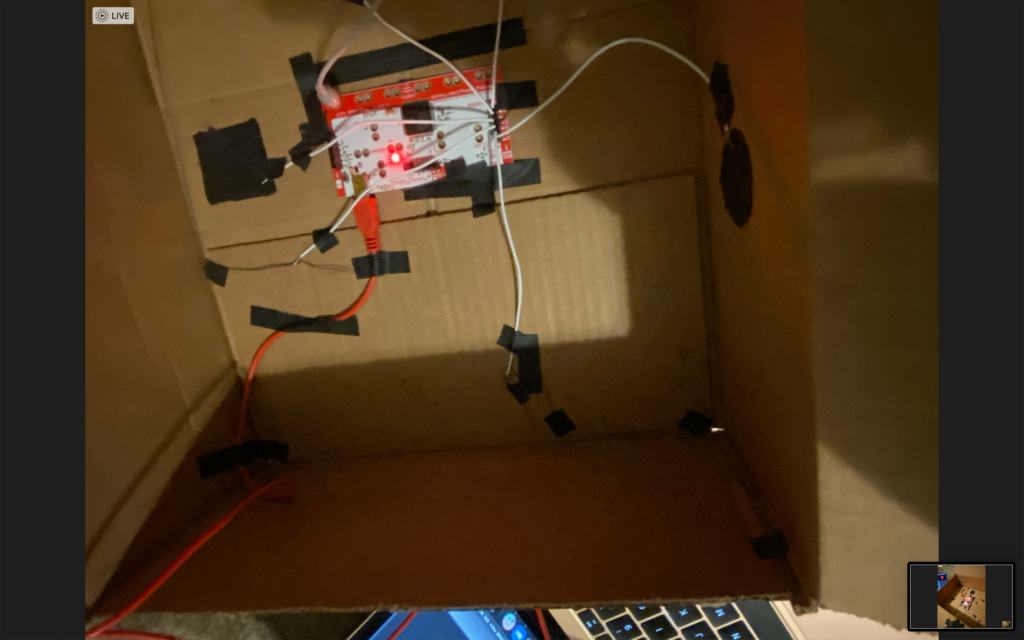

This spot triggers a colored star.

This photo shows the top of the box, including the spot where it grounds, and another cue.

I picked out a range of sounds: some are random effects, and some are songs that may be recognized. My personal favorite is the “Jellyfish Jam” from Spongebob as one of the cues (I knew I wanted to use this going in).

This could easily be adapted to a different device, as long as the device has six or fewer touchable spots that can be triggered.

I also left a few notes of instruction, just saying to ground the box and then touch any spot with thread or colored black, and that the star does need to be manually reset after so many touches.

Everything except for the star is an up key, so that it does not trigger until the spot touched is released. Below is the Zip file for the patch.
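Triggering on key release amounts to detecting a pressed-to-released edge. Here is a small Python sketch of that idea, assuming the touch state is sampled as a list of booleans; the `release_events` helper is hypothetical and not part of Isadora.

```python
def release_events(samples):
    """Return the sample indices where a spot goes from touched to released."""
    events = []
    previous = False  # assume the spot starts untouched
    for index, pressed in enumerate(samples):
        if previous and not pressed:
            events.append(index)  # falling edge: this is where the sound would fire
        previous = pressed
    return events


# Touch held for two samples, released, then a quick second tap.
print(release_events([False, True, True, False, True, False]))
```

Holding a spot down produces no events at all until the finger lifts, so a long touch still plays the sound only once.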

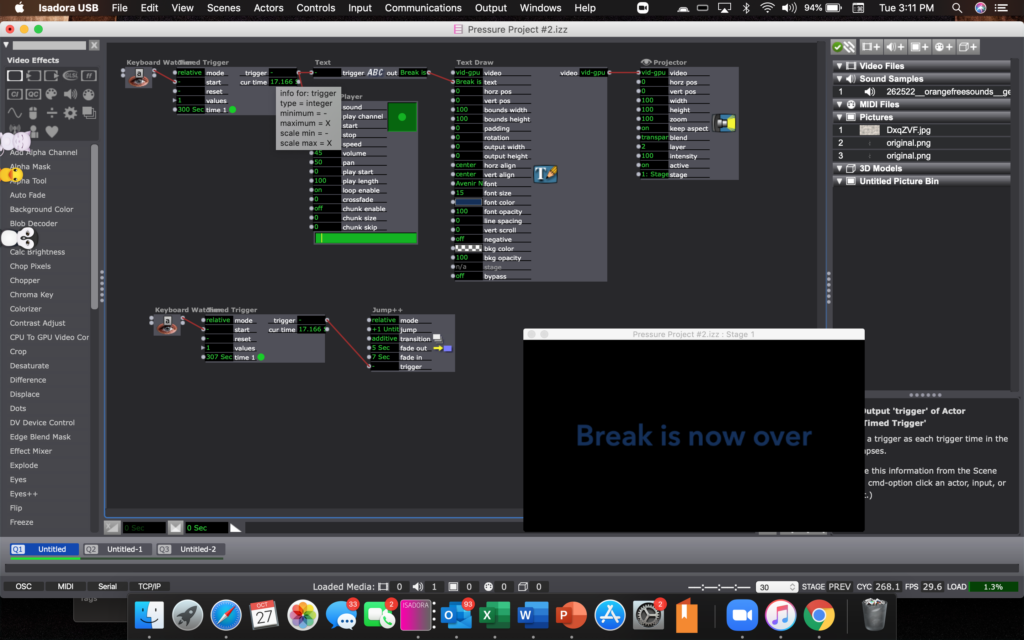

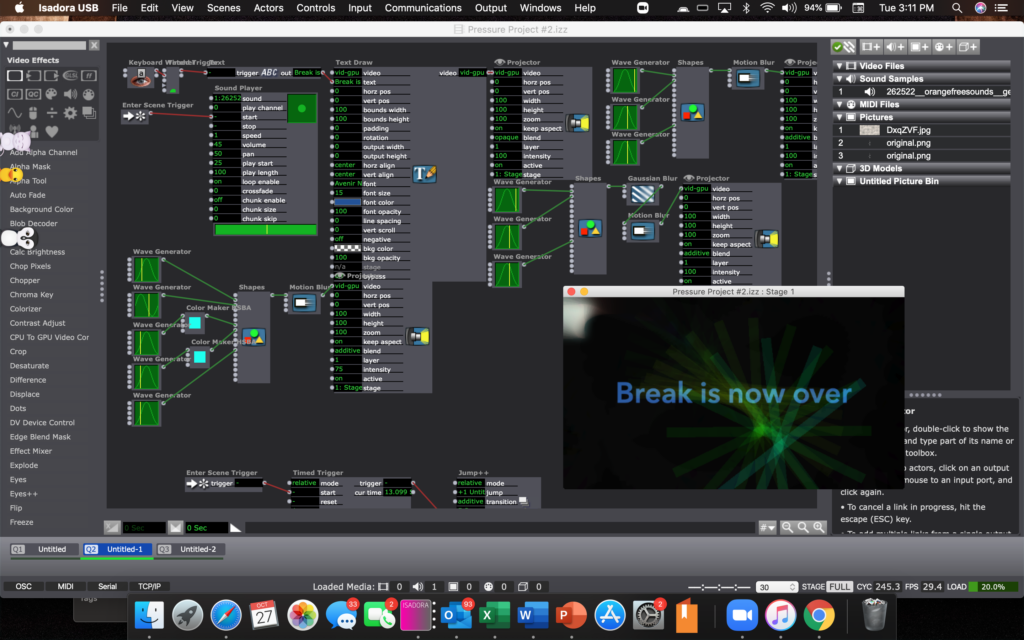

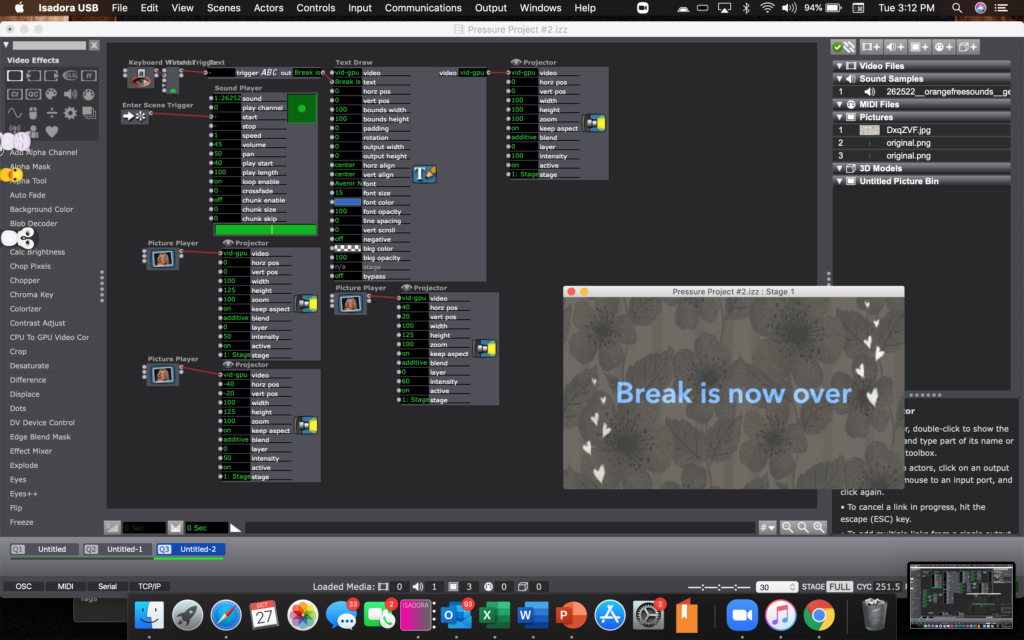

Pressure Project #2

Posted: October 27, 2020 Filed under: Uncategorized

In this pressure project, I wanted to make a system that I could use during rehearsals to count time during a 5 minute break, as I can usually lose track of a few minutes.

Using a keyboard watcher, I set the patch up to count 300 seconds as soon as a button is hit. The button is grounded through my script binder, which I always have with me: I attached some alligator clips and a spot of aluminum foil between the binder and the MakeyMakey, which lets me ground and hit go at the same time.
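For comparison, the same countdown can be sketched in plain Python. This is only an illustration of the timing arithmetic, not the Isadora patch; the function names are hypothetical, and `start` and `now` are assumed to be timestamps in seconds.

```python
import math

BREAK_SECONDS = 300  # five-minute break, as in the post


def remaining(start, now):
    """Seconds left in the break, never below zero."""
    return max(0.0, BREAK_SECONDS - (now - start))


def format_mmss(seconds):
    """Render a number of seconds as MM:SS, rounding partial seconds up."""
    minutes, secs = divmod(math.ceil(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


# 75 seconds after the button was hit.
print(format_mmss(remaining(start=0.0, now=75.0)))
```

In a live version, `start` would be captured when the key press arrives and `now` would come from something like `time.monotonic()` on each update.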

Above is the zip file to the Isadora patch, and the files used.

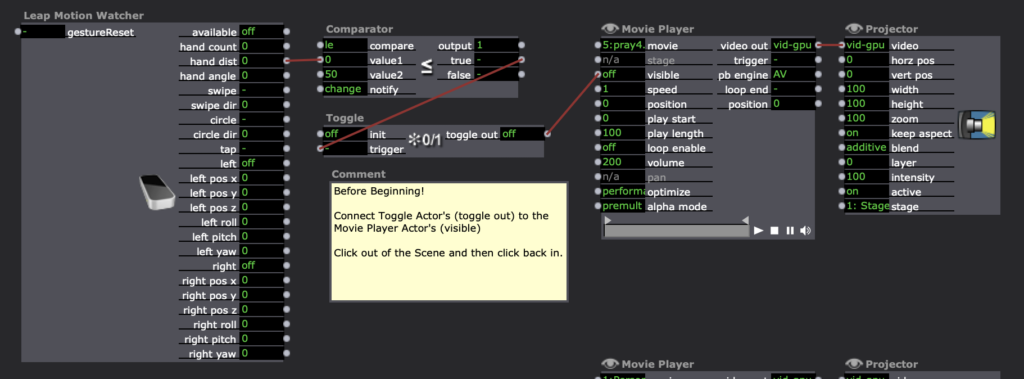

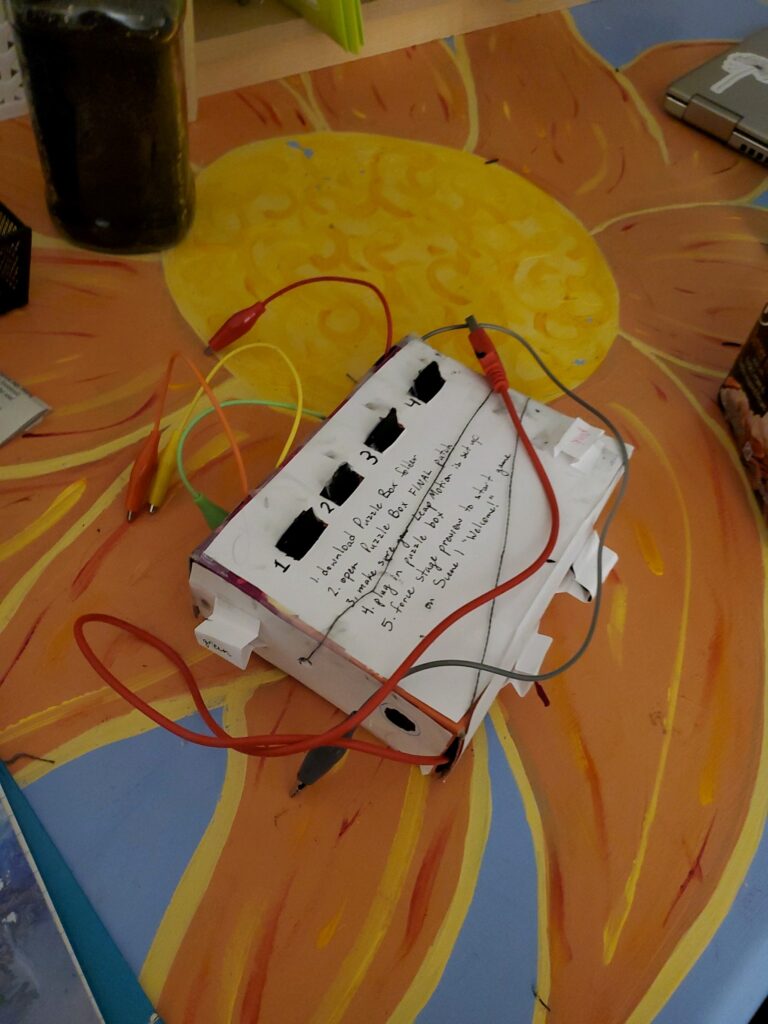

Pressure Project 3

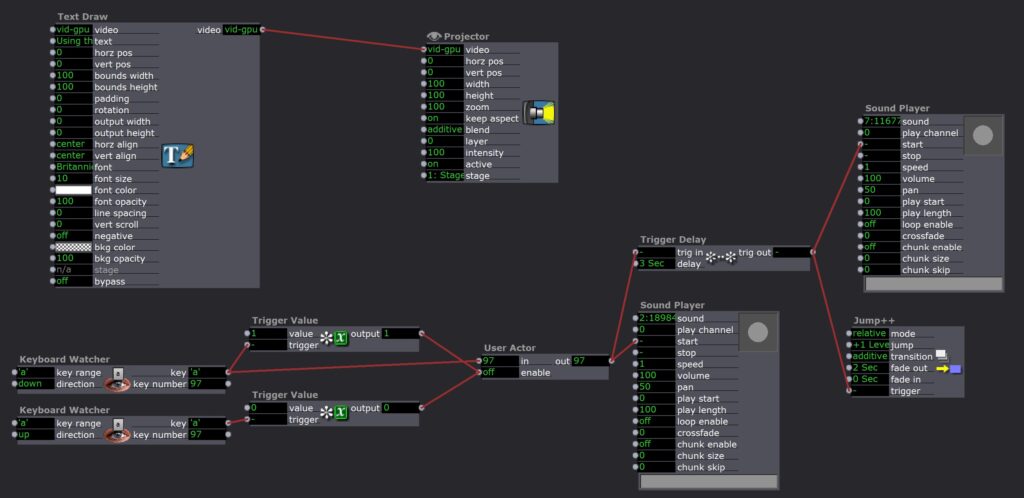

Posted: October 18, 2020 Filed under: Uncategorized

I really focused on the word “puzzle” within this prompt, so I created a box that required physical connections in response to prompts by text on the Isadora screen. The box itself was a challenge to make because of how small it was (I used a Kroger mac and cheese box), so it honestly took me hours to poke the holes in the sides of the box and thread all of the wires through them without messing something up and having to redo it. I wanted to make the workings of the box unclear so that the person would have to solve the riddle/puzzle/task in order to complete the level, rather than being able to see where the connections lead.

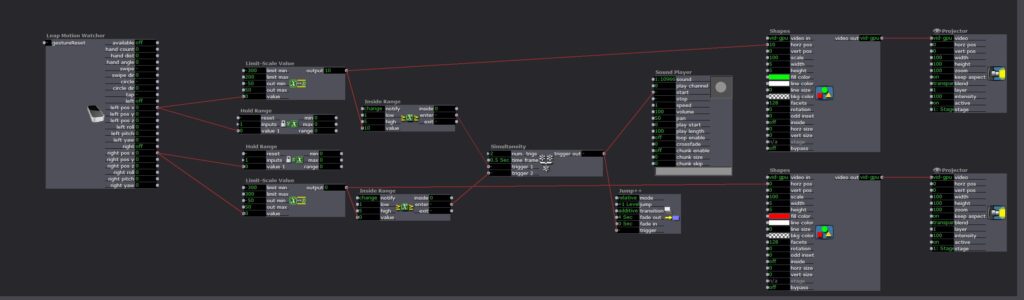

The prompts for each level appeared on the stage of the Isadora patch while everything else supposedly remained hidden. Each level consisted of a task that had to be completed on the box and then a series of hand movements over the LEAP. Over time the difficulty of the puzzles increased and the number of gestures that you had to do also increased, so in a way each level was cumulative.

While the first part of each level was all done on the box, the second part, involving the LEAP, used the Isadora stage to help guide the hand movements of the user. There were circles to represent the hands and color coordinated boxes that I hoped would help guide the user to do the required action. Something that I had assumed here was that people would hold their hands flat over the LEAP and move slowly, so that is how I tested everything. When I handed it over to Tara, that isn’t what happened, so sometimes it would register her movement before she was even able to see what was on the screen, which made the levels unclear.

One of the challenges that I had when I was working on my patch was that I was trying to make a puzzle where people had to put a series of tones in ascending order (like a piano). This puzzle used alligator clips and tags on the box so I had the issue of constant triggers being sent. However, I couldn’t just use multiblocker because I assumed people would try to test the different alligator clips so I also needed the system to be able to reset once someone had tested the sound. I also needed the system to be able to recognize that the tones had been played in a specific order, from left to right on the tabs on the box.

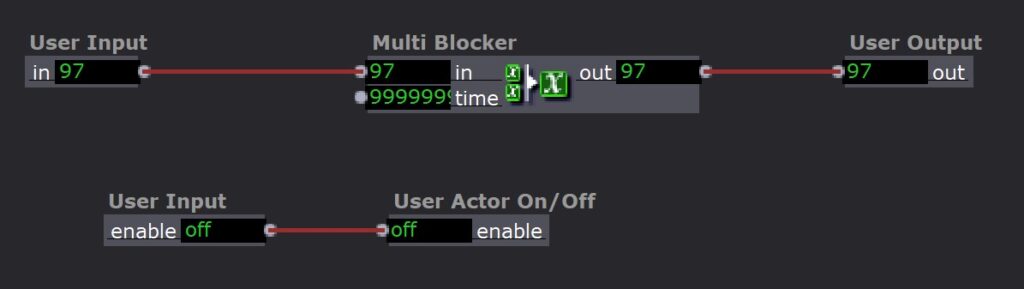

The first challenge was solved with a user actor that contained a multiblocker but also allowed me to reset the time on it whenever the alligator clip was removed from the tab.
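The behavior of that user actor can be approximated in Python as a blocker with a reset. This is a sketch of the idea only: the class and method names are hypothetical, the hold time is an arbitrary example value, and it does not claim to match the Multiblocker actor's exact behavior.

```python
class ResettableBlocker:
    """Passes one trigger, then blocks repeats until the hold time ends or the clip is removed."""

    def __init__(self, hold):
        self.hold = hold  # seconds to block after a trigger passes
        self.open_at = float("-inf")  # earliest time the next trigger may pass

    def touch(self, now):
        """Called while the clip is on the tab; True only when the tone should play."""
        if now < self.open_at:
            return False  # still blocked: ignore the constant stream of triggers
        self.open_at = now + self.hold
        return True

    def release(self):
        """Called when the clip is removed; re-arms the blocker immediately."""
        self.open_at = float("-inf")


blocker = ResettableBlocker(hold=10.0)
print(blocker.touch(0.0), blocker.touch(1.0))  # first passes, repeat is blocked
blocker.release()                              # clip taken off the tab
print(blocker.touch(2.0))                      # a new test plays the tone again
```

The reset is what lets someone try the same clip twice in quick succession without waiting out the full hold time.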

The second challenge was solved using a different scene for every tone. So once the user found the correct tone, it would jump to the next scene and they would get a prompt telling them that they got it right and could now move on to figuring out what sound went with the second tab on the box.
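Using one scene per tone is equivalent to keeping a counter that only advances on the correct tone. Here is a minimal Python sketch, with made-up tone names and a hypothetical `play` helper standing in for the scene jumps.

```python
def play(attempts, correct_order):
    """Return how many tabs were solved; each correct tone jumps to the next 'scene'."""
    scene = 0
    for tone in attempts:
        if scene == len(correct_order):
            break  # every tab has already been matched
        if tone == correct_order[scene]:
            scene += 1  # correct tone: move on to the next tab
        # a wrong tone leaves the user in the same scene to try again
    return scene


# Hypothetical ascending tones for three tabs, left to right.
ORDER = ["C", "D", "E"]
print(play(["E", "C", "C", "D", "E"], ORDER))
```

A return value equal to `len(ORDER)` means the whole sequence was found in order, which is the point where the final success prompt would appear.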

For the most part I was really proud of my puzzle box. There were some issues with the LEAP tracking, so I would need to either be more specific in my instructions or make the system more able to adjust to different users. The last puzzle with the tones was also one where the instructions could have been clearer. Tara said that it wasn’t clear to her if she had got it right or not, so I could have found a clearer way to indicate when each step had been successful. I learned a lot about how to use scenes and user actors when overcoming my struggles with the last puzzle. I also realized that I need to write out a list of the assumptions I am making about the user so that I can either instruct the user more clearly to match those assumptions or adjust to fit behavior outside of those assumptions.

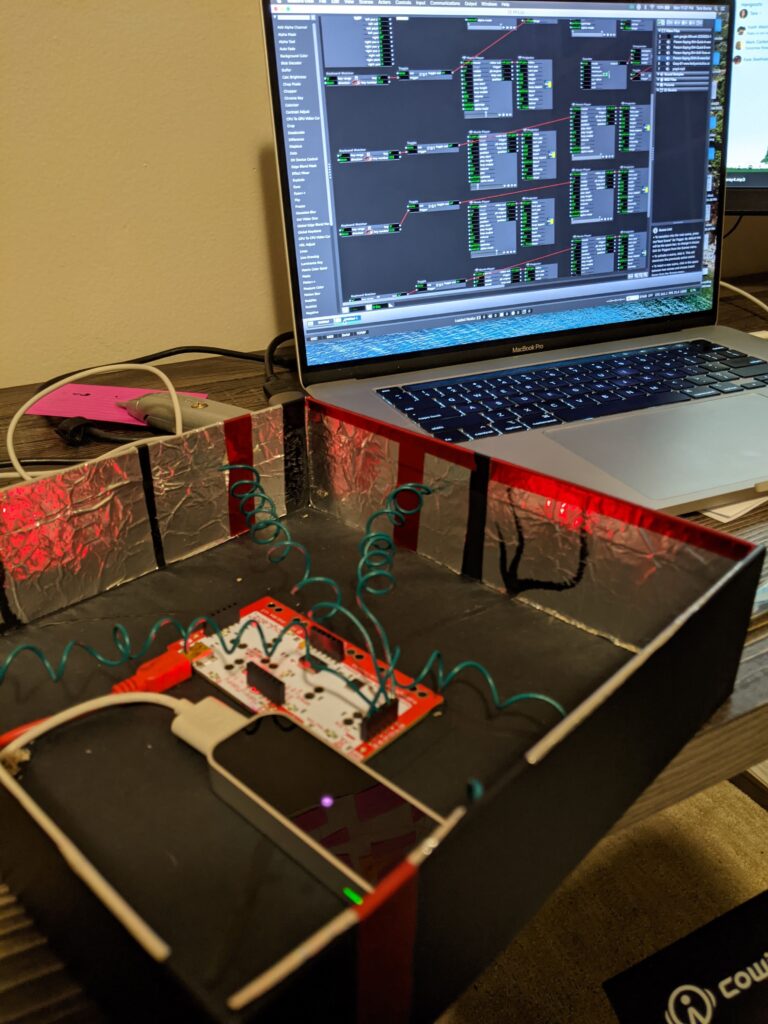

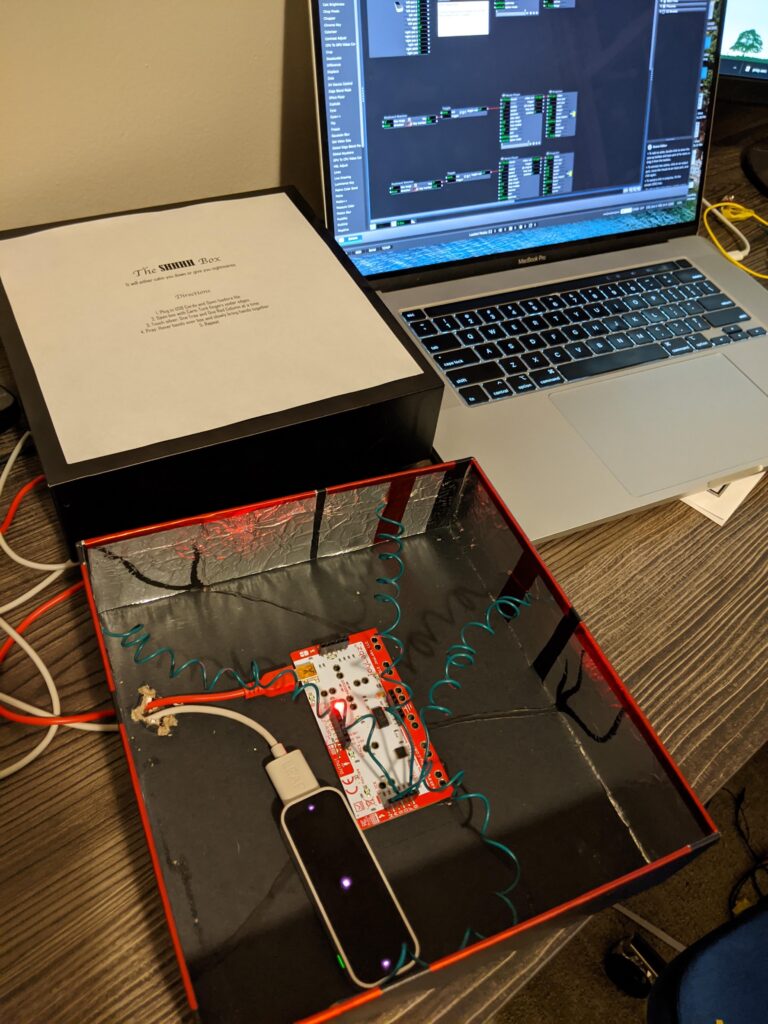

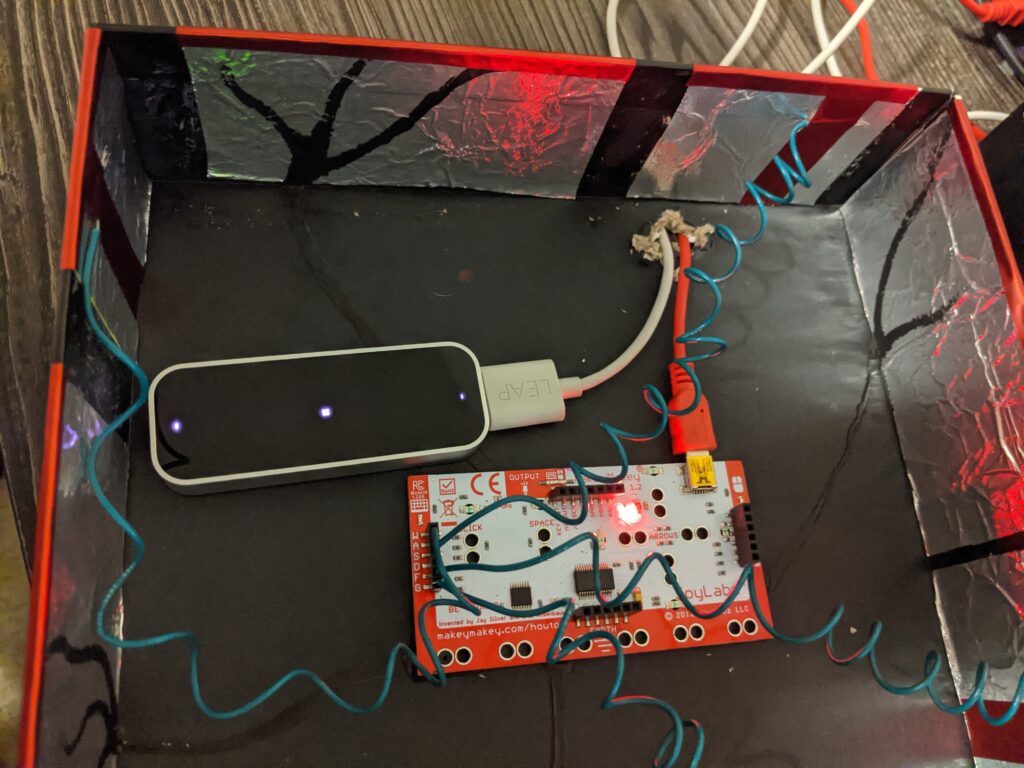

Tara Burns – PP3

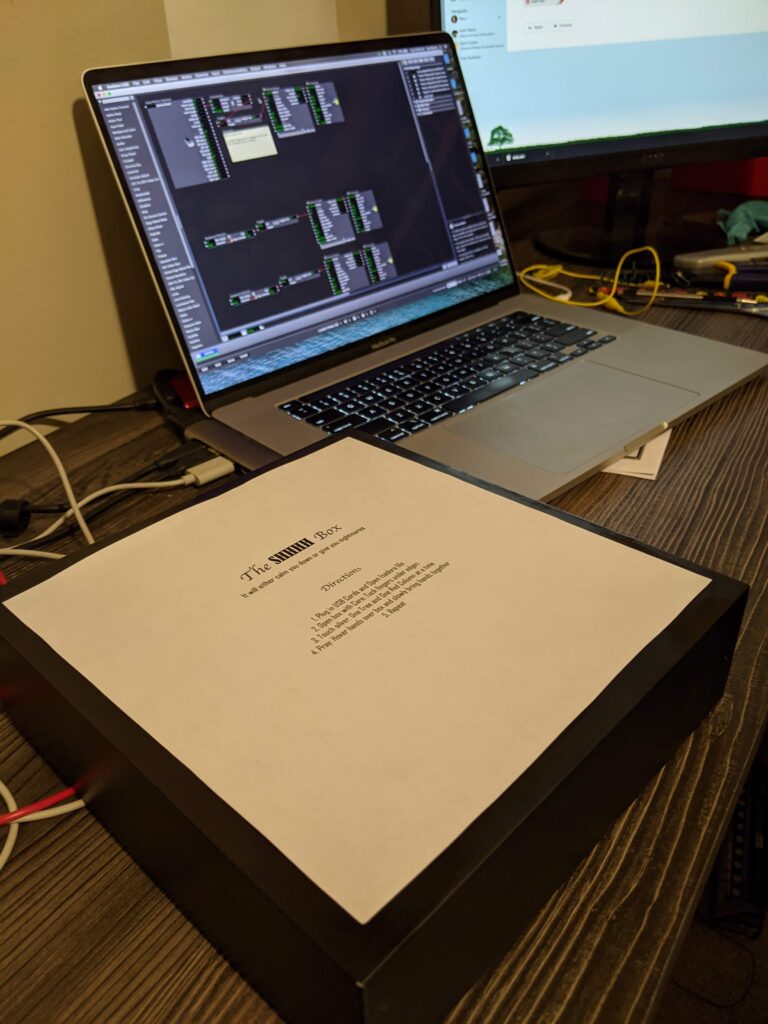

Posted: October 16, 2020 Filed under: Uncategorized

Goal

To expand my idea of the installation/performance sound box into a very small version while creating a surprise for my user

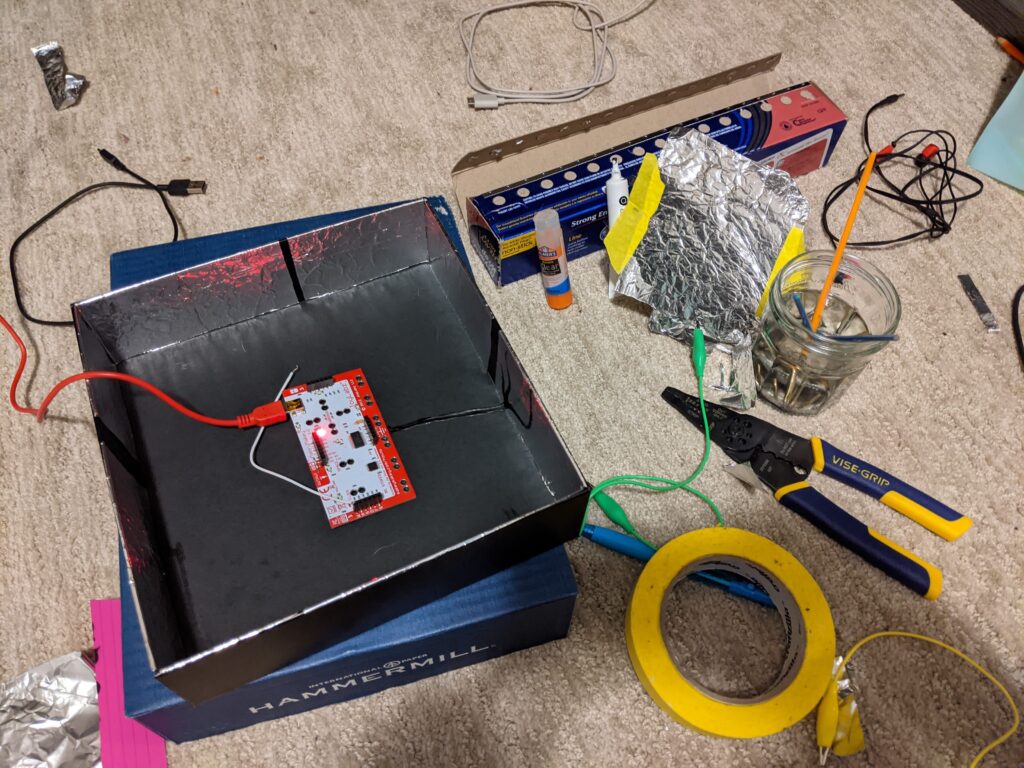

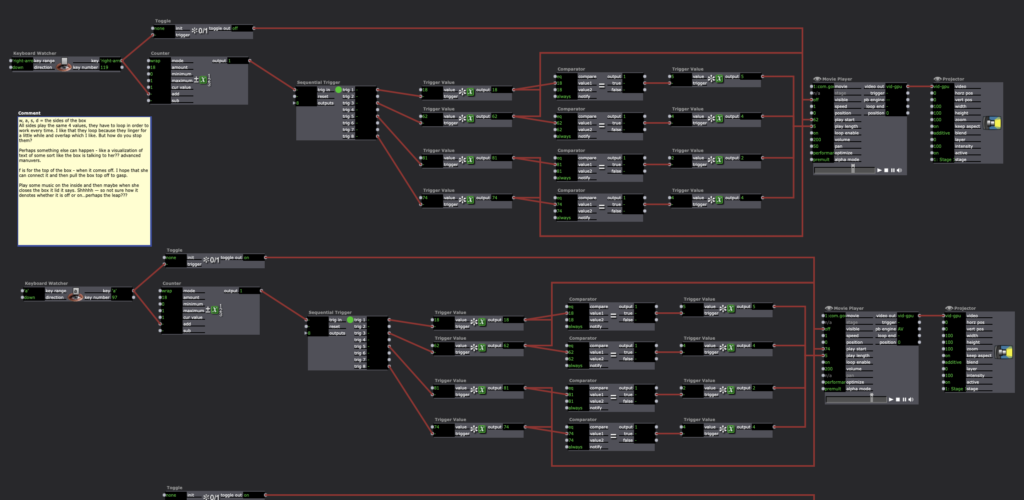

I feel I did get an opportunity to research the installation more in my first patch, but I aborted that mission in order to create a cohesive experience, switching the sounds to “shhhs,” labeling the box “The SHHHH Box,” and adding the prayer.

Challenges

I had a problem with one of my touch points continually completing the circuit. I remedied this by putting a piece of paper under the aluminum. I decided that either glue is conductive (I don’t think so), I accidentally connected the circuit with messy electric finger paint, or the box is recycled and might have bits of metal in that one part.

On my computer when I opened the patch, it would blurt out the final prayer. So, I added an instruction to Kara to connect the toggle to the movie player so the sound wouldn’t play and ruin the creepy surprise. Update: Alex showed us a remedy for this: 1) Create a snapshot of exactly how it “should” be (even though it keeps reverting to something other), then Enter Scene Trigger –> Recall Snapshot.

I also wish I had connected all the sounds properly. In my haste, I put them in a new folder but forgot to connect them, so Kara had to do that, which made things more difficult for her in the beginning. My assumption is that if the paint reaches the aluminum, the conductive aluminum should be enough to trigger it. But when Kara tested it, she seemed to have trouble triggering it.

It will calm you down or give you nightmares.

1. Plug in USB Cords and Open Isadora file