Pressure Project #1: Pitch, Please.

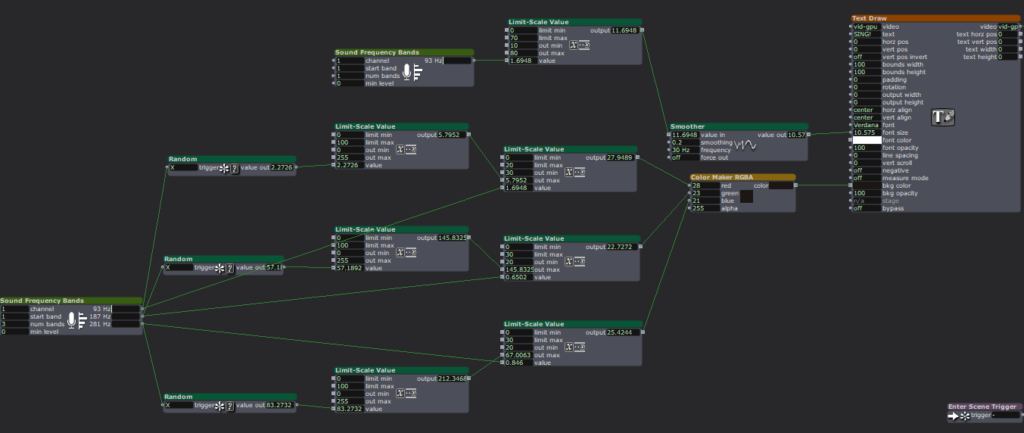

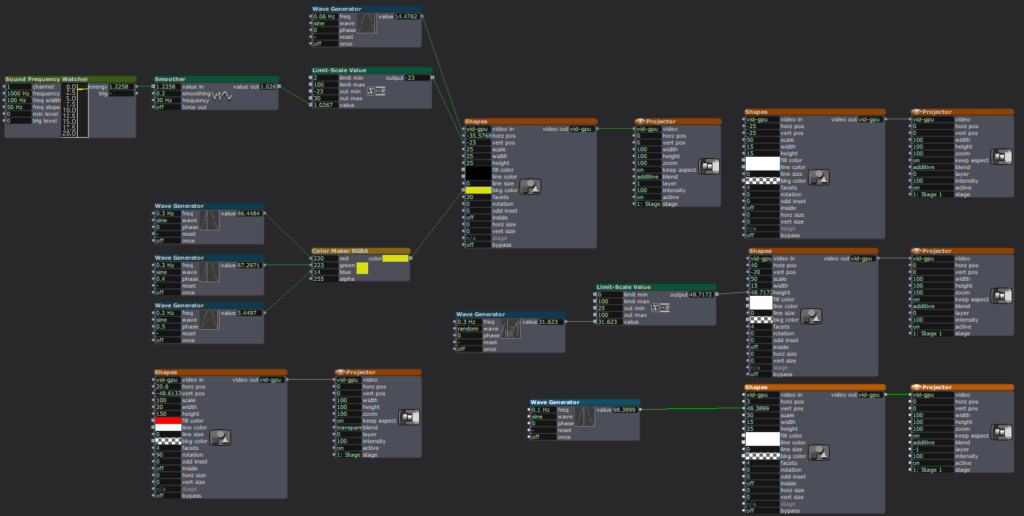

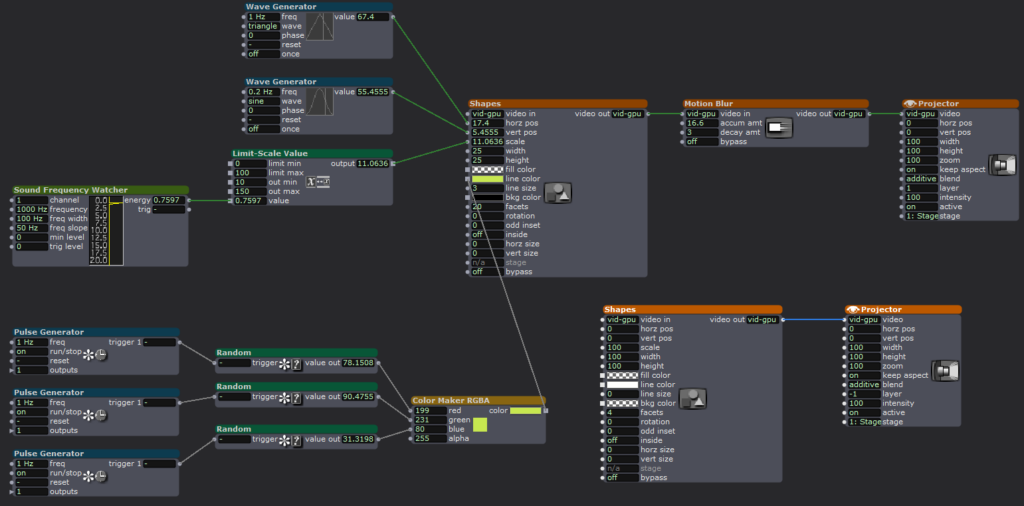

Posted: February 10, 2026 Filed under: Pressure Project I | Tags: Interactive Media, Isadora, Pressure Project, Pressure Project One

Description: Pitch, Please is a voice-activated, self-generating patch where your voice runs the entire experience. The patch unfolds across three interactive sequences, each translating the frequency from audio input into something you can see and play with. No keyboard, no mouse, just whatever sounds you’re willing to make in public.

Reflection

I did not exactly know what I wanted for this project, but I knew I wanted something light, colorful, interactive, and fun. While I believe I got what I intended out of this project, I also did get some nice surprises!

The patch starts super simple. The first sequence is a screen that says SING! That’s it. And the moment someone makes a sound, the system responds. Font size grows and shrinks, and background colors shift depending on frequency. It worked as both onboarding and instruction, and made everyone realize their voice was doing something.
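To make the frequency-to-visuals idea concrete, here is a minimal Python sketch of that kind of mapping. It is not the Isadora patch itself: the function names, the 80 to 1000 Hz voice range, the font-size range, and the hue range are all assumptions chosen for illustration.

```python
import colorsys


def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))  # clamp so out-of-range pitches do not overshoot
    return out_lo + t * (out_hi - out_lo)


def voice_to_visuals(freq_hz, lo_hz=80.0, hi_hz=1000.0):
    """Return (font_size, (r, g, b)) for a detected frequency.

    All ranges here are assumed values, not taken from the original patch.
    """
    font_size = map_range(freq_hz, lo_hz, hi_hz, 40.0, 200.0)
    # Stop the hue at 0.8 so the highest notes do not wrap back around to red.
    hue = map_range(freq_hz, lo_hz, hi_hz, 0.0, 0.8)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return font_size, (round(r * 255), round(g * 255), round(b * 255))
```

A low hum lands near the small, red end of the range and a high whistle near the large end; anything outside the assumed range is clamped instead of breaking the display.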

The second sequence is a Flappy Bird-esque game where a ball has to dodge hurdles. The environment was pretty simple and bare-bones, with moving hurdles and a color-changing background. You just have to sing a note, and make the ball jump. This is where things got fun. Everyone had gotten comfortable at this point. There was a lot more experimentation, and a lot more freedom.

The final sequence is a soothing black screen, with a trail of rings moving across the screen like those old screensavers. Again, audio input controls the ring size and color. Honestly, this one was just made as an afterthought because three sequences sounded about right in my head. So, I was pretty surprised when the majority of the class enjoyed this one the best. There’s just something about the old-school screensaver aesthetic. Hard to beat.

What surprised me most was how social it became. I was alone at home when I made this and I didn’t have anyone test it, so it wasn’t really made with collaboration in mind, but it happened anyway. I thought people would interact one at a time. Instead, it turned into a group activity. There was whistling, clapping and even opera singing. (Michael sang an aria!) At one point people were even teaming up and giving instructions to each other on what to do.

When I started this project, I had a very different idea in my mind. I couldn’t figure it out though, and just wasted a couple hours. I then moved on to this idea of a voice-controlled flappy-duck game, and started thinking about executing it in the most minimal way possible (because again, time). This one took me a while, but I reused the code for the other two sequences and managed to get decent results within the timeframe. There’s something about knowing there is a time limit. It just awakens a primal instinct in me that kind of died after the era of formal timed exams in my life ended. In short, I pretty much went into hyperdrive and delivered. I’m sure I would’ve wasted a lot more time on the same project if there was no time limit. I’m glad there was.

That said, could it be more polished? Yes. Was this the best I could do in this timeframe? I don’t know, but it is what it is. If I HAD to work on it further, I’d add a buffer at the start so the stage doesn’t just start playing all of a sudden. I would also smooth out the hypersensitivity of the first sequence which makes it look very glitchy and headache-inducing. But honestly, with the resources that I had, Pitch, Please turned out decent. I mean, I got people to play, loudly, badly, collaboratively, and with zero shame, using nothing but their voice. Which was kind of the whole point.
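One common way to tame that kind of hypersensitivity is to run the raw value through an exponential moving average before it reaches the visuals. The sketch below is a generic Python illustration, not something from the patch; the class name and the `alpha` default are assumptions.

```python
class ExponentialSmoother:
    """Exponential moving average: each new sample only nudges the output.

    alpha near 0 gives heavy smoothing (slow, calm response);
    alpha of 1 passes samples through unchanged.
    """

    def __init__(self, alpha=0.2):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = None  # no output until the first sample arrives

    def update(self, sample):
        if self.value is None:
            # Start at the first sample so the output does not ramp up from zero.
            self.value = float(sample)
        else:
            # Move a fraction alpha of the way toward the new sample.
            self.value += self.alpha * (sample - self.value)
        return self.value
```

Feeding each detected frequency through `update()` before mapping it to font size would trade a little responsiveness for a much less jittery display; the right `alpha` would have to be tuned by eye.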

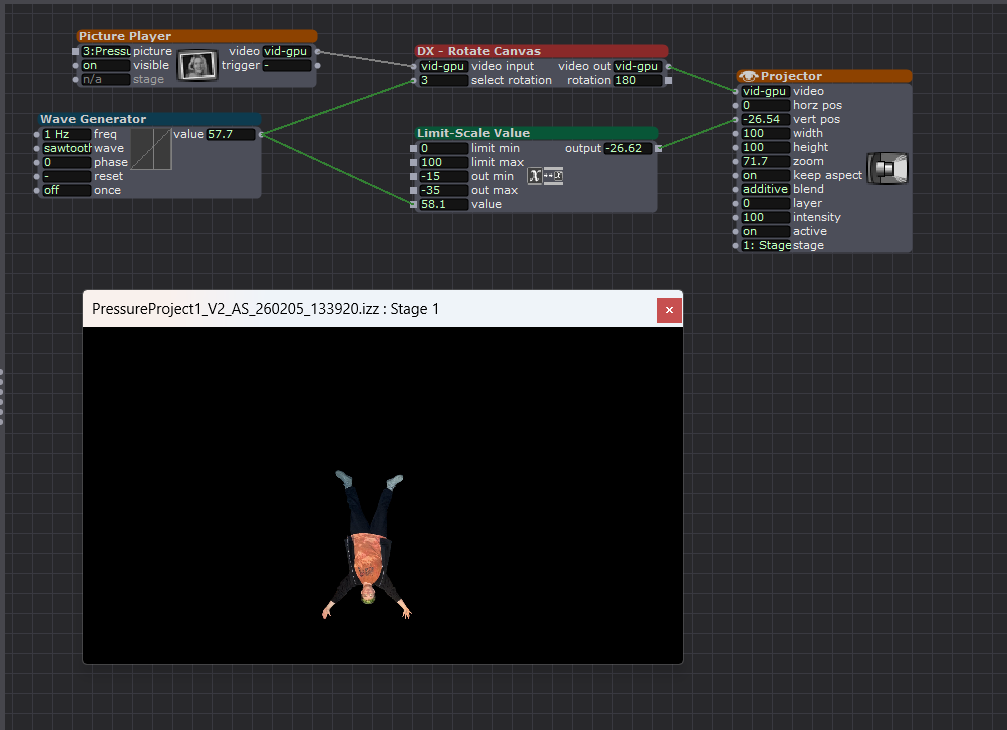

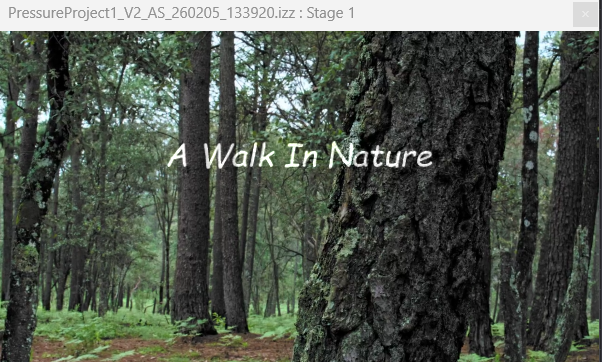

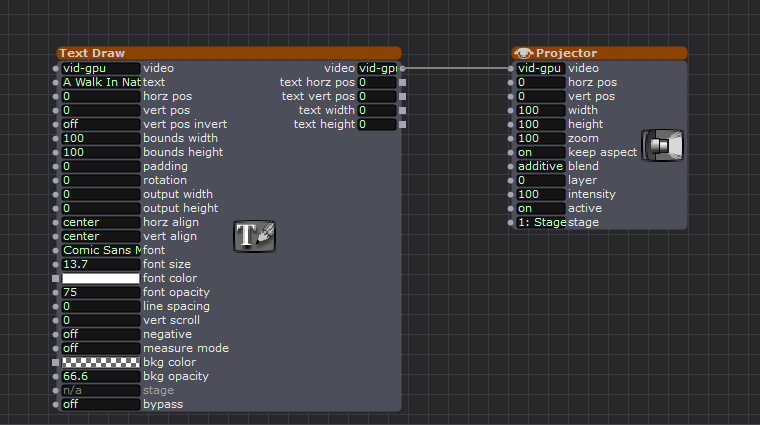

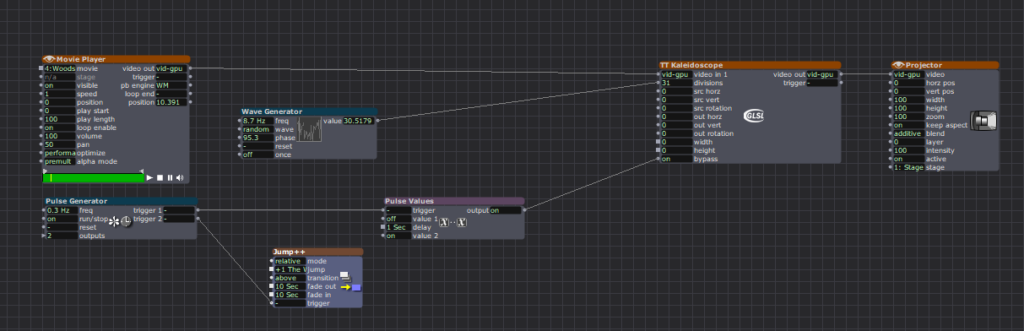

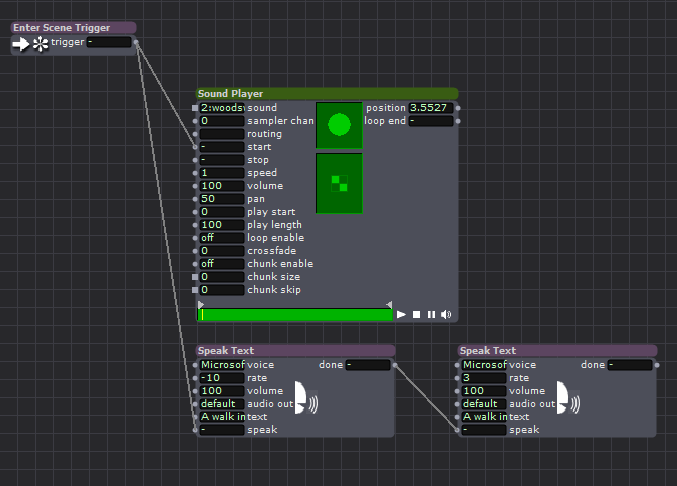

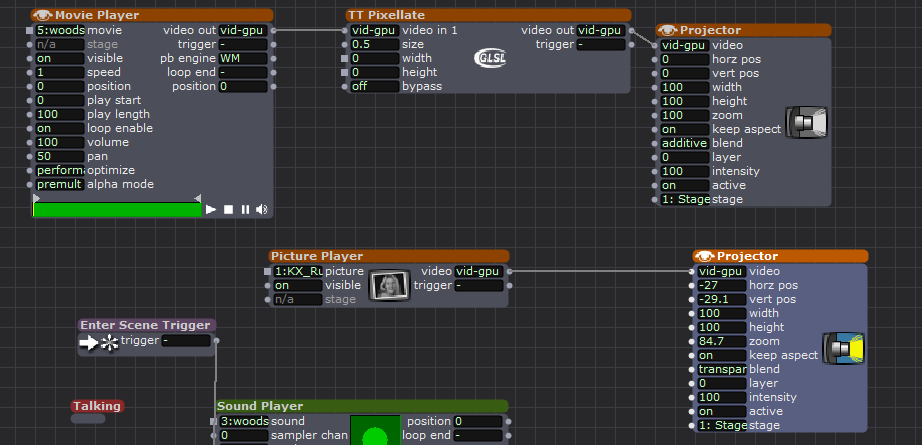

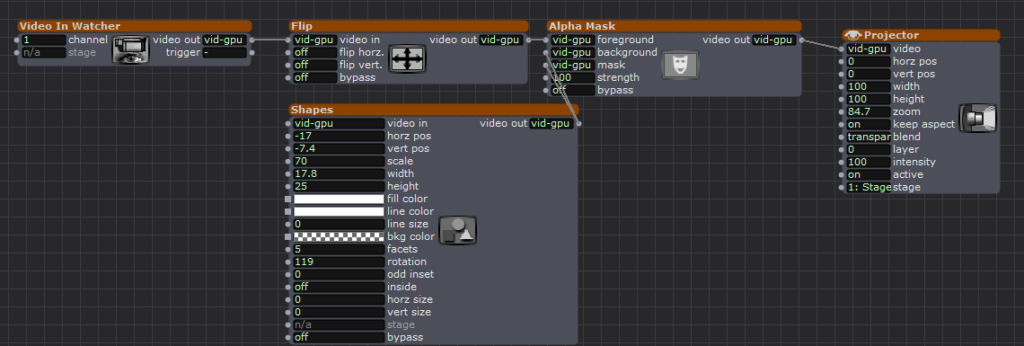

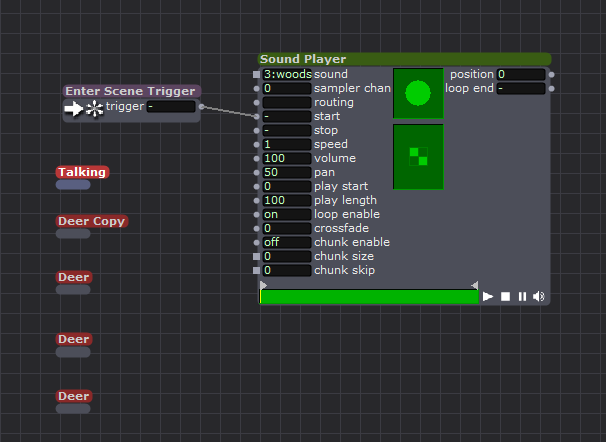

Pressure Project #1 – A Walk In Nature

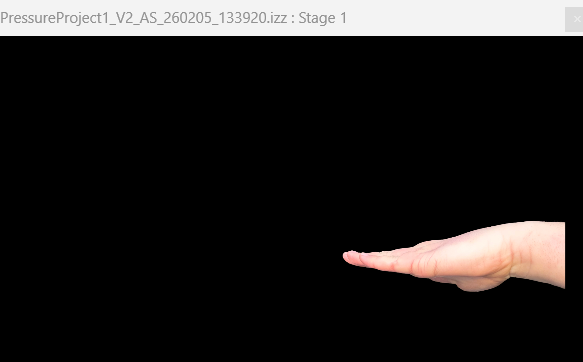

Posted: February 9, 2026 Filed under: Uncategorized

Description: “A Walk In Nature” is a self-generating experience that documents two individuals’ time together deep in the woods.

The Meat and Bones (view captions for descriptions):

Photos I took before production (I had no real clue what I was going to do)

The Reactions:

I am very thankful for Zarmeen’s presence, as I don’t know if I would’ve achieved all the bonus points without her. While I received relatively affirming verbal feedback at the end, without her talent of reacting physically, I would have felt way more awkward showing this messed-up video.

Reflections:

I was actually extremely relieved to have a time limit on the project, as I am very limited on time as a grad student with a GTA and part-time job (it’s rough out here). I loved the idea of throwing something at the wall and seeing what sticks. I chose to do the majority of the work in one sitting; figuratively locking myself in a room for five hours and leaving with a thing felt correct. I did note ideas that popped up throughout the week, but I didn’t end up doing any of them anyway.

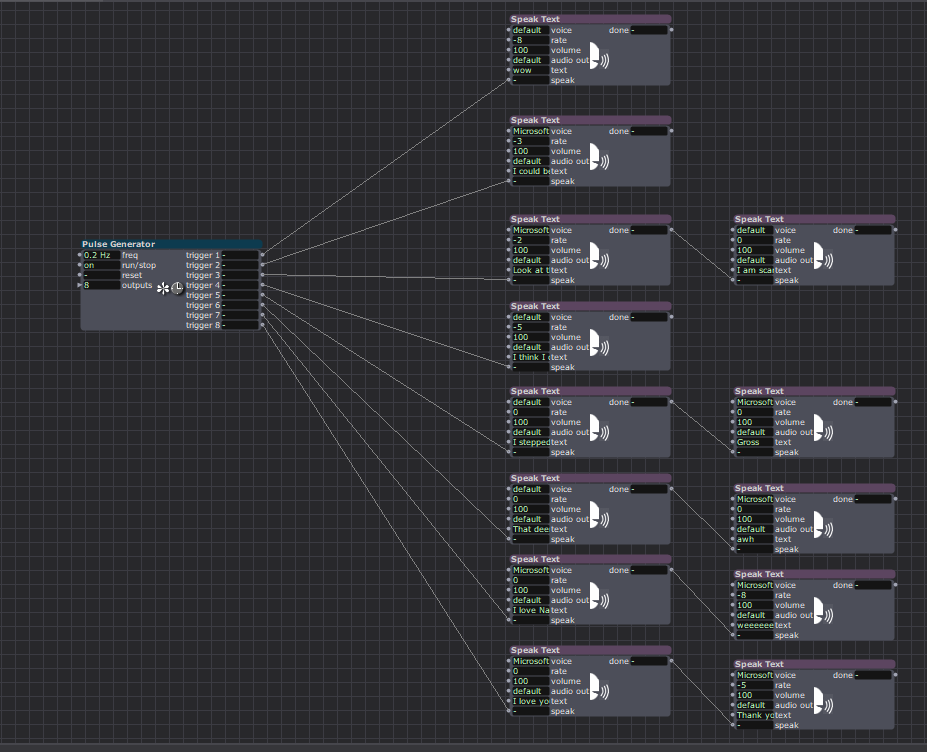

I was far too hung up on the idea of making sure people pay attention; original ideas had the machine barking orders at the viewers to “not look away”, but that felt mean. So I went with the idea of making everyone so uncomfortable that they forget to look away, like how I feel watching Fantastic Planet. Towards the last hour, I realized that aside from robots talking, I needed user interaction to make this feel whole. However, the cartwheel and petting action didn’t work out as pictured above. So what if the audience could be the deer?

The last hour was me messing with an app to use my camera as the webcam (Eduroam ruined my dreams there). So I grabbed a webcam from the computer lab the day of. (Sorry, Michael.) I knew I was going to choose one lucky viewer to hold the camera, and choosing Alex was improvised; I just thought he would be most excited to hold it. I was pleasantly surprised that there were expressions of joy while watching, as when I showed my partner, she was scared and mad at me. I am glad my stupid sense of humor worked out. 🙂

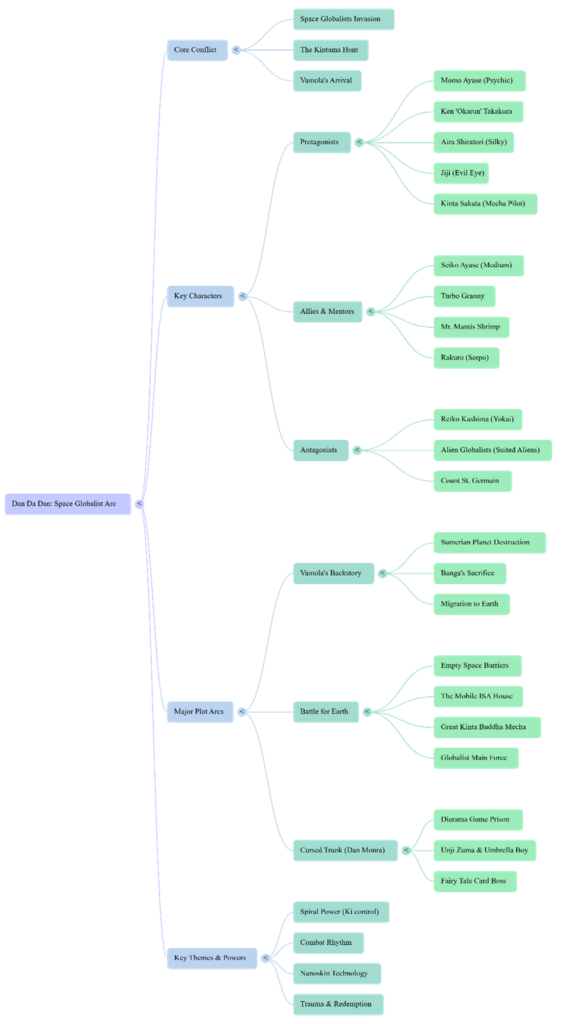

AI EXPERT AUDIT – DANDADAN

Posted: February 5, 2026 Filed under: Uncategorized

I chose the anime DanDaDan as my topic. I believe I am an expert in a lot of anime/manga-related topics because I have been reading manga and watching anime for more than a decade now. I love DanDaDan especially because it’s one of the few series lately that’s a little different in a world of oversaturated genres like the leveling-up games. DanDaDan is a breath of fresh air: super weird and fun, filled with all sorts of absurdity. So, in order to train Notebook LM about this topic, I used some YouTube videos. The videos focused on the storyline, major arcs, characters, and why it is such a hit.

1. Accuracy Check

I wasn’t so surprised that it got the gist of the story correct. I did give it sources where the YouTubers summarized the whole storyline and talked about its characters, arcs and resolutions. So, it wasn’t a bad generic overview; I would even say it was good for a summary. It’s only when you’ve been thoroughly into a certain subject area that you start understanding the nuances and tiny details of it. As for what it got wrong, I don’t think it said anything outright absurd. It’s just that it sometimes mispronounced some names. With the names being Japanese, I am not surprised that they might be mispronounced, but the AI used a range of mispronunciations for the same name.

One of the voices in the podcast was too hung up on making the story what it is not. I mean, sure, it was justified at some points, but it insisted that the real ideas behind this absurd adventure-comedy are deeper themes like teenage loneliness, and that it’s actually a romance story when it’s not. (It’s a blend of sci-fi and horror.) Sure, there are sub-themes like in all anime, but they’re not the main theme. The other voice sometimes did agree with this idea. The podcast was not focused enough on just keeping it fun and light, which is what DanDaDan really is.

2. Usefulness for Learning

If I was listening to this topic for the first time, I feel like this podcast wouldn’t be a bad starter. Like I mentioned earlier, it gave a pretty decent summary of the whole plot. I think it definitely gets you started if you need a quick explanation of a subject area. I found the mindmap to be pretty decent too. It was a decent overview of the characters and the arcs. The infographic on the other hand… so bad. The design is super cringe and again, a lot of emphasis is on the romance and how it drives the action. Which I disagree with.

3. The Aesthetic of AI

Overall, the conversation was SO very cringe, and it was very difficult to get used to it in the beginning. I used the debate mode and they were talking so intensely about a topic that’s just nowhere near as serious as the AI made it out to be. I had to just stop and remind myself it’s just a weird, fun anime they’re talking about. AI has this tendency to make everything sound intense, I guess.

4. Trust & Limitations

I would recommend AI to someone who wants a quick summary or overview of a topic. It’s what the AI is good at. What I wouldn’t recommend is to dwell on the details that the AI talks about. If anyone wants details or wants to form an opinion about a topic, they should look into it themselves.

Link to the podcast:

AI-Generated Visuals:

Sources:

https://youtu.be/8XdTF5tnMVU?list=TLGG7J2IoA7cY1QwNTAyMjAyNg

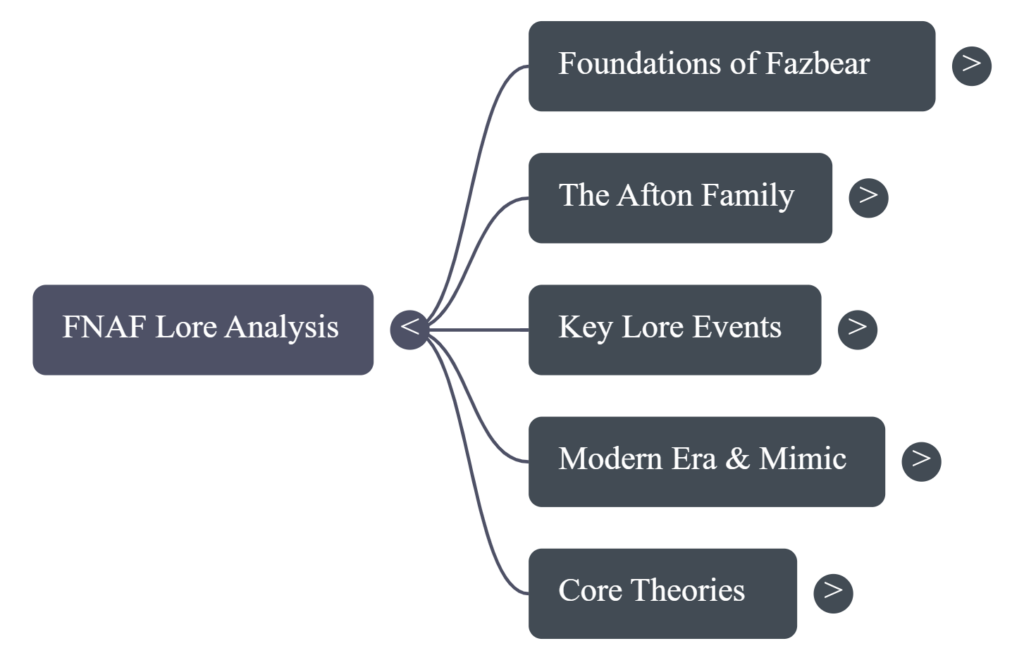

AI Expert Audit – The Elder Scrolls

Posted: February 5, 2026 Filed under: Uncategorized

Source Material

For my topic, I chose a game that already has a wealth of in-universe literature, so my primary source was a PDF of every book that exists in the series, found at LibraryofCodexes.com. I also chose to upload a small document I found giving a general timeline of the series and its history, as well as a short video covering the history of the world.

I chose this topic as over the course of the last 10 years, I’ve likely played up to (or over) 1000 hours of these games over 3 different games. Even more so, I’ve listened to countless hours of videos doing deep-dives on the world’s lore as background videos while working or driving. I think the reason I find myself so drawn to it is the relationship between world-building and experience in RPGs. As I learn more about the world, the characters I play can have more thought out backgrounds and motivations, improving my experience, which makes me want to learn more about the universe. I was also interested to see how the AI would handle sources not about the game itself, but rather about a range of topics that exist *inside* the game.

The AI-Generated Materials

Podcast

Prompt: Cover a broad history, honing focus on the conflict between men and elves

Infographic

Prompt: Make an infographic about the Oblivion Crisis and how the High Elves capitalized on it.

Mind Map

Audit

1. Accuracy Check

Overall, the AI got a lot right about the historical origins and monumental events in the world of the game. There are some topics that are somewhat confusing that I was surprised it got mostly right. It didn’t get much wrong, but it did make a few strange or even incorrect over-generalizations. For example, in the podcast it said that the difference between the two types of “gods” in this world is “the core takeaway for how magic works”, which it is not. Even weirder, it got the actual origin of magic in the games correct later on.

2. Usefulness for Learning

I do think that these sources would be incredibly useful for someone with no prior knowledge of this series to easily learn about the world the games exist in. The podcast does a good job of simplifying the most important events for understanding what’s happening and the motivations of different factions. However, there are a lot of nuanced ideas that it completely misses, which could be due to the length being set to normal. The mind map does a really good job of connecting important ideas of the universe together. However, it also places too much importance on certain topics, such as a handful of weapons, only one of which has any real importance to the larger plot. Lastly, I thought that the infographic did a nice job of laying out the events that I prompted it to, but there were a few spelling errors.

3. Aesthetics of AI

One of the strangest things I encountered doing this was the ways that the AI would try to make itself sound more human during the podcast. For instance, it would stutter, become exasperated at certain abstract topics, and even make references to memes not found in the sources. The AI definitely has a certain voice to it. I don’t know how to exactly describe it, but in the podcast it seems to talk like everything it mentions is the most important thing ever, and the other AI “voice” always seems to be surprised at what the other one is saying. I actually thought that the AI did a pretty good job at emphasizing the same things a human expert would. However it somewhat glosses over the actions of the player characters during the games, which I think a person would focus a bit more on.

4. Trust and Limitations

From this, I would probably warn a person against trusting the importance the AI might place on certain topics as well as the connections it makes between topics in generated educational materials. It also seems to avoid any sort of speculative ideas whatsoever, which I found odd since there were books in the sources which do theorize on certain unknown events or topics. I’d say the AI seems the most reliable in taking the information you give it and organizing it into easily consumable chunks. However, this only seems to be at a surface level, and when it tries to draw conclusions about topics, it tends to fall flat or make incorrect assumptions. I think in this case, you’d be better off just watching a video someone has already made on the games.

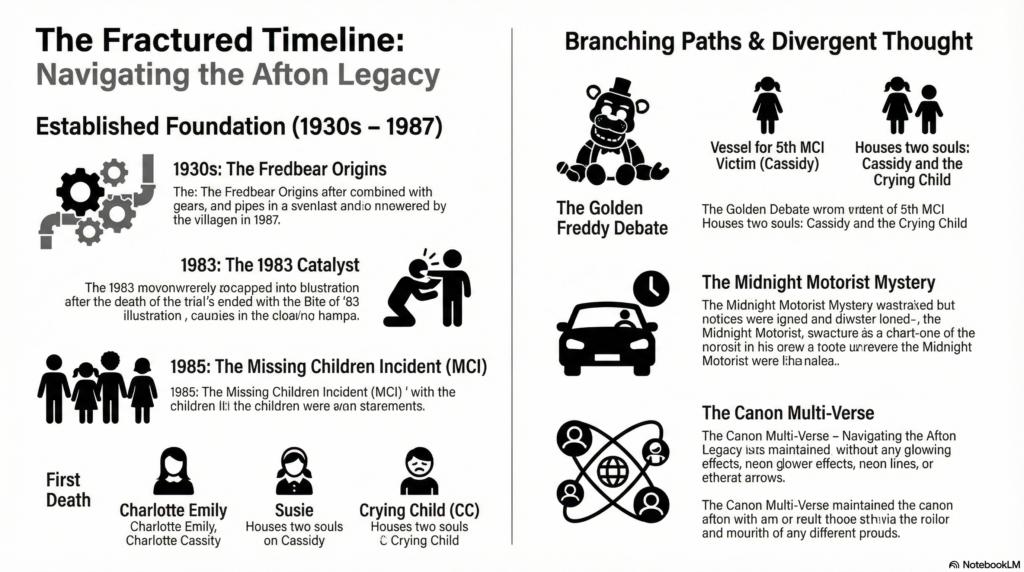

AI Expert Audit: I made Notebook LM theorize about Five Nights at Freddy’s

Posted: February 4, 2026 Filed under: Uncategorized

The Source Material (I kind of went too far here):

we solved fnaf and we’re Not Kidding

https://www.reddit.com/r/GAMETHEORY/

How Scott Cawthon Ruind the FNAF Lore

https://freddy-fazbears-pizza.fandom.com/wiki/Five_Nights_at_Freddy%27s_Wiki

https://www.reddit.com/r/fivenightsatfreddys/

GT Live react to we solved fnaf and we’re Not Kidding

My Source Material, Why Did I Choose This?:

I actually chose materials that aren’t important to me now, but were when I was younger. I love listening to video essays and theories on various media. Whenever I was animating or doing a mundane art task in my undergrad, I would have that genre of video in the background to take a break from listening to the news (real important shit). It’s super silly stuff, but when I was a teenager, Game Theory first started getting BIG; seeing a huge channel discussing my favorite IPs, subverting and contextualizing their narratives, felt very important. It really validated my feelings that video games were art.

However, I am now grown, and I care far less about Five Nights at Freddy’s; now it feels like fun junk food for my brain. (Although teens and kiddos still care about the spooky animatronics, so it was a clutch move for bonding with the youths when I was a nanny.) I also hate AI, I hate it. I don’t hate automation; it makes life way better when done correctly. I don’t think “AI” is done correctly; it’s mostly bullshit even down to the name. It’s a marketing strategy giving excuses to companies to fire workers and build giant databases that poison the land. I did not want to give Notebook LM anything “meaningful”. I didn’t want to let it in on the worlds I care about of my own volition. So, I gave it the silly spooky bear game that I know way too much about.

The AI Generated Materials

“Create an info graph of the official Five Night of Freddy’s Timeline with the information presented. Creating branches of diverging thought alongside widely agreed upon information.”

“Form a debate on what Timeline is the canon for FNAF.

Each host has to make their own original timeline.

Both hosts should sound like charismatic youtubers with dedicated channels to the video game and it’s lore.

Both Youtubers should use the words often associated with the Fandom and culture of FNAF.

Both hosts you have distinct personalities and opinions from one another.

Both hosts will have different opinions on whether the books should be used in lore making.”

1. Accuracy Check

What did the AI get right?

The basics. It was able to categorize the general hot topics (e.g., MCI or the Missing Child Incident, The Bite of ’83 and ’87, The Aftons…). It would sometimes match which theory goes with which YouTuber. It’s pretty efficient at barfing out information in bullet-point fashion.

What did it get wrong, oversimplify, or miss entirely?

The transcripts from the videos aren’t great; they don’t separate who is saying what, so it struggles when trying to describe the multiple popular theories out there and how they conflict. When I had it make an audio debate where two personalities choose a stance to argue about from the materials I provided, it was pretty much mincemeat. Yes, both were referencing actual game elements, but in ways that made no sense for the actual theories provided; the “hosts” argued about points no real person would argue about. In the prompt, I instructed one personality to use the books as reference while the other did not, and it took that and spent 70% of the podcast arguing about the books. The mind map struggles to clarify what is theory and what is canon fact. The info graph was illegible.

Were there any subtle distortions or misrepresentations that a non-expert might not catch?

Going back to the mind map: it doesn’t cite its sources well. It does provide the transcript it referred to, but the transcripts aren’t very useful, as described above. It flip-flopped between stating what was a theory and what was canon to the game (confirmed by the creators). If someone were to read it without much knowledge, they would be bombarded with information that conflicts, isn’t organized narratively, and isn’t stated in the context of its origin.

2. Usefulness for Learning

If you were encountering this material for the first time, would these AI-generated resources help you understand it?

Semi-informative but not at all engaging.

What do the podcast, mind map, and infographic each do well (or poorly) as learning tools?

Both podcast and mind map were at least comprehensible; the info graph was not.

Which format was most/least effective? Why?

The podcast is the most effective; there was some generated personality to distinguish the motivation behind certain theories, not great distinctions but more than nothing.

3. The Aesthetic of AI

It’s safe to say Youtubers and podcasters are still safe job wise. Hearing theories about haunted animatronics in the format and aesthetics of an NPR podcast was deeply embarrassing. Hearing a generated voice call me a “Fazz-head” was demoralizing to say the least.

They made pretty bad debaters too. The one who was presumably assigned the role of “I will only use the games as references” at one point waved away their opponent’s claim with the response, “yeah but that’s if you seriously take a mini game from 10 years ago”.

It took out all of the fun; there were no longer cheeky remarks or self-deprecating jokes about the silliness of the topic and efforts. Often theorists will acknowledge that Scott Cawthon did not think these implications fully out, that this effort may be rooted in retcons and wishful thinking, but it’s still fun. The hosts and mind map acted like they were categorizing religious text, and it was remarkably unenjoyable to sit through.

4. Trust & Limitations

AI is good at taking (proven) information and organizing it in a way that is nice to look at. It’s great for schedules or breakdowns. It sucks at just about everything else. I only really have benefitted from AI when it comes to programming; it’s really nice to have an answer to what is wrong with your code (even if it’s not always right; it usually leads you past the point of being stumped).

When it comes to art, interpretation, and comprehension, I wouldn’t recommend AI to anyone. If you are making a quiz, make it yourself. The act of making a quiz based off study topics will increase your comprehension far more than memorizing questions barfed out to you. If you don’t have the time to produce something, then produce something you can with the time you have or collaborate with someone who can produce with you. Use AI to fix your grammar (language or code), use AI to make a schedule if you suffer from task paralysis, but aside from accommodations and quick questions, leave it alone.

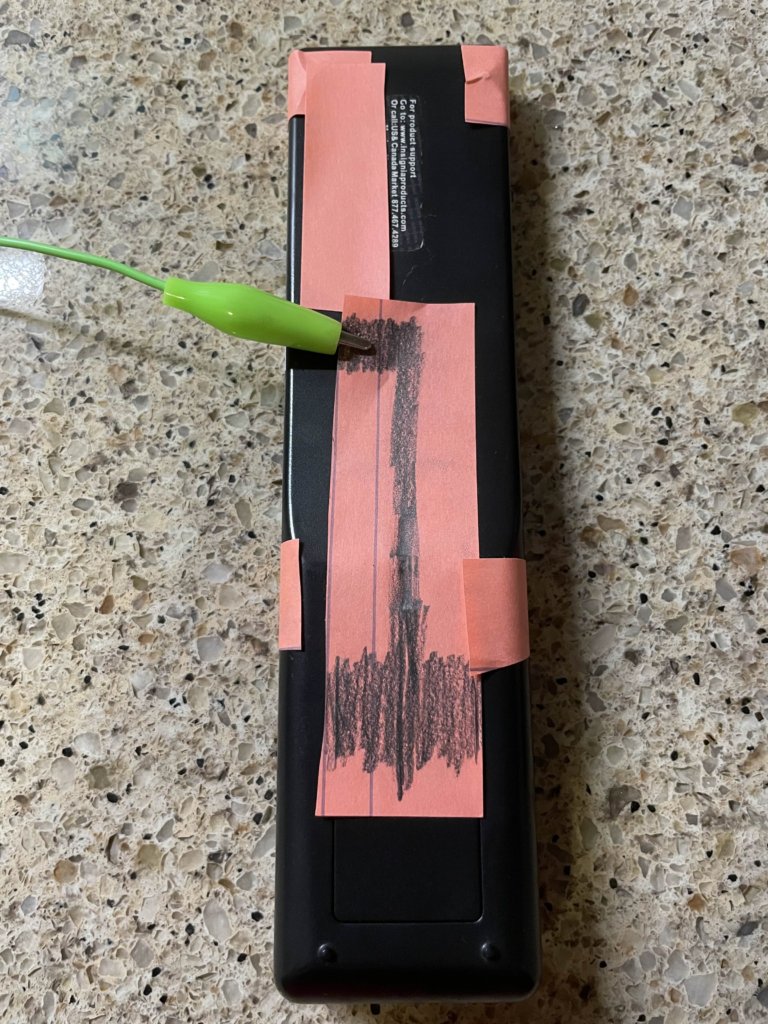

Pressure Project 3: Expanded Television

Posted: December 18, 2025 Filed under: Uncategorized

I had a lot of ideas for this pressure project but ended up going with an expanded version of my first pressure project. I thought it would be really fun to use a Makey Makey to turn an actual TV remote into something that can control my Isadora TV. If I used sticky notes and conductive pencil drawings, I could fake pressable buttons to change the channel and turn it on and off.

To me, the hardest part of using a Makey Makey is always finding a way to ground it all. But I had the perfect idea of how to do this on the remote: because people usually have to hold a remote in their hand when they use it, I can try to hide the ground connection on the back! See below.

This worked somewhat well but because not everyone holds a TV remote with their hand flat against the back, it may not work for all people. You could use more paper and pencil to get more possible contact points, but this got the job done.

For the front buttons, I originally also wanted the alligator clips to be on the back of the remote, but I was struggling to get a consistent connection when I tried it. I think the creases where the paper bends around to the back of the remote cause issues. I’m pretty happy with the end result, however. See below.

For Isadora, I created a new scene that was the TV turning on so that people could have the experience of both turning on and off the TV using the remote. The channel buttons also work as you would expect. The one odd behavior is that turning on the TV always starts at the same channel, unlike a real TV which remembers the last channel that it was on.
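The "remembers the last channel" behavior of a real TV comes down to keeping the channel number in state that outlives the power toggle. Here is a small Python sketch of that logic; it is a stand-in for whatever the Isadora scene setup would need, and the class and method names are made up for illustration.

```python
class Television:
    """Toy model of a TV whose channel survives being switched off and on."""

    def __init__(self, channel_count, start_channel=0):
        if channel_count < 1:
            raise ValueError("a TV needs at least one channel")
        self.channel_count = channel_count
        self.channel = start_channel  # persists across power toggles
        self.is_on = False

    def toggle_power(self):
        # Only the power state flips; the channel is deliberately left alone.
        self.is_on = not self.is_on
        return self.is_on

    def channel_up(self):
        if self.is_on:  # the remote does nothing while the TV is off
            self.channel = (self.channel + 1) % self.channel_count
        return self.channel

    def channel_down(self):
        if self.is_on:
            self.channel = (self.channel - 1) % self.channel_count
        return self.channel
```

The modulo wraps the last channel back around to the first, which matches how channel buttons usually behave.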

I also added several new channels, including better static, a trans pride channel 🏳️‍⚧️, a channel with a secret, a warning channel, and a weird, glitchy filter channel. Unfortunately, I cannot get Isadora to open on my laptop anymore! I had to downgrade my drivers to get it to work, and at some point the drivers updated again. I cannot find the old drivers I used anymore! It’s a shame ’cause I liked the new channels I added… 🙁

The static channel just moves between 4 or so images to achieve a much better static effect than before and the trans pride channel just replaces the colors of the color bar channel with the colors of the trans flag.

The main “secret revealed” I had was a channel that started as regular static but actually had a webcam on and showed the viewers back on the TV! The picture very slowly faded in and it was almost missed, which is exactly what I wanted! I even covered the light on my laptop so that nobody would have any warning that the webcam was on.

There was also a weird glitchy filter channel that I added. This was inconsistently displayed and was very flashy sometimes, but other times it looked really cool. Because of this, I added a warning channel before this channel so that anyone who can’t look at intense things could look away. When I did the presentation, it was not very glitchy at all and gave a very cool effect that even used the webcam a little bit (even though the webcam wasn’t used anywhere in that scene…)

The class loved the progression of the TV for this project. One person immediately became excited when they saw the TV was back. They also liked the secret being revealed as a webcam and appreciated the extra effort I put into covering the webcam light as well. In the end, I was very satisfied with how this project turned out; I just wish I could show it…

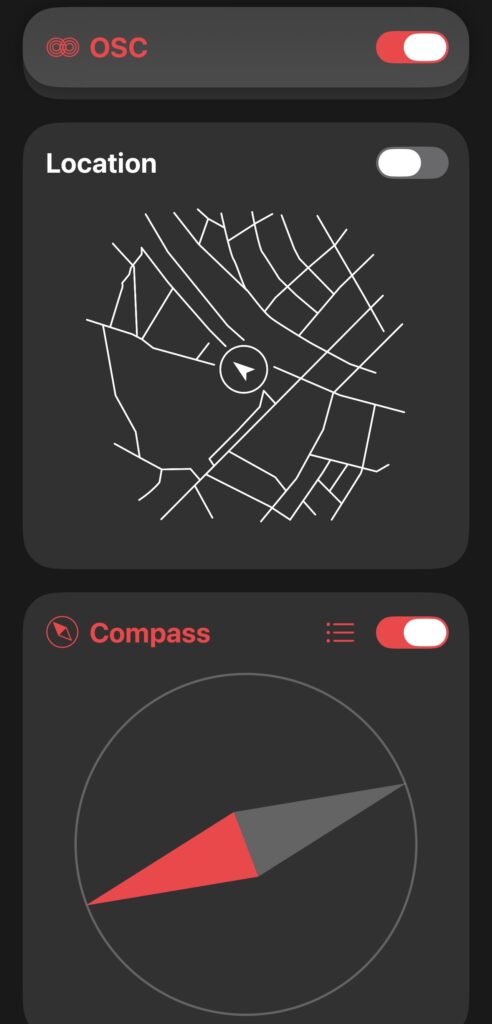

Cycle 3: Failure and Fun!

Posted: December 18, 2025 Filed under: Uncategorized

My plan for this cycle was simple: add phone OSC controls to the game I made in previous cycles. But this was anything but simple. The first guide I used ended up being a complete dead end! First, I used Isadora to ensure that my laptop could receive signals at all. After verifying this, I tried sending signals to Unity and nothing! I tried sending signals from Unity to Isadora and that worked(!) but wasn’t very useful… It’s possible that the guide I was following was designed for a very specific type of input which the phones from the motion lab were not giving, but I was unable to figure out a reason why. I even used Isadora to see what it was seeing as inputs and manually set the names in Unity to these signals.
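For this kind of "is anything arriving, and under what name?" debugging, a tiny standalone listener can help rule out the game engine entirely. The Python sketch below uses only the standard library and decodes plain OSC 1.0 messages with int, float, and string arguments. It is not what was used in the project: the port number 9000 and the `/accxyz` address in the usage note are assumptions, and OSC bundles are not handled.

```python
import socket
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated ASCII string padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator plus padding up to the next 4-byte boundary.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_osc_message(data):
    """Return (address, args) for a single OSC message (bundles unsupported)."""
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "f":  # 32-bit big-endian float
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "i":  # 32-bit big-endian integer
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "s":  # padded string
            text, offset = _read_padded_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag}")
    return address, args


def listen(port=9000):
    """Print every OSC message that reaches this machine on the given UDP port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            data, sender = sock.recvfrom(4096)
            try:
                print(sender, parse_osc_message(data))
            except (ValueError, struct.error) as err:
                print(sender, "could not decode packet:", err)
```

Calling `listen()` with the port the phone app is sending to would print each incoming address (something like `/accxyz`, depending on the app) and its values, which are the exact names a receiver in Unity would need to match.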

After this, I took a break and worked on my capstone project class. I clearly should have been more worried about my initial struggles. I tried to get it working again at the last minute and found another guide specifically for getting phone OSC to work in Unity. I couldn’t get this to work either, and I suspect this was because it was for TouchOSC, which I wasn’t using (and whose type of functionality I didn’t need). I thought I could get it to work, but I was getting tired and decided to sleep and figure it out in the morning. I set my alarm for 7 am knowing the presentation was at 2 pm (I got senioritis really bad, give me a break!). So imagine my shock when I woke up, feeling rather too rested I should say, and saw that it was nearly noon! I had set my alarms for 7 pm, not am…

This was a major blow to what little motivation I had left. I knew there was no chance I could get OSC working in that time and especially no way I would get to make the physical cardboard props I was excited to create. I wanted to at least show my ideas to the class, so I eventually collected myself and then quickly made a presentation to show at presentation time.

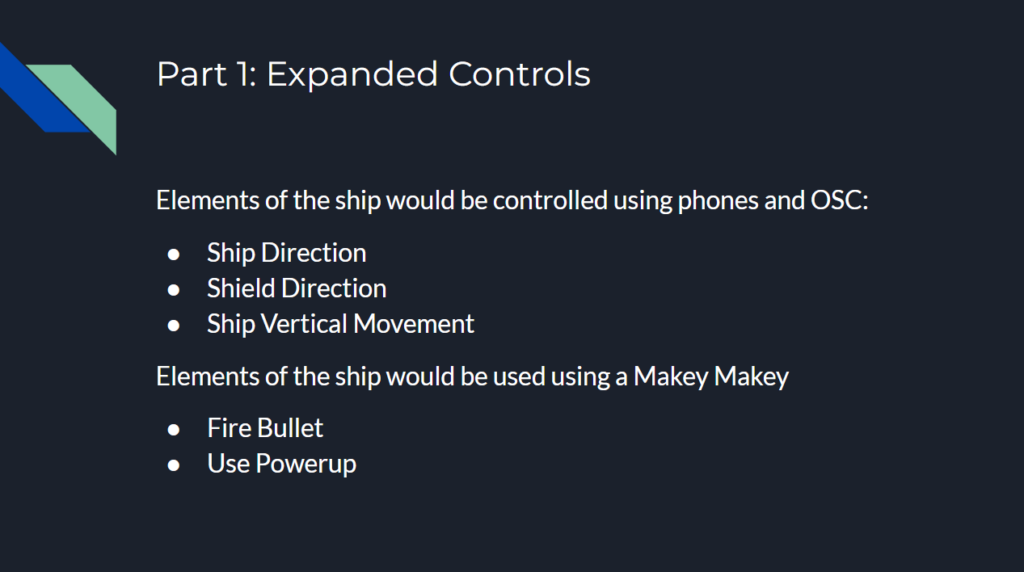

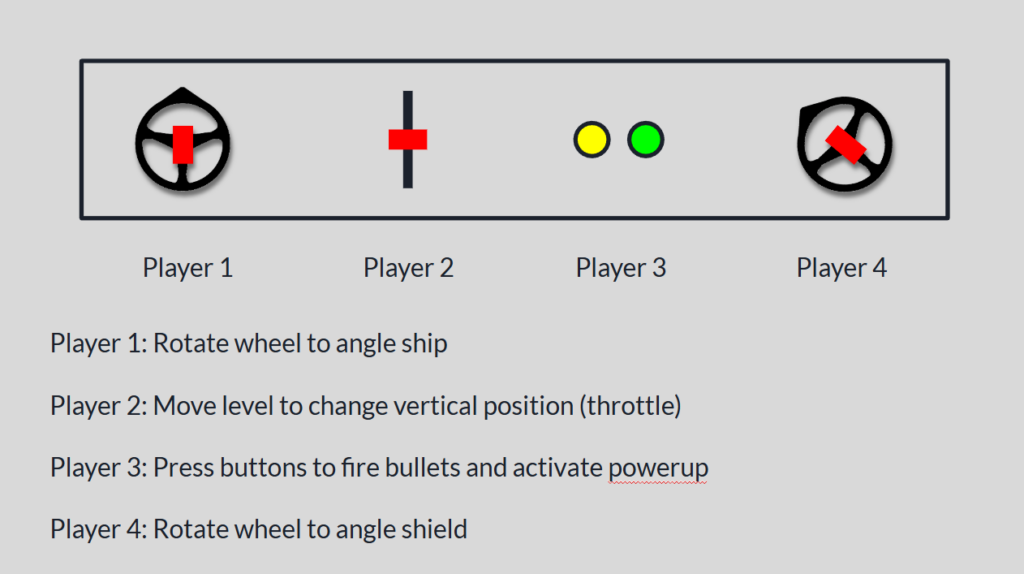

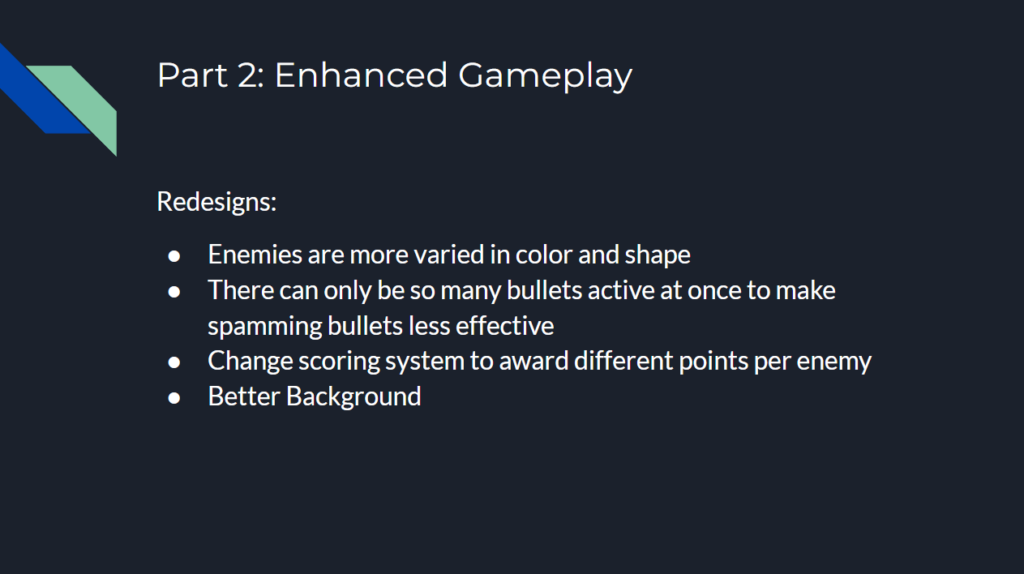

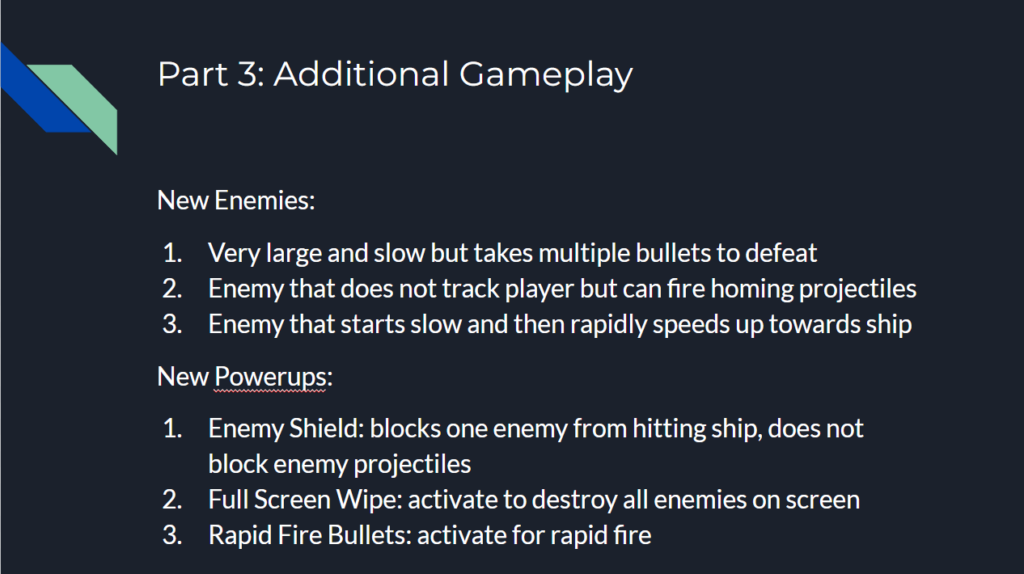

The presentation showed off the expanded control that I wanted to add had I been smarter in handling the end of the semester. The slides of the presentation can be seen below.

I then had an idea to at least have some fun with the presentation. Although I couldn’t implement true OSC controls, I could maybe try to fake some kind of physically controlled game by having people mime out actions and then use the controller and keyboard to make the actions happen in the game!

To my surprise, this actually worked really well! I had two people control the direction of the ship and shield by pointing with their arms, and one person in the middle control the vertical position of the ship with their body. I actually originally was going to have the person controlling the vertical position just point forward and backwards like the other two people, but Alex thought it would be more fun to physically run forwards and backwards. I’m glad he suggested this as it worked great! I used the controller which had the ship and shield direction, and someone else was on the laptop controlling the vertical position. The two of us would watch the people trying to control the ship with their bodies and tried to mimic them in game as accurately as we could. A view of the game as it was being played can be seen below.

I think everyone had fun participating to a degree although there was one major flaw. I originally had someone yell “shoot!” or “pew!” whenever they wanted a bullet to fire, but this ended up turning into one person just saying the command constantly and it didn’t add much of anything to the experience. I did originally have plans that would have helped in this aspect. For example, I was going to make it so there could only be 2 or 3 bullets on screen at once to make spamming them less effective, or maybe have a longer cooldown on missed shots.
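Those two anti-spam ideas (a cap on bullets on screen and a longer cooldown after a miss) fit in a few lines of state. The sketch below is in Python rather than the game's actual code, and every name and number in it is an assumption picked for illustration.

```python
class Cannon:
    """Limits firing with an on-screen bullet cap and a cooldown timer."""

    def __init__(self, max_bullets=3, cooldown=0.5, miss_penalty=1.0):
        self.max_bullets = max_bullets    # bullets allowed on screen at once
        self.cooldown = cooldown          # seconds between any two shots
        self.miss_penalty = miss_penalty  # extra lockout after a missed shot
        self.active_bullets = 0
        self.ready_at = 0.0               # earliest time the next shot may fire

    def try_fire(self, now):
        """Fire if allowed; return True when a bullet was actually spawned."""
        if now < self.ready_at or self.active_bullets >= self.max_bullets:
            return False
        self.active_bullets += 1
        self.ready_at = now + self.cooldown
        return True

    def bullet_resolved(self, now, hit):
        """Call when a bullet hits something or leaves the screen."""
        self.active_bullets = max(0, self.active_bullets - 1)
        if not hit:
            # A miss pushes the next allowed shot further into the future.
            self.ready_at = max(self.ready_at, now + self.miss_penalty)
```

With a scheme like this, someone yelling "pew!" nonstop gets no more bullets than someone who aims, which is the effect the planned limits were meant to have.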

In the end, I had a very good time making this game and I learned a lot in the process. Some of it was design-wise, but a lot was also about planning and time estimation!

Cycle 3 – A Taste of Honey

Posted: December 18, 2025 Filed under: Uncategorized

For Cycle 3, I focused on improving the parts of my Cycle 2 that needed reframing. I started with buying a new bottle of honey so it wouldn’t take long for it to reach my mouth.

Product placement below is for documentation purposes. Not sponsored! :)) (Though I’m open for sponsorships.)

I edited the sound design, making the part of the song Honey by Kehlani that says “I like my girls just like I like my honey, sweet.” play 3 times so the lyrics wouldn’t be missed. In my Cycle 2, the button that said “Dip me in honey and throw me to the lesbians” was missed by the experiencers. To make sure it caught attention, I colored it with yellow highlighter. To clarify and better explain the roles of the people holding the honey bottle and the book, I recorded a video of myself, trying to sound AI-like without the natural intonations in my regular speaking voice. In this pre-recorded video, I gave clear directions to two volunteers, named person 1 and person 2, inviting them into the space and telling them to do certain actions. I also put two clean spoons in front of them, telling them that they could taste the honey if they wished. Both people tasted the honey, and one of them started talking about personal connections with honey as the other joined. This conversation invited me to improvise within my score, which was structured enough due to having expected outcomes but fluid enough to be flexible along the way. The choreography I composed didn’t happen the way I envisioned, and the experiencers didn’t end up completing the circuit to make the MakeyMakey trigger my Isadora patch. So I improvised and triggered the patch myself. This made the honey video appear on top of the swirly live video affected by the OSC in my pocket. I moved in changing angles and off-balances like I did in Cycle 2, with the recording of my noise effects also playing in the background.

I finished by dropping to the floor and reaching the 7 women expressing, one by one, that they are lesbians.

My vision manifested much more clearly this time with the refinements. It was funny and unfortunate, though, that the circuit wasn’t completed: the highlighted section of the book attracted so much attention that the instructions I wrote above it ended up being missed, which is a reversal of what happened in Cycle 2. I received feedback that the experience shifted through different modes of being a participant and observer, and through shifting emotions of anticipation, anxiety and delight. The responses were affirming and encouraging, making me want to attempt Cycle 4 even outside the scope of this class. Throughout all 3 of my cycles and my pressure projects, I gained new useful skills to use in my larger research ideas. Besides the information on and space for interacting with technology, I am also very grateful for Alex, Michael, Rufus and my wonderful classmates Chris and Dee for creating a generative, meaningful, insightful and safe space that allowed me to not hold back on my ideas!

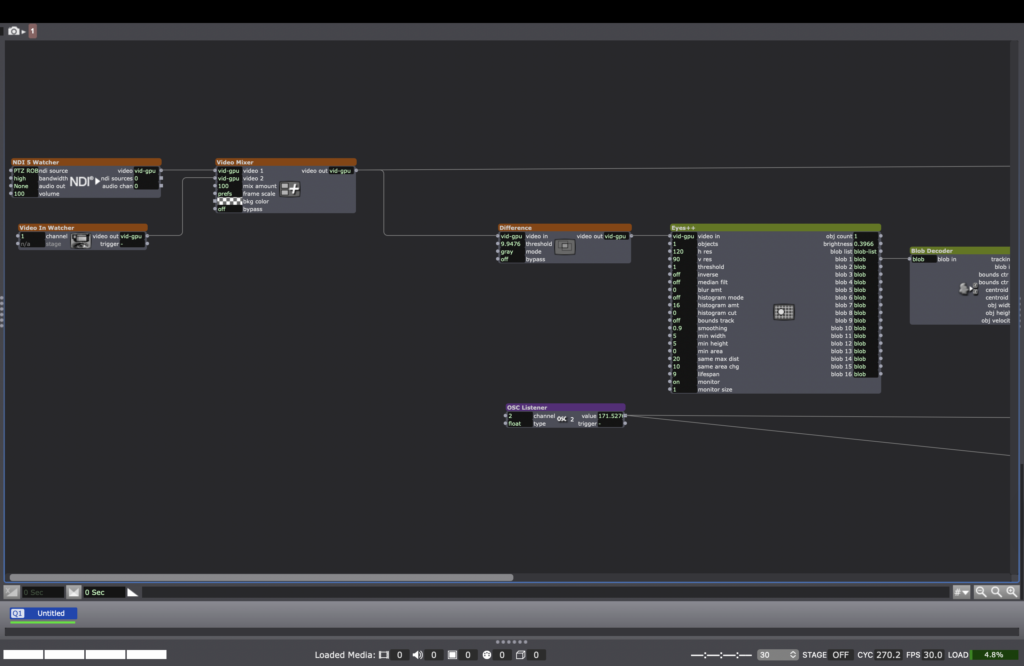

Cycle 2 – I Like My Girls Just Like I Like My Honey

Posted: December 18, 2025 Filed under: Uncategorized

For Cycle 2, I was more interested in pursuing the draft score I had initially prepared for Cycle 3, so I followed my instinct and set my Cycle 2 draft score aside.

Below was my draft score for Cycle 3, which ended up being Cycle 2:

This was going to be the first time I would independently use OSC so I needed practice and experimentation.

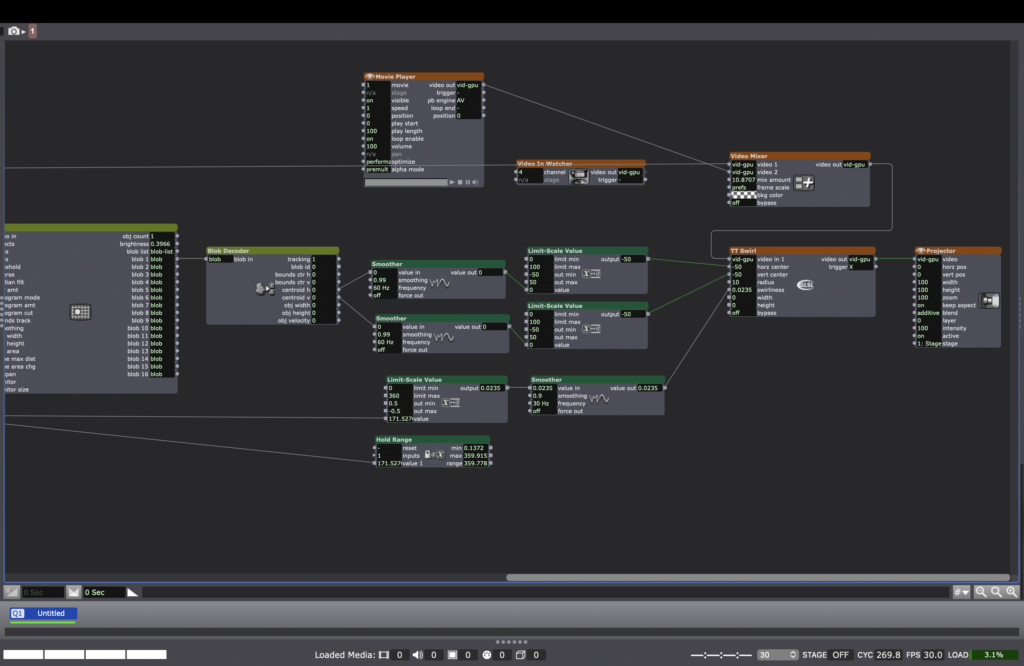

We connected an OSC listener to the patch so that the Swirl effect could receive OSC data from a phone and respond to it in real time. I put the phone in my pocket and let the movement of my body change the phone’s orientation, which changed the OSC values, which in turn changed the projected live video.
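For readers curious about what travels from the phone to the patch, here is a minimal Python sketch of the idea, not the Isadora patch itself. It decodes one OSC message carrying a single float and rescales it to an effect amount. The address `/phone/roll`, the -180 to 180 degree input range, and the 0 to 100 output range are all assumptions made for illustration; the actual phone app and Swirl settings may use different names and ranges.

```python
import struct


def read_padded_string(data, offset):
    """Read a null-terminated OSC string; return it and the next aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # OSC strings are null-padded so their total length is a multiple of 4 bytes.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_single_float(packet):
    """Decode an OSC message that carries exactly one float32 argument."""
    address, offset = read_padded_string(packet, 0)
    type_tags, offset = read_padded_string(packet, offset)
    if type_tags != ",f":
        raise ValueError("expected exactly one float argument")
    # OSC numbers are big-endian.
    (value,) = struct.unpack_from(">f", packet, offset)
    return address, value


def scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to the input range, then map it linearly to the output range."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


# Hypothetical message: the phone reports a roll angle of 90 degrees.
packet = b"/phone/roll\x00" + b",f\x00\x00" + struct.pack(">f", 90.0)
address, angle = parse_single_float(packet)

# Assumed ranges: -180..180 degrees in, 0..100 effect amount out.
swirl_amount = scale(angle, -180.0, 180.0, 0.0, 100.0)
print(address, angle, swirl_amount)
```

In Isadora, the OSC listener and a scaling step play these roles inside the patch, so no code is needed there; the sketch only makes the chain from body movement to phone orientation to OSC value to video effect concrete.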

After being introduced to this tool, I had all the external tools I needed to put my ideas into action. My Cycle 1 carried heavy emotions within its world-making. I wanted my Cycle 2 to have a more positive tone. I flipped through the pages of my Color Me Queer coloring book to find something I could respond to. I saw a text in the book that said “Dip me in honey and throw me to the lesbians.” With my newfound liberation and desire to be experimental, I decided to make that prompt happen. I connected one MakeyMakey cable to the conductive drawing on the coloring book page with this prompt. I connected another MakeyMakey cable to a piece of paper I attached to the cap of a honey bottle. This way, the honey bottle could be opened and the button on the coloring book pressed at the same time, triggering a video in Isadora. I found a video of honey dripping and layered it on top of the live video with the Swirl effect. I also added a part of the song Honey by Kehlani to the soundscape, which said “I like my girls just like I like my honey, sweet.”

After those lyrics, I walked over to the honey, grabbed it and tried to pour it into my mouth from the bottle. Because there wasn’t much honey left, it took a long time for it to reach my mouth. After I finally had honey in my mouth, I began moving in the space with the phone in my pocket controlling the OSC. It appeared like I was swirling through honey. I also recorded and used my own voice, making sound effects that went with the swirling actions, while also saying “Where are you?” Finally, I dropped my body on the floor, being thrown to the lesbians as a video played of 7 women saying “I am a lesbian” one by one.

Due to the sound design and how I framed the experience, I got feedback that some of the elements I aimed for didn’t fully land. When I explained my intention, there were a-ha moments and great suggestions for Cycle 3. Even though I left Cycle 2 with room for improvement, I was ecstatic about having learned how to use OSC. Following this excitement, I decided to combine the concepts from my Cycle 1 and Cycle 2 into a renewed version for my 2nd year MFA showing before my Cycle 3.

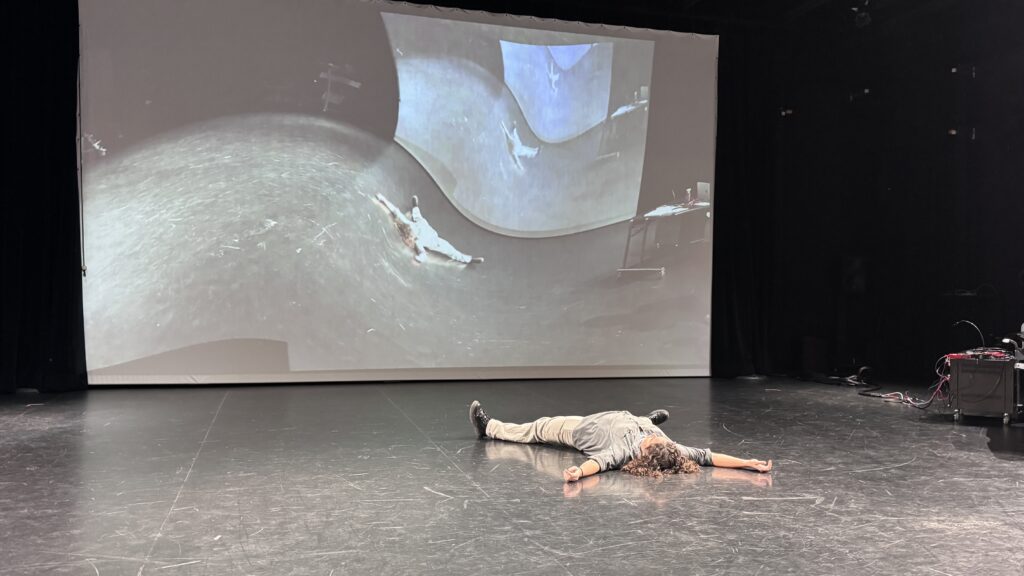

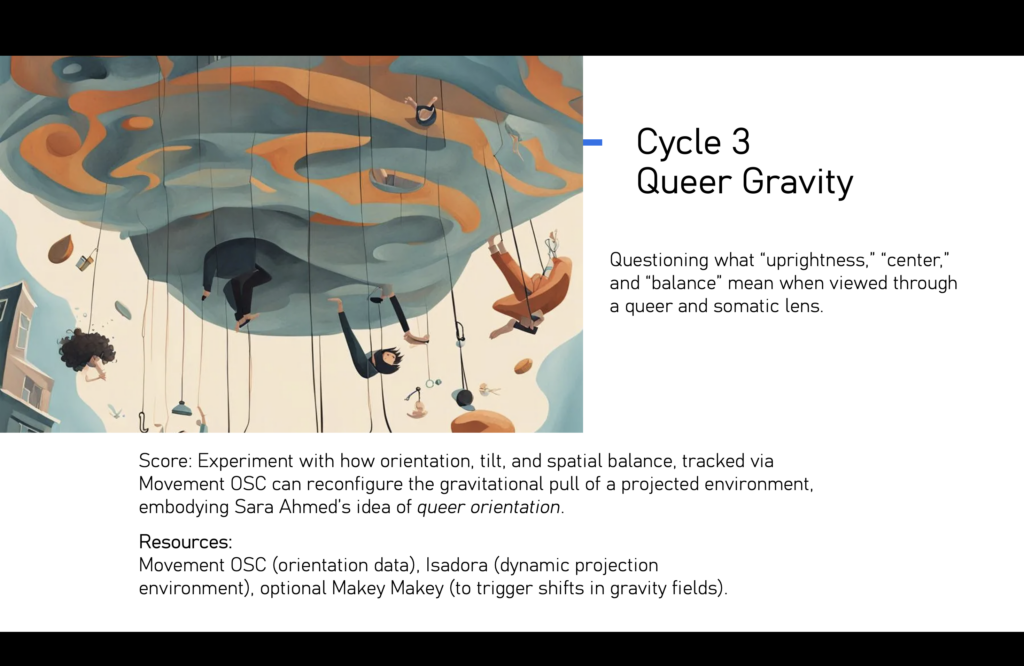

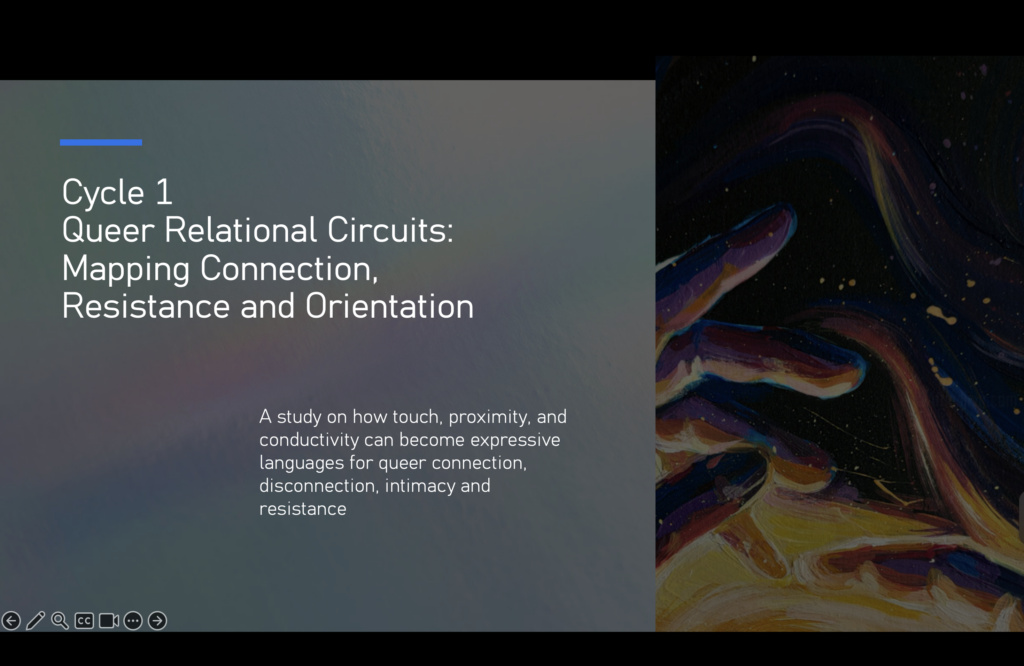

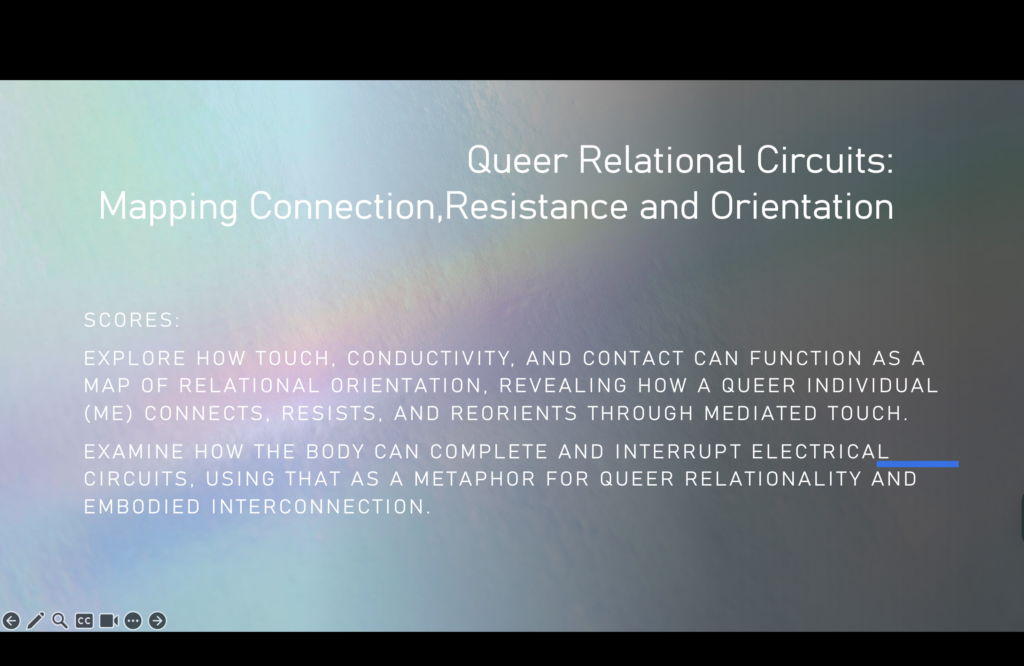

Cycle 1 – Queer Relational Circuits

Posted: December 18, 2025 Filed under: Uncategorized

For my cycles, I wanted to engage in play and exploration within my larger research interests in my MFA in Dance. My research brings a queer lens to creative expression, and I find it very exciting to reach creative expression through media systems and intermedia in addition to movement.

This was my draft score for Cycle 1:

A few days before this cycle, I had bought a new coloring book without planning to use it for any of my assignments.

The book includes blank drawings for coloring, questions, phrases, journal prompts, and some information on queer history. While flipping through its pages, I remembered that I could draw on the paper with the MakeyMakey’s conductive pencil, attach the cable to the drawing, and make it trigger something in Isadora. So I programmed a simple patch with videos that would be triggered when I touched the page where I had drawn a shape with the conductive pencil or with any other conductive material.

The sections of the book that I wanted to use were: “What is your favorite coming out story?” and “Where is your rage? Act up!” I went back to the internet to find videos I already knew about that corresponded to the questions I chose. I remembered being younger and watching a very emotional coming out video by one of the YouTubers I frequently watched at the time. I remember it affecting me emotionally. I wanted to include her video. As a response to “Where is your rage? Act up!” I found a video of police being violent toward the public at a Pride event in Turkey, where I’m from. As a queer-identifying person who was born and raised in Turkey, I was exposed to the inhumane behavior of the police toward people at Pride events, trying to stop Pride marches. This is one place where I feel rage, so I included a video of an incident that happened some years ago.

In my possession, I also had a notebook entitled The Gay Agenda, which I had never used before. I thought this cycle was a good excuse to use it so I wrote curated diary pages for this cycle. I also drew on it with my conductive pencil so I could turn the page into a keyboard and activate a video by touching it.

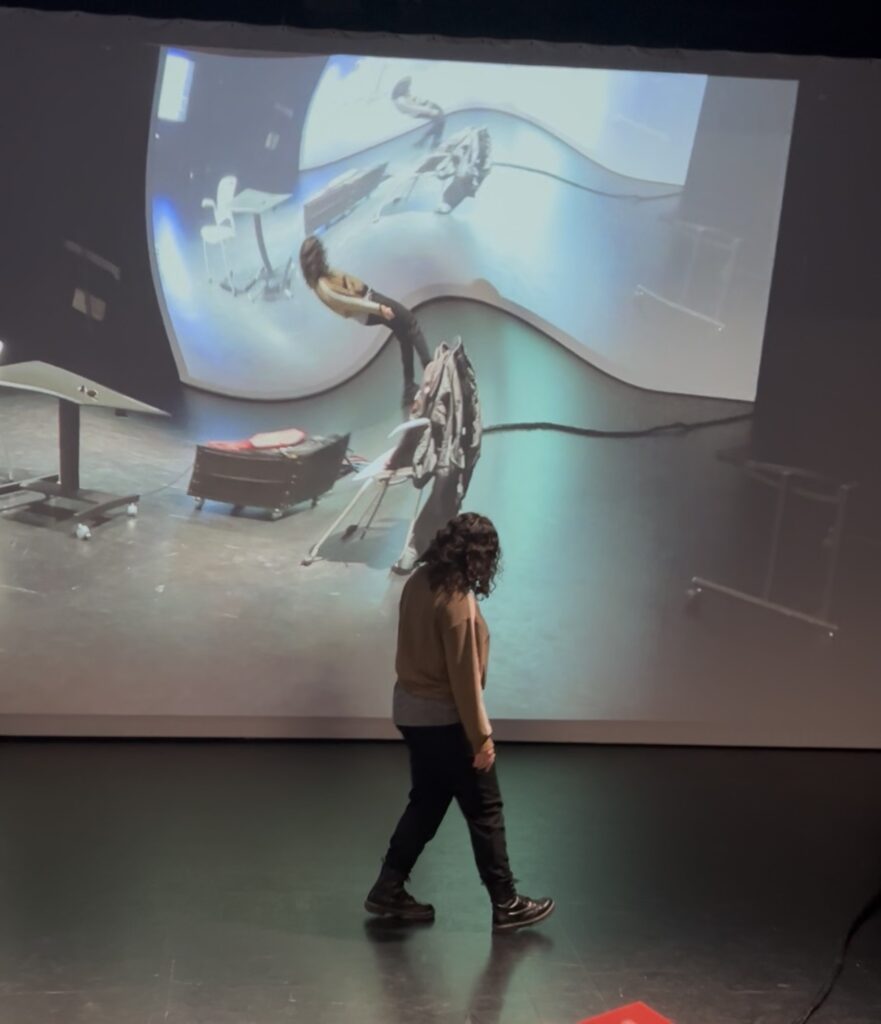

These photos show my setup while presenting:

I used an NDI camera to capture my live hand movements as I tapped on the pages and triggered the videos to appear on the projection. The live video was connected to the TV and the pre-recorded videos were connected to the main screen. I also used a pair of scissors as a conductive material and as symbolism. I received emotional and empathetic responses as feedback, as what I shared ended up taking me and the audience on a journey through a wave of emotions and thoughts. I also received feedback about how my hand movements made the experience very embodied, in response to my question, “I am in the Dance Department, if I use this in my research, how will I make it embodied?” Receiving encouragement and emotional resonance about where I was headed with my cycle allowed me to make liberated and honest choices. Spoiler alert: When starting Cycle 1, I did not know that I was also beginning to plant the seeds to use this idea in my MFA research showing.