Pressure Project 3: Puzzle Box

Posted: April 2, 2024 | Filed under: Uncategorized | Tags: Isadora, Pressure Project, Pressure Project 3

Well, the thing is, the project didn’t work. The idea was for a box to hold a puzzle that is entirely solvable without any outside information, so that anyone, with any background, has a fair chance at solving it. Because I am not a puzzle-making extraordinaire, I had to take inspiration from elsewhere. It just so happens that a puzzle meeting exactly those requirements was given to me as a gift by my Grandpa. It is called a Caesar’s Codex. The puzzle presents the user with four rows of symbols that slide up and down, and right next to them is a single column of symbols. On the back is a grid full of symbols very similar to the ones in the four rows. Finally, there are symbols on the four sides that are similar to the ones in the column next to the four rows.

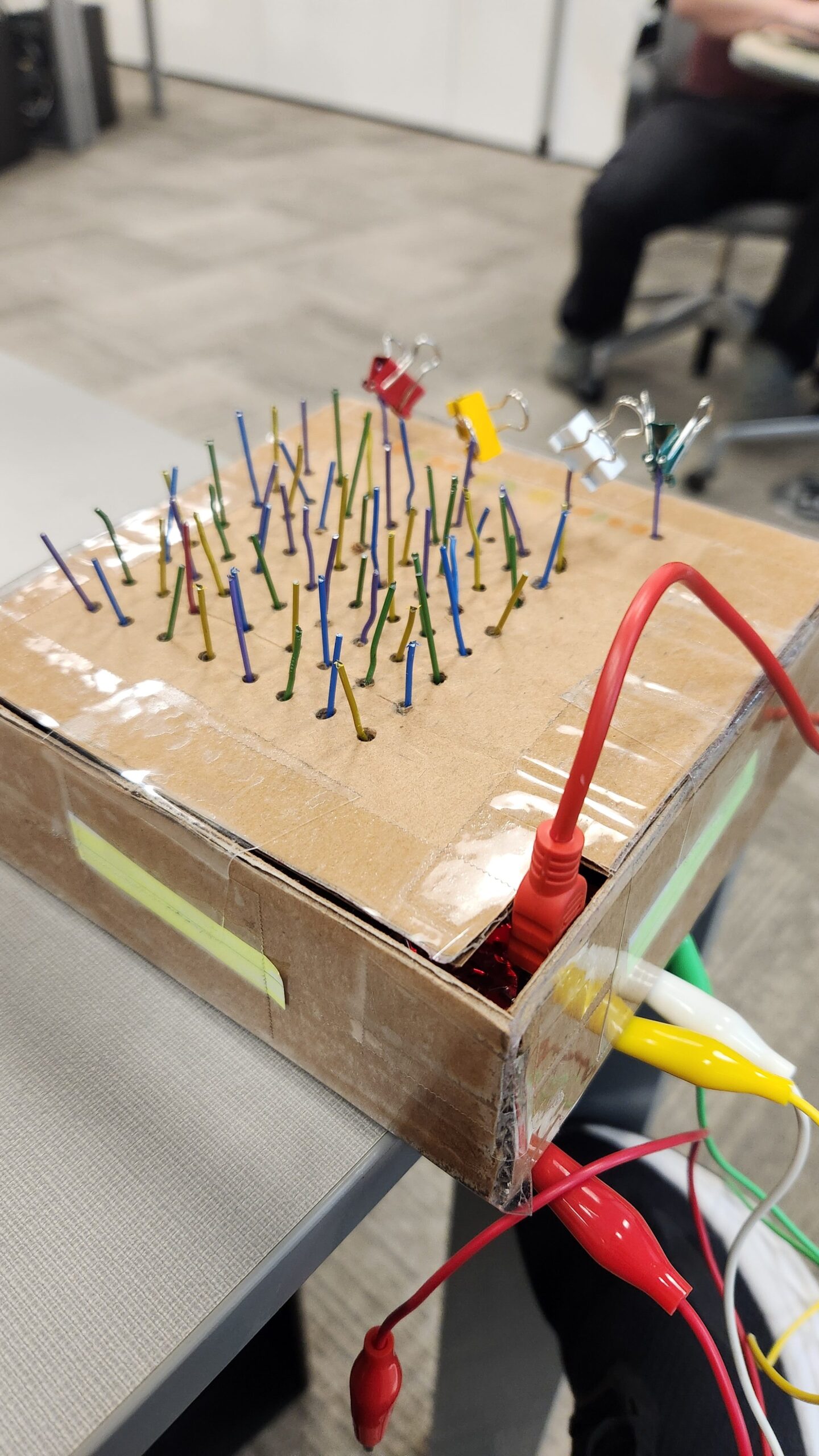

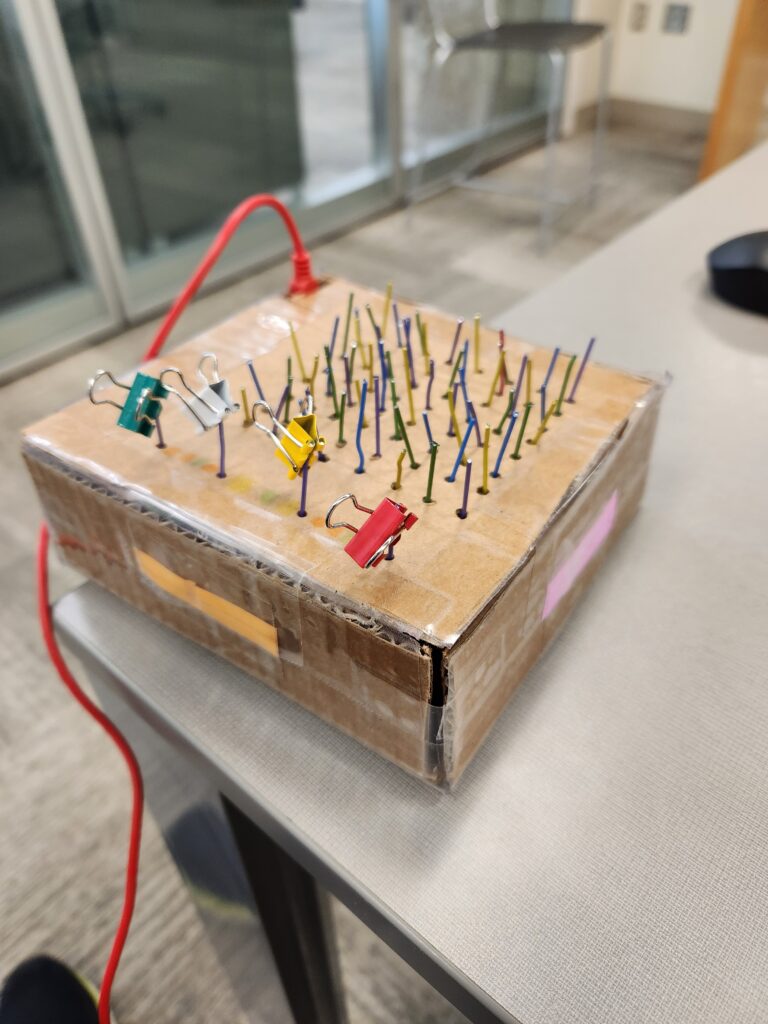

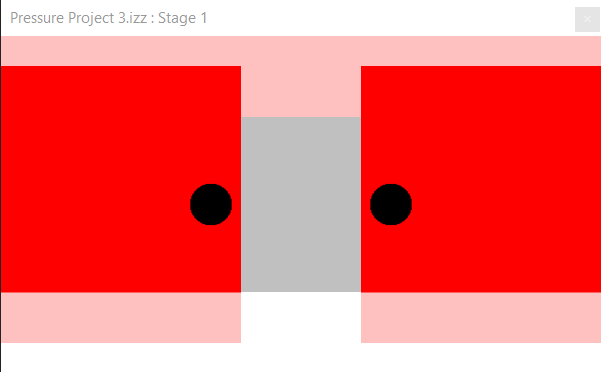

Now the challenge was to get this fully mechanical system to interact with a user interface created in Isadora. The solution was to use a Makey Makey kit. That meant the way the user moves the pieces to solve the puzzle needed to change, while the hints for solving it needed to stay the same. The mechanical puzzle requires constantly flipping the box over to find the right symbol on the grid, then flipping it back to move the row to the right position. I opted to set up just the grid portion so that it is used to solve the puzzle directly.

The paperclips are arranged in a grid-like pattern for users to follow, with one uniquely colored paperclip indicating the start. The binder clips indicate where an alligator clip needs to be attached to a paperclip. When the alligator clip is attached to the right paperclip, the screen shown in Isadora changes. Unfortunately, I never tested whether the paperclips were conductive enough. I assumed they would be, but the coating on the paperclips was resistive enough to keep current from flowing through them. So, lesson learned: always test everything before it is too late to make changes, especially anything your entire design depends on.

Pressure Project 3

Posted: March 28, 2024 | Filed under: Uncategorized | Tags: Pressure Project

A Mystery is Revealed | Color By Numbers

Initially, I began this project unsure of the mystery I wanted to explore. Reviewing past student work, I stumbled upon a personality-test concept that intrigued me and expanded my understanding of what constitutes a mystery.

Following my usual design process, I sought inspiration from Pinterest and various design platforms. A comic called “Polychromie” by artist Pierre Jeanneau caught my attention, particularly its use of anaglyph—a technique where stereo images are superimposed and printed in different colors, creating a 3D effect when viewed through corresponding filters. I was intrigued by how the mystery unfolded depending on the filter used.

While exploring the feasibility of implementing anaglyph on web screens, I ran into challenges because I was unfamiliar with the technique. So I pivoted towards a “color by numbers” concept, drawing inspiration from “Querkle,” a coloring activity based on circular designs developed by graphic designer Thomas Pavitte, who drew inspiration from logos designed using circles.

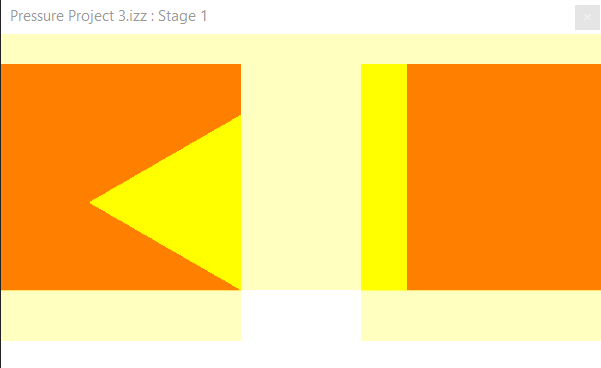

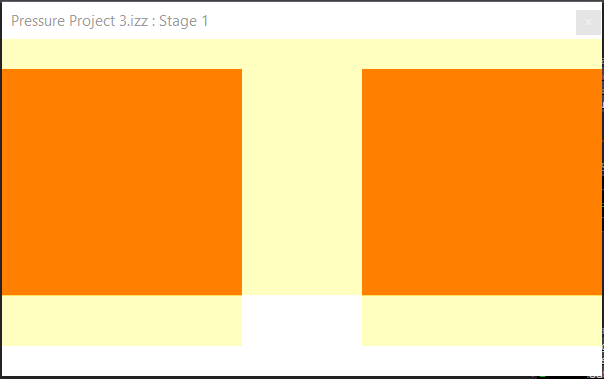

For practicality and because of time constraints, I chose simple images and created them in Adobe Illustrator, layering the circles into colorable sections. I used MakeyMakey for interaction and Isadora’s Live Capture for audio cues to keep participants engaged. Additionally, I devised a method using MakeyMakey and alligator clips for answering multiple-choice questions.

Using Keyboard Watcher, Trigger Value, and Jump++ actors, I set up the transitions between scenes in the patch that ultimately unveil the mysteries.
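Because a Makey Makey shows up to the computer as an ordinary keyboard, the Keyboard Watcher, Trigger Value, and Jump++ chain can be thought of as a small state machine. The Python sketch below is not the Isadora patch itself; the scene names, the answer keys, and the rule that a wrong answer keeps you on the same scene are all hypothetical.

```python
# Hypothetical model of the scene flow: a Makey Makey appears to the computer as
# a keyboard, so each alligator-clip "answer" arrives as an ordinary key press.
SCENES = ["intro", "question_1", "reveal_1", "question_2", "reveal_2"]

# Hypothetical answer key: the key that counts as the correct answer per question scene.
CORRECT_KEY = {"question_1": "left", "question_2": "space"}


def next_scene(current: int, key: str) -> int:
    """Return the scene index after a key press (a relative jump of +1 or 0)."""
    name = SCENES[current]
    if name in CORRECT_KEY:
        # Question scenes only advance on the correct answer; wrong answers stay put.
        return current + 1 if key == CORRECT_KEY[name] else current
    # Any other scene advances on any key, stopping at the last scene.
    return min(current + 1, len(SCENES) - 1)


if __name__ == "__main__":
    scene = 0
    for key in ["space", "up", "left", "space", "space"]:
        scene = next_scene(scene, key)
        print(key, "->", SCENES[scene])
```

Keeping the answer key in a dictionary separate from the scene list makes it easy to add questions without touching the jump logic.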

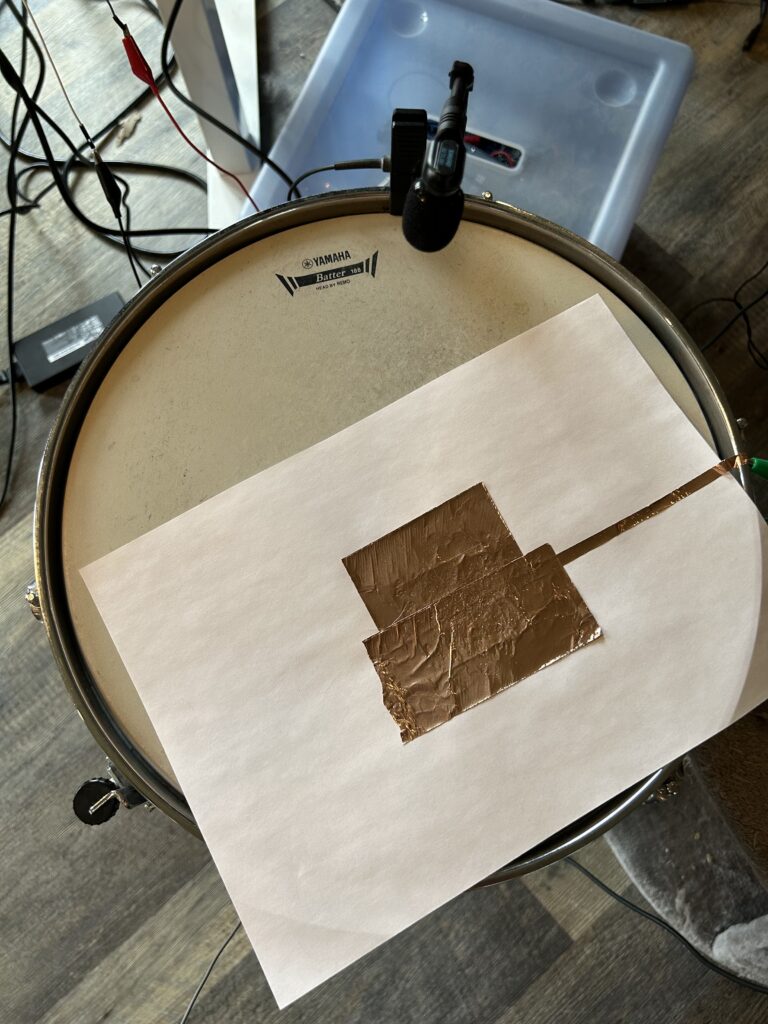

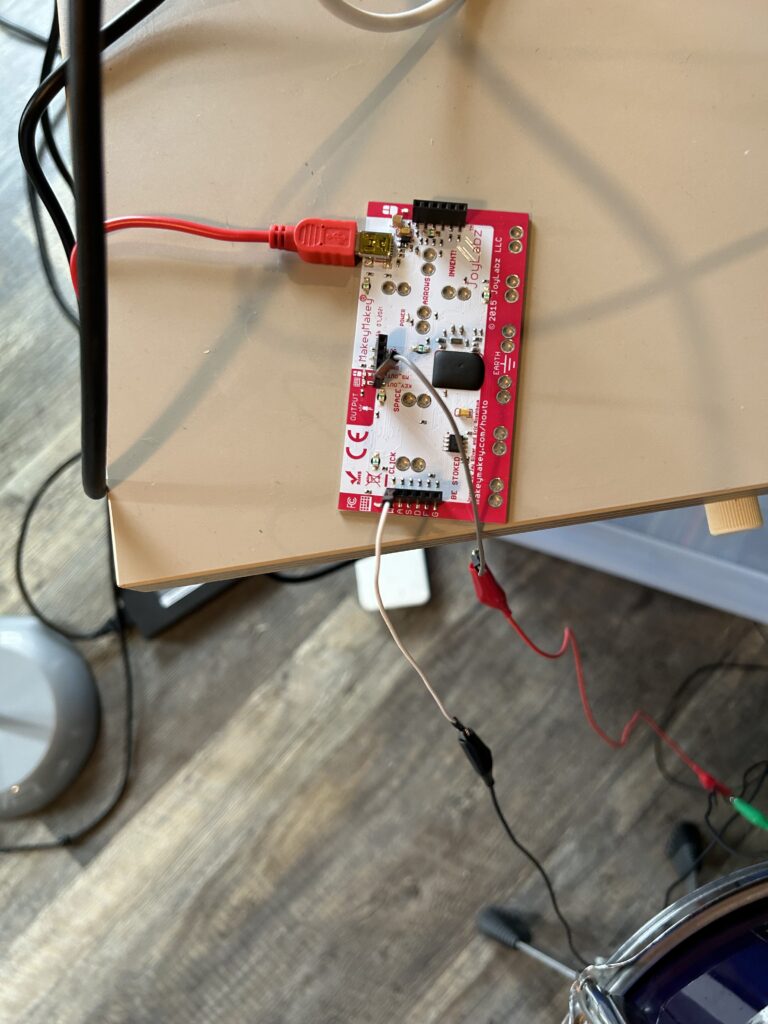

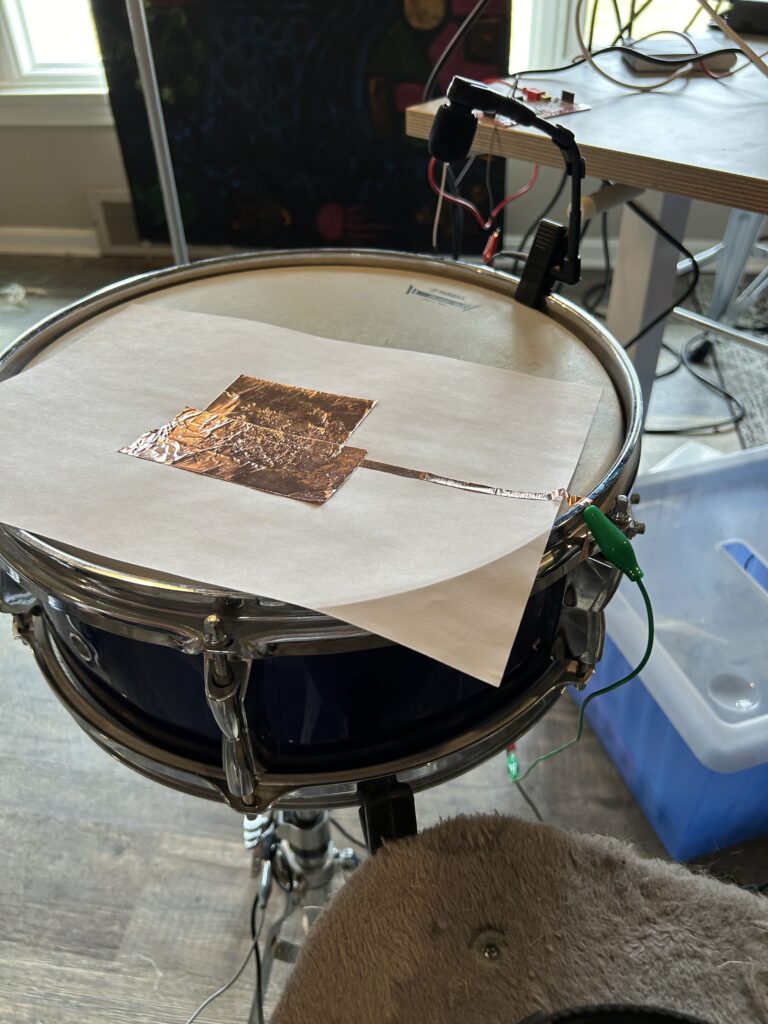

PP3 – Scarcity & Abundance

Posted: March 4, 2024 | Filed under: Uncategorized | Tags: Pressure Project

This project began with the idea of using a snare drum as a control event in relation to the Makey Makey device and Isadora. With the prompt of ‘a surprise is revealed’, I wanted to explore user experiences involving sharp contrasts in perspective through two parallel narratives. One narrative (‘clean path’) contains imagery suggestive of positive experiences within a culture of abundant resources. The second narrative (‘noisy path’) includes imagery that is often filtered out of mainstream consciousness because it is a failure byproduct of those same cultures of abundance. By striking the snare drum along with an audible metronome, the user traverses a deliberate set of media objects. In photo-based scenes, there is a continuum of images scaled from most to least ‘noisy’. Hitting the drum in sync with the metronome enhances the apparent cleanliness of the image, while hitting off beat has the reverse effect, producing noisier or more distasteful images. In video-based scenes, two videos were chosen that illustrate opposing viewpoints, and similarly, the timing of the drum beats determines whether positive or negative imagery is displayed.
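The on-beat/off-beat rule can be sketched as comparing each hit time against the metronome grid. In this Python sketch, the 60 ms tolerance and the one-step-per-hit movement along the image continuum are assumptions for illustration, not values taken from the project.

```python
def beat_error(hit_time: float, bpm: float) -> float:
    """Seconds between a hit and the nearest metronome click (clicks at 0, T, 2T, ...)."""
    period = 60.0 / bpm
    offset = hit_time % period
    return min(offset, period - offset)


def update_image_index(index: int, hit_time: float, bpm: float,
                       n_images: int, tolerance: float = 0.06) -> int:
    """Step along the noisy-to-clean continuum: index 0 is noisiest, n_images - 1 cleanest.

    On-beat hits (within `tolerance` seconds, an assumed value) move one step toward
    clean; off-beat hits move one step toward noisy.
    """
    if beat_error(hit_time, bpm) <= tolerance:
        return min(index + 1, n_images - 1)
    return max(index - 1, 0)


if __name__ == "__main__":
    index = 5
    for t in [0.51, 1.02, 1.25, 1.49]:  # example hit times at 120 BPM (clicks every 0.5 s)
        index = update_image_index(index, t, bpm=120, n_images=10)
        print(f"hit at {t:.2f}s -> image {index}")
```

Measuring distance to the *nearest* click means a hit slightly early counts the same as one slightly late, which matches how a drummer hears being "on beat".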

The first images are of random noise added to a sinusoid. A Python script was written to generate these images, and an array of noise thresholds was selected to cover the variation from a pure sinusoid to absolute random noise. This serves as a symbol for the entire piece, as Fourier mathematics forms the basis of electrical communication systems. Fourier theory holds that analog and digital waveforms can be represented as sums of sinusoids of varying frequency and amplitude. As such, all digital information (video, image, audio…) can be represented in terms of these sinusoids for efficient capture, transmission, and reception. In the digital domain, unwanted signal artifacts are often captured during this process, so digital signal processing (filtering) mechanisms are incorporated to clean up the signal content. Just as filtering adjusts signal content at the binary level of media objects, it also exists at higher computing levels, most notably algorithmic filtering in search engine recommendations, social media feeds, and email spam detection, to name a few. Further, on the human cognitive level, societies with an abundance of resources may be subject to the filtering out of undesirable realities.
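The original script isn’t shown, so here is a hypothetical NumPy reconstruction of the idea: blend a sine wave with uniform noise at several levels, then apply a simple moving-average low-pass filter to show how filtering pulls the signal back toward the clean sinusoid. The number of levels, the noise distribution, and the filter width are assumptions; saving each signal as an image (for example with a plotting library) is left out to keep the sketch dependency-light.

```python
import numpy as np


def noisy_sinusoid(mix: float, n: int = 1000, cycles: int = 5, seed: int = 0):
    """Blend a sine wave with uniform noise.

    mix = 0 gives a pure sinusoid, mix = 1 gives pure noise.
    Returns (signal, sine_part) so the sine component can be compared later.
    """
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 1.0, n, endpoint=False)
    sine_part = (1.0 - mix) * np.sin(2 * np.pi * cycles * t)
    noise = rng.uniform(-1.0, 1.0, n)
    return sine_part + mix * noise, sine_part


def moving_average(x: np.ndarray, width: int = 15) -> np.ndarray:
    """A simple low-pass filter: replace each sample with the mean of its neighbourhood."""
    kernel = np.ones(width) / width
    return np.convolve(x, kernel, mode="same")


def rms(x: np.ndarray) -> float:
    """Root-mean-square size of an error signal."""
    return float(np.sqrt(np.mean(x ** 2)))


if __name__ == "__main__":
    for mix in np.linspace(0.0, 1.0, 6):  # hypothetical noise levels from pure sine to pure noise
        signal, sine_part = noisy_sinusoid(mix)
        raw = rms(signal - sine_part)
        filtered = rms(moving_average(signal) - sine_part)
        print(f"mix={mix:.1f}  error before filter={raw:.3f}  after={filtered:.3f}")
```

A moving average is about the simplest filter there is; real communication systems use far more carefully designed filters, but the trade-off is the same: averaging suppresses fast random variation while mostly preserving the slower sinusoid.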

One such undesirable reality is consumer waste, which is conceptually filtered out through the out-of-sight, out-of-mind tactic of landfills. Even when it is in sight, such as in a city with prevalent litter, trash may be filtered out cognitively, just as ambient noise is. The grocery store shopping and landfill scenes serve to illustrate this concept. The timelapse style emphasizes the speed and mechanical quality with which colorful rectangular food items are bought and massive trash piles are compressed. Within the system of food consumption, this pair of videos juxtaposes the familiar experience of filling up a cart at the grocery store with the unfamiliar afterthought of where those consumables are disposed of.

The third scene takes influence from Edward Burtynsky’s photography of shipbreaking in countries like Pakistan, India, and Bangladesh, where a majority of the world’s ships and oil tankers are beached for material stripping. These countries are not abundant in metal mines, and their economies depend on receiving ships to recycle their iron and steel. The working conditions are highly dangerous, involving toxic material exposure and the regular demolition of heavy equipment, and they dehumanize workers and cause environmental damage. This video is contrasted with an advertisement for Carnival Cruise Line showing a family enjoying the luxury of vacationing at sea on a massive boat containing a waterpark and a small rollercoaster. This scene reveals the excessive leisure available to those in areas of abundance alongside the disposal process for these ships once they are no longer of use, further emphasizing the sentiment that ‘one man’s trash is another man’s treasure’.

The last scene includes a series of screenshots taken from Adobe Photoshop showing the digital transformation of a pregnant woman into a slim figure. If the human body is considered to have an ideal form, akin to the pure sinusoid, then manual and automated Photoshop tools provide ways to ‘clean up’ an individual’s appearance toward that desired form. With body image insecurity, obesity, and the prevalence of cosmetic surgery pervading the social consciousness of societies of abundance, this scene registers the ease with which these problems can be filtered away.

The choice of the drum and metronome as a control interface is designed to reconstruct the role that conformity plays in decision making and in exposure to alternate perspectives. I suspect that most users will hit the drum on beat because that is what sounds appealing and natural. Here the metronome represents the social systems underpinning how we form narratives around consumption and self-identity. With the design of this media system, it is possible for the user to experience only positive imagery, so long as they strike in phase with the metronome. But those daring to go against the grain and strike off beat are greeted by a multitude of undesirable realities. It is my hope that in participating with this media system, users realize the role that digital systems play in shaping perceptions, as well as how the style of our interactions alters what can be seen.

Resources used in this project:

- Makey Makey

- Snare Drum

- Copper Tape

- Drum Stick

- Isadora

- 10 hours of time

Media Artifacts Used:

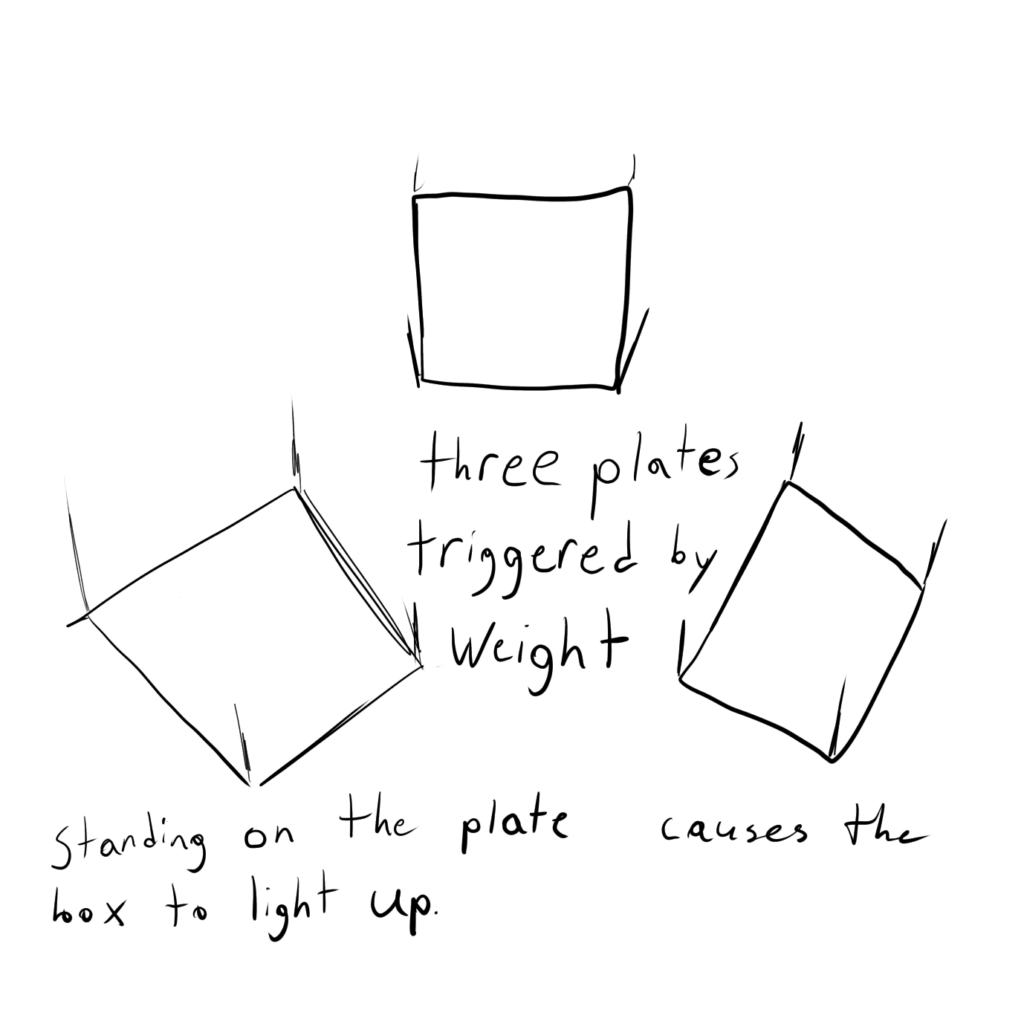

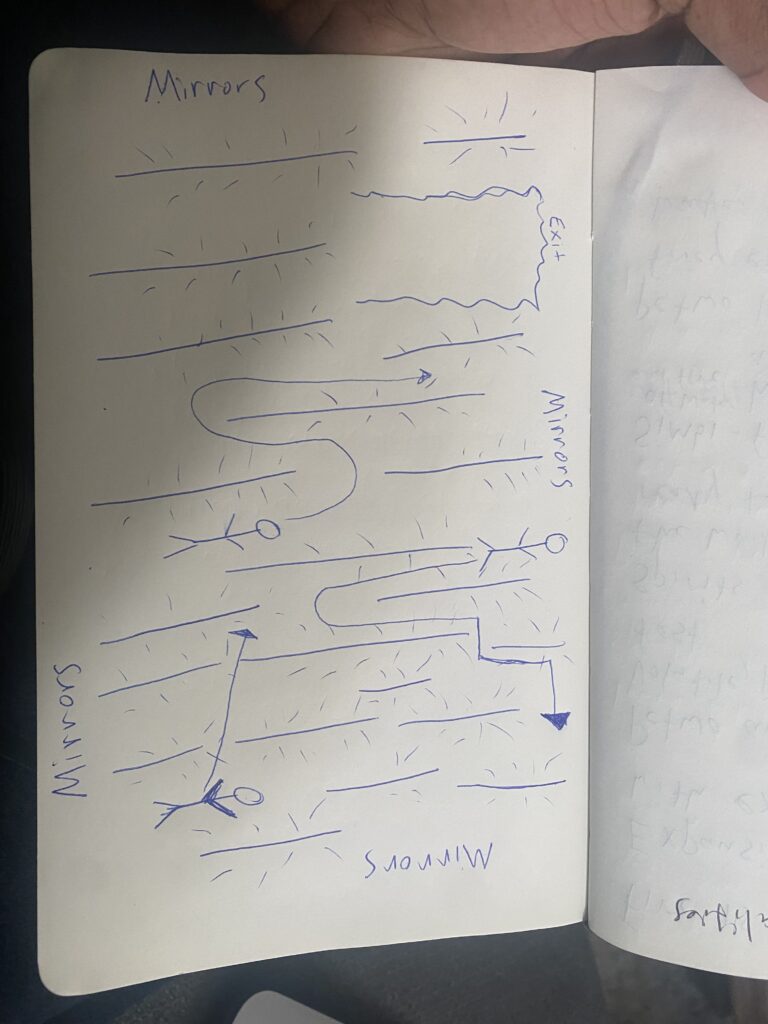

Pressure Project 2 – Escape Otherworld

Posted: February 8, 2024 | Filed under: Uncategorized

I went to Otherworld some years ago, so I was excited to go back. It’s a really strange space, but that’s what’s interesting about it, especially when I was able to find patterns or “figure out” a room. There were a few rooms that drew my attention at first. One was the mush-room (really funny joke). What I found most interesting, though, was the cow room, which had a very Legend of Zelda puzzle in it. Basically, there are six colored levers attached to a machine that is itself attached to the cow’s udders, and you need to pull the levers in a certain order to get an effect. It was interesting to see how people would solve this puzzle, if they did at all (a lot didn’t, mostly because they didn’t stay in the room long enough to realize that anything was afoot), but there were two notable approaches. The first was trial and error. People would start pulling random levers just to see if anything would happen, and eventually they would pull the correct one and something different would happen, which led them to find out that if you pull the levers in a certain order, they don’t make the cow angry. Through trial and error they figured out the combination, and the cow did fun things.

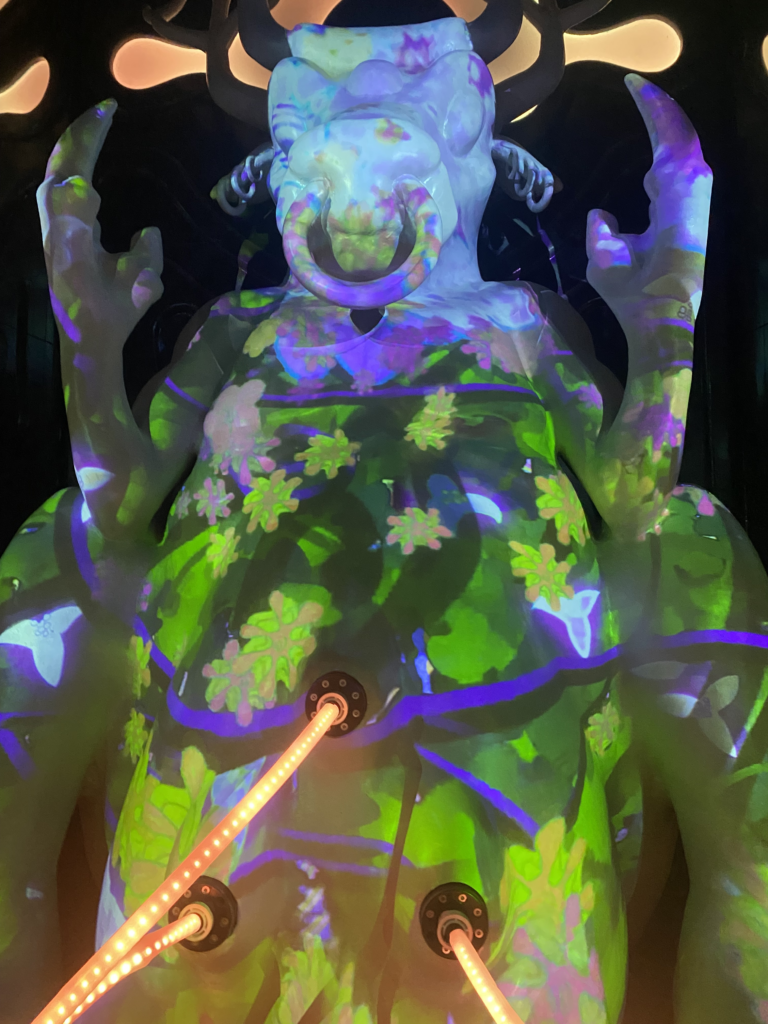

An image of the cow doing fun things!

However, some people also noticed this panel on the back wall with lights of different colors that would come on in a certain pattern. Someone then put together that the color of the lights was the same as the color of the levers and from there they found out that the light pattern was the order of the levers, which is not something I noticed at all but was really neat.

The interesting hint light panel that I didn’t notice because I’m not a true gamer
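The lever puzzle behaves like a sequence lock, and the light panel is effectively printing its combination. A minimal Python sketch, assuming that a wrong pull angers the cow and resets progress, and that completing the sequence triggers the reward; the lever colors and their order are hypothetical, since the real combination isn’t recorded here.

```python
class LeverPuzzle:
    """Sequence lock: levers must be pulled in one fixed order.

    Assumption: a wrong pull angers the cow and resets progress to the start.
    """

    def __init__(self, solution):
        self.solution = list(solution)
        self.progress = 0  # how many correct pulls in a row so far

    def pull(self, lever: str) -> str:
        if lever != self.solution[self.progress]:
            self.progress = 0
            return "angry"
        self.progress += 1
        if self.progress == len(self.solution):
            self.progress = 0  # reset so the next visitor can play
            return "solved"    # the cow does fun things
        return "ok"


# Hypothetical order: the real lever colors and combination aren't recorded here.
puzzle = LeverPuzzle(["red", "blue", "green", "yellow", "purple", "orange"])
```

Under this model, trial and error works because each correct pull returns a different response than a wrong one, while reading the light panel simply hands the visitor the whole `solution` list at once.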

The room I actually want to talk about though because I can’t stop thinking about it is one of the first ones that people encounter. It’s right in the beginning and it’s this plainly sci-fi looking room with a bunch of panels and buttons.

This is the panel room, I stole this image because I didn’t have any good photos of this room and I didn’t expect to be talking about it but here we are: https://www.citybeat.com/cincinnati/inside-columbus-otherworld-an-immersive-choose-your-own-adventure-sci-fi-art-installation/Slideshow/12234210

At first, I wasn’t really interested in this room. For starters, the first button I pressed made all the screens display a rick roll, and I almost cried. In general, most of the panels weren’t related, from what I could tell. Some of them had small games; one had a game where you have to time pressing a button to cause a system overload. Some had videos of a scientist talking about Otherworld itself, which are interesting from a lore perspective but weren’t super interesting from the experience side (most people just watched them, which isn’t very interesting to describe haha). There was one panel that I saw a kid playing with that had a camera view of another room, specifically the mouth cave room, and when the button was pressed a sound effect like a burp would play. That I thought was very interesting. I tried playing with the panel myself; apparently you can change the sound effect by turning a knob. What I wanted to know is whether those sounds were being played to people inside the mouth room. Any time one of the sound effects played, I stared intently at the panel to see if anyone would react. Unfortunately, basically no one had any sort of interesting reaction, so I couldn’t really tell if the sounds were going through. I went to the mouth cave room myself to see if I could hear anything (I probably sat in that room for like 10 minutes too), but I didn’t hear any noises. I don’t know if this was because no one was playing sounds or because the sounds aren’t being projected, but I’m like 90% certain that the sounds matter. Even if they don’t, the people I saw using the panel had a sort of devious way of playing, like they were messing with others in the experience.

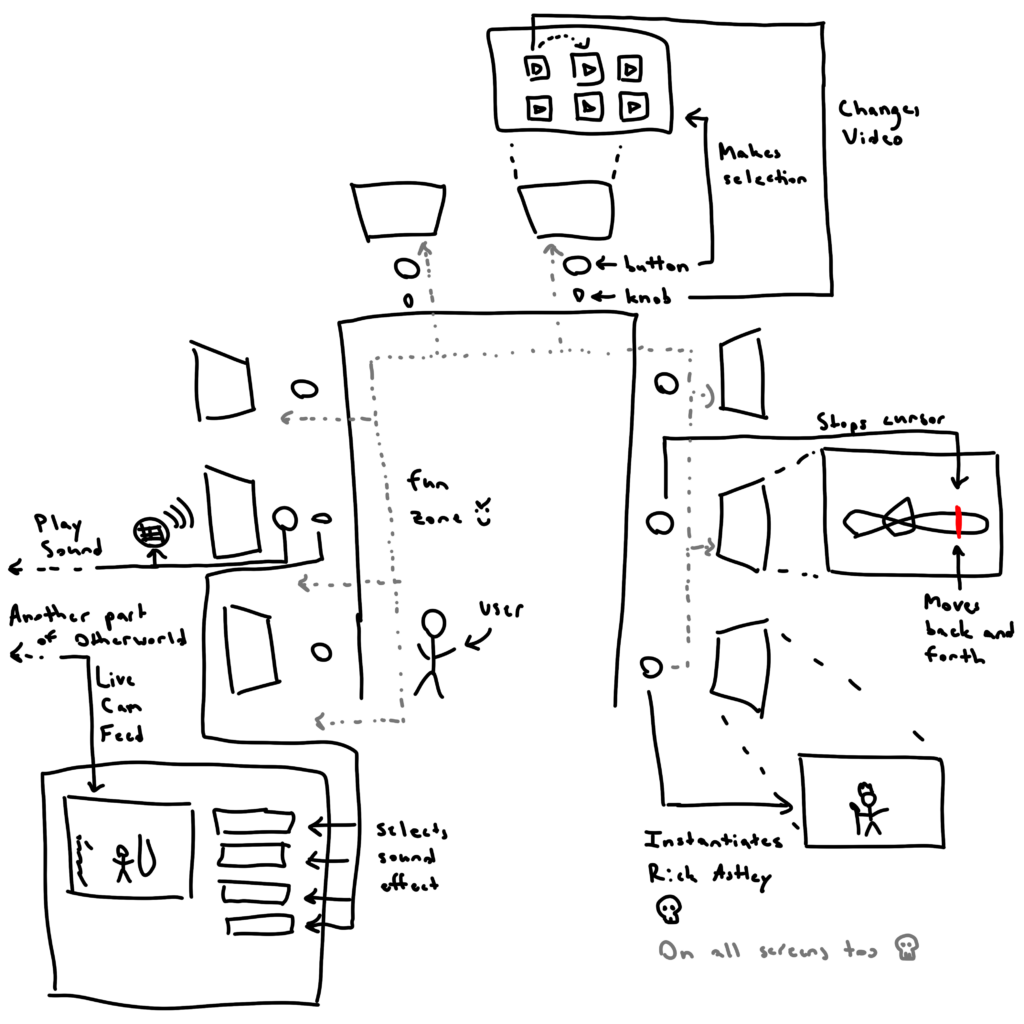

The original room, and a little about how it works. Not super complicated all things considered.

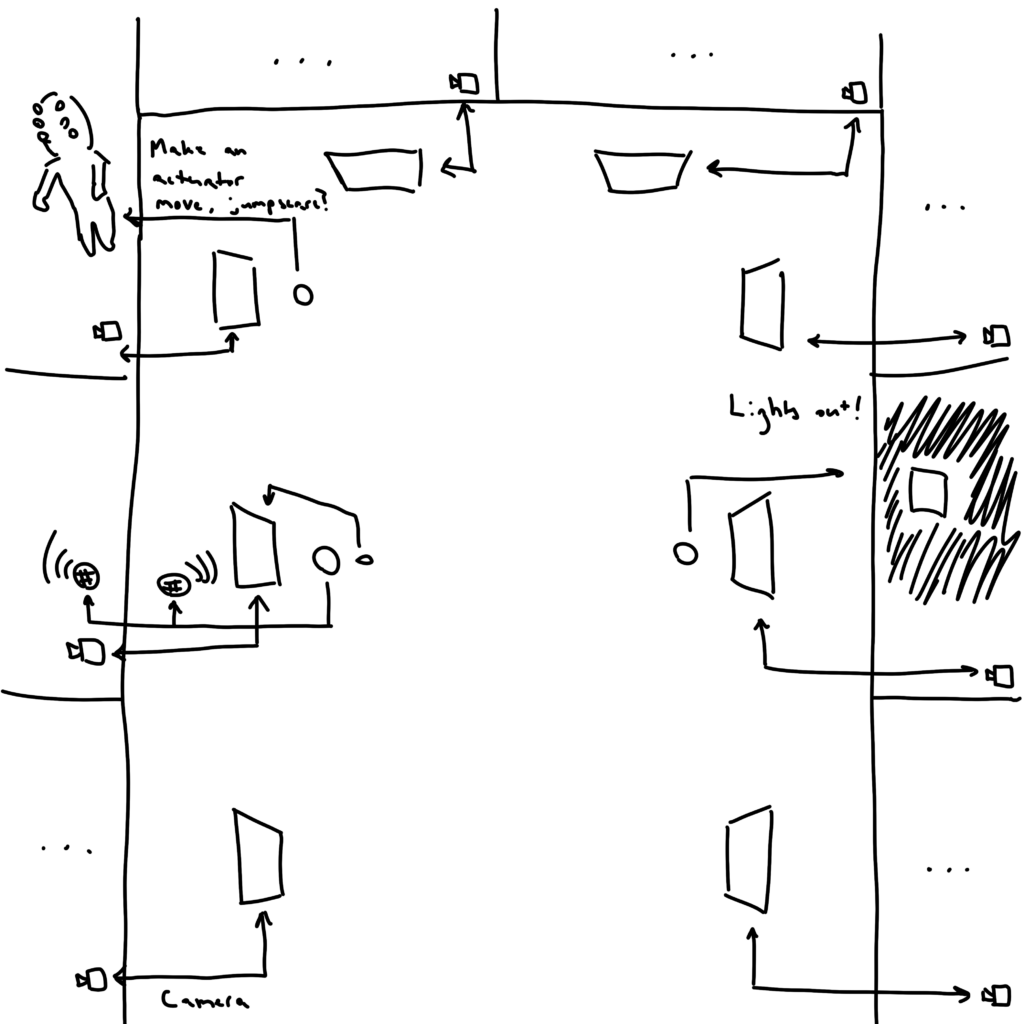

Regardless, I started to think about this room as a sort of control room, for the whole experience really. I didn’t see any other panels in this control room that linked to other rooms, but I started to think about what it would be like if this room were connected to all the other ones, so that, in a reverse Five Nights at Freddy’s or regular Hunger Games sort of way, you could make different things happen in other rooms. As I explored on my own, I started to see places where the room could definitely have jump-scared me in a haunted house sort of way. As I was looking through drawers in this room, I felt like this guy could have jumped out and grabbed me.

Similarly, while I was in this gothic/Lovecraftian spider room, I had a chilling moment where I thought I remembered this bloke being an animatronic that moves around, but it was still. Then, when I turned around to focus on a different part of the room, I heard it move, and just as I turned back I could barely see it move before it stopped again, and it never moved again the entire time I was there. What if people could mess with others in these really minute ways? It would be kind of horrible, but it would be fun.

it looks like a Bloodborne boss ngl, this thing scared me

The devious room where you play nasty pranks on people

I also started thinking about some of the puzzles I saw and the ways I saw couples collaborating in these rooms, and then a different idea came into my head. It happened while I was trying to explain what Otherworld was and one of my friends said: “It’s like an escape room except the goal isn’t to escape.” How would Otherworld function as an escape room of some sort? The person in the control room is almost like the game master. I also remembered a strange moment in the aforementioned spider room where the antique phone started ringing, some random guy came in, answered it (as if he knew this phone would ring), and then left. Aside from the fact that it was weird, it could be interesting to have connections between the rooms; maybe the phone is connected to the room with the bats, and you have to speak into one of their ears to communicate with the spider room phone? What if Otherworld were this Toolmaker’s paradigm fever dream where each person is put in a different room and has to figure out how to communicate with the others? It would be interesting to see a place like Otherworld or Meow Wolf with this alien aesthetic, but where the goal is to solve some greater puzzle instead of the puzzles being optional or the room interactions being disconnected.

Otherworldly Experience

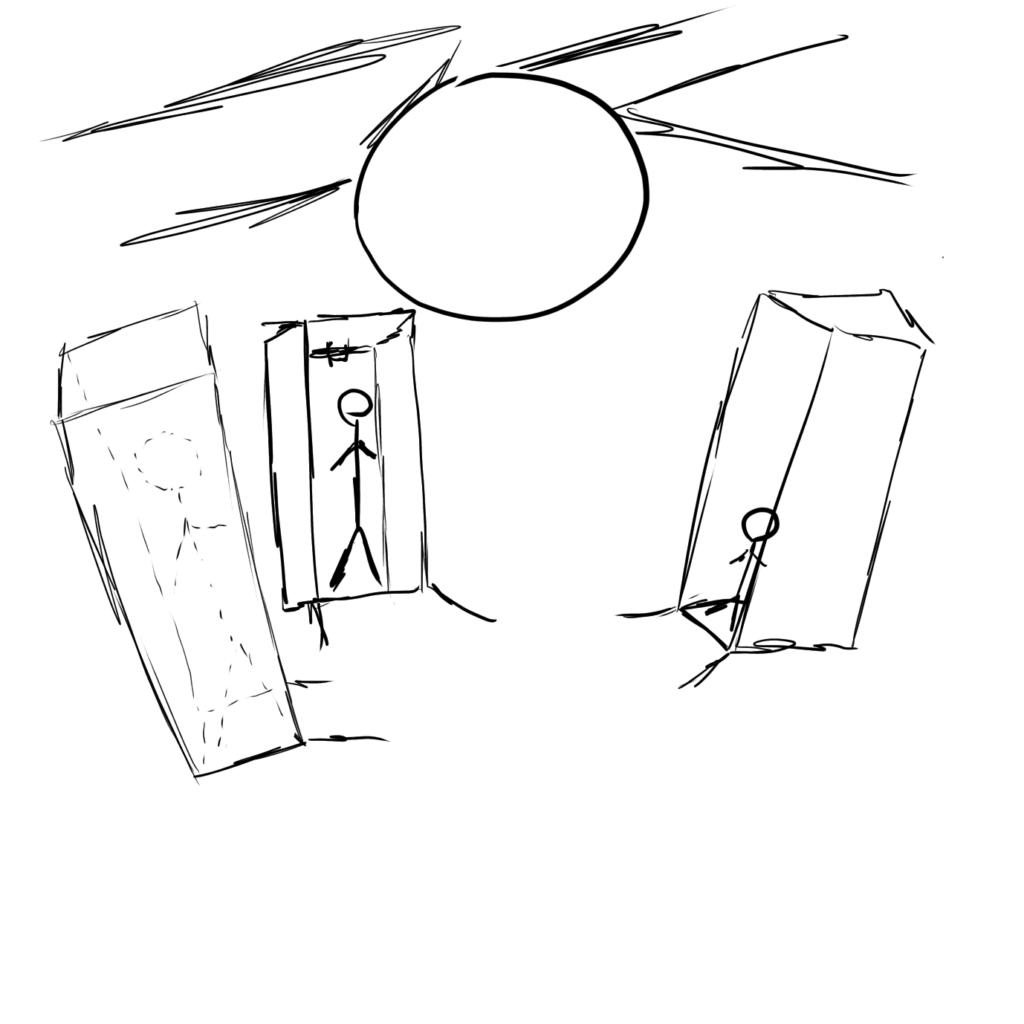

Posted: February 8, 2024 | Filed under: Uncategorized | Tags: Pressure Project, Pressure Project 2

This room is one of the first rooms I experienced. It is immediately after the room that Rick rolls guests and lets them mess with other guests throughout the facility. It is a dimly lit room with three ominous, human-sized capsules. They all surround a giant sphere suspended in the air, with what look like wires leading into it from each of the capsules.

The capsules are really eye-catching, so many guests who entered the room immediately walked into them to check them out. Then they hear a noise in the pod and see its side light up. This prompted nearly all of them to go looking for other people nearby to fill the other two pods and see what might happen. Once three people are standing in the pods, the pods begin to flash black and white, as do the lights leading to the orb. At the same time, a noise that sounds like static electricity whirls around the room. Finally, the room goes dark and quiet, and then the guests standing in the pods are sprayed with gusts of air. Caught off guard, the guests scream or flee from the pods.

There is not much I would want to redesign with how the system is able to capture people’s attention and clearly convey what needs to be done to activate. So, what I would add would be some sort of fog like effect that is also ejected with the puff of air as well as maybe tone down the air pressure, but keep it spraying for longer. This would hopefully make it a bit less of a jump scare and make it seem cooler when the guest leaves the pod.

Pressure Project 2

Posted: February 7, 2024 | Filed under: Uncategorized

Otherworld was quite an experience. I was intrigued from the start: there was a fractal projection surface outside of the exhibit spaces that I didn’t notice until light hit it and revealed the perfectly projection-mapped textures on each triangular piece. I knew I was in for a treat.

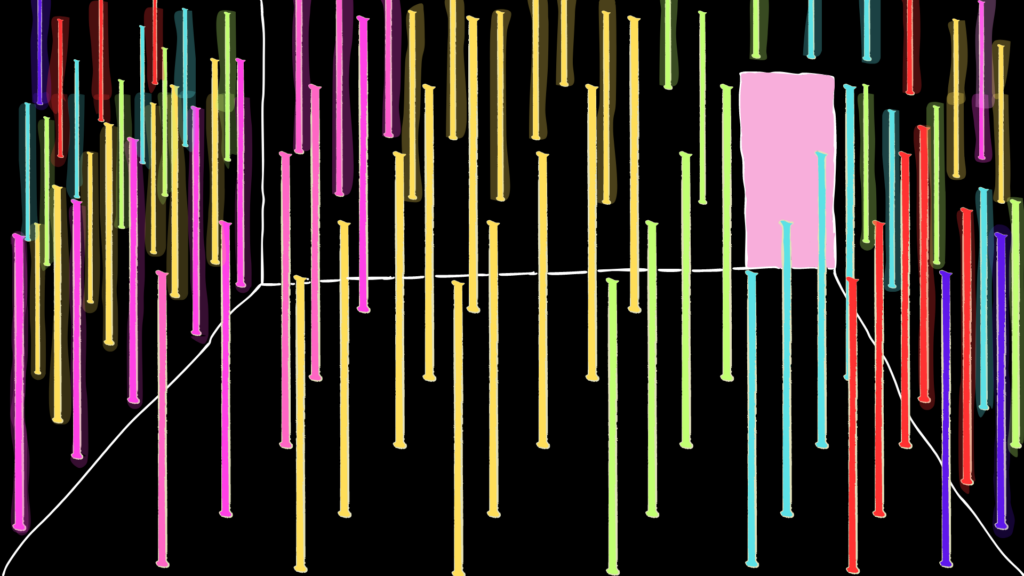

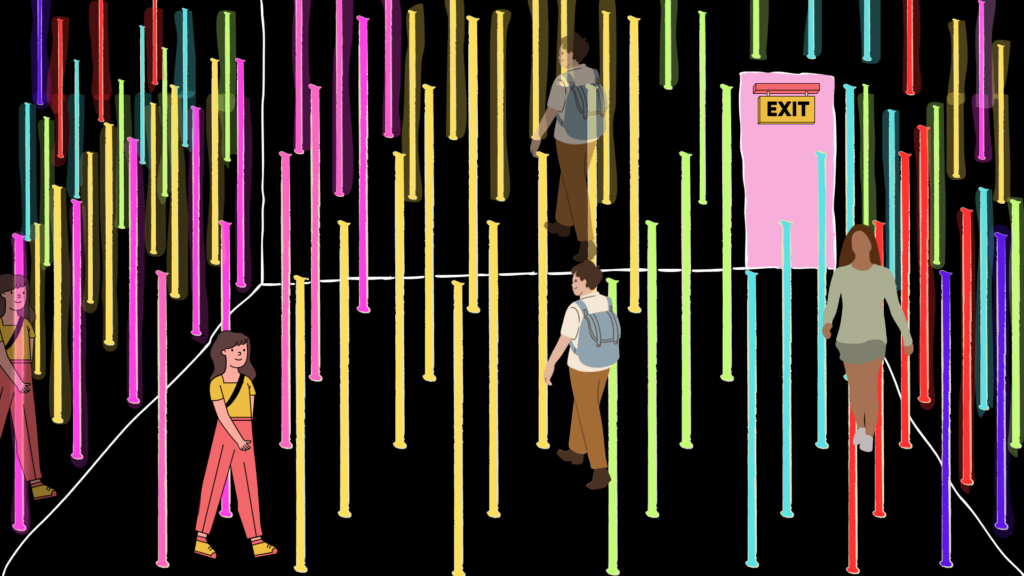

I was particularly interested in the spaces that felt like a portal, infinite, almost like a forest. The room that caught my attention was what I would call the infinite light forest. There were mirrors on all of the walls, with poles of light about 8 to 10 feet tall that shifted colors. There was also an ambient, space-like musical track playing from hidden speakers that sparked a sense of curiosity. As I took a closer look, I saw individual wires above connected to each light for power and for signaling the color change. When I first entered the room, I thought touching the poles initiated some part of the experience, and as I observed, I noticed other folx thought the same. This exhibit turned out to be an ongoing light show that could seemingly go on forever.

People moved in-between the lanes of light and around the light pole fixtures. There was no particular pattern or path for one to take within this experience. I enjoyed how you were able to journey at your own pace to take in the vastness of the moody space.

I started to think about how I would reimagine this space if it were my project. I would shift the space into a digital tree forest.

First, I would change all of the light fixtures to trees, keep the mirrors for the infinite effect, and add an interactive touch element to the bark that you must stay connected to in order to learn facts about the state’s native trees. In constructing the space, I would route the power and wires from below, like a root system, versus from above. Second, I would shift the sound in the space to wind blowing through trees and leaves crunching under your feet. For my last augmentation, I would add a motion sensor that activates wind (a fan) as people move through the space, so they feel as if they are in a surreal natural environment.

Pressure Project 2

Posted: February 5, 2024 | Filed under: Uncategorized

For Pressure Project 2, the assignment was to go to Otherworld or some other place where you could interact with something. I decided to go to Otherworld because I had never been and was intrigued by what I was going to experience. The instructions were:

– Choose one of those rooms and take notes/ pics

– Evaluate how the interaction is happening. How do I think it works?

– Observe what people are doing and how they are interacting with it/ experiencing it

– Suggest an EVIL or GOOD option for it (tweak the system/ twist their design)

I chose one of the first rooms I walked into, a room full of colorful light poles with mirrors on each wall. There may have been 50 poles in the room, all the same size, and they gradually changed colors. Because the walls were mirrors, it looked like there was an infinite number of light poles in the room. I couldn’t quite figure out the interactivity of the light poles beyond people walking through the space. At first I thought there was a correlation between walking past the light poles and the colors changing, but it wasn’t obvious enough for me to believe that theory. This is my drawing of the room.

As a suggestion for this room, I have a few things.

- I would add a reactive device to the poles that can detect body heat or movement. If someone walks past that pole the color of the pole drastically changes and/or changes to white when you’re near it.

- It would be cool to add a fan to the light poles so that when you pass through a lane of poles, you feel a gust of air on your skin (I would do this similarly with a light mist too!)

- Lastly, I would add a heat and/or touch sensor to the light poles, so if you touch a pole it gradually changes color. When you let go, it goes back to its original color.
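The “gradually changes, then returns when you let go” behavior in the last suggestion can be sketched as easing the pole’s color a fixed fraction of the way toward a target each frame. In this Python sketch, the touch input is just a boolean, and the colors, frame count, and easing rate are all hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def step_color(current: RGB, base: RGB, touched: RGB, touching: bool,
               rate: float = 0.1) -> RGB:
    """Move the pole's color a fraction `rate` of the way toward its target each frame.

    While touched, the target is the touched color; once released, the pole
    eases back toward its original (base) color.
    """
    target = touched if touching else base
    return tuple(c + rate * (t - c) for c, t in zip(current, target))


if __name__ == "__main__":
    base, touched = (0.0, 80.0, 255.0), (255.0, 255.0, 255.0)  # hypothetical: blue pole fades to white
    color = base
    for frame in range(60):
        color = step_color(color, base, touched, touching=frame < 30)  # hold for 30 frames, then let go
    print(tuple(round(c) for c in color))
```

Easing by a fraction of the remaining distance makes the change fast at first and gentle near the end, so a quick touch produces a visible tint while a long hold settles fully on the touched color.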

PP2 | Otherworld Luminous Mushroom Garden

Posted: February 5, 2024 | Filed under: Uncategorized

Of the numerous interactive environments at Otherworld, the exhibit that caught my interest most was what I interpreted to be a mushroom garden. It comes just before the exhibit with the mechanical feeding room for the bull that has pyramid-shaped udders and groans at you when you enter the wrong feeding sequence. This room consists of an elegant three-starred archway with three groupings of mushroom-capped surfaces sitting above planters. All of these illuminated surfaces are animated by carefully placed, angled, and programmed overhead projectors, giving the environment a feeling that it is alive and in motion. This is not unique, as most of the exhibits at Otherworld rely on projection mapping techniques; however, what stood out to me was its interactivity.

As demonstrated in the videos below, there are spinning colored discs mapped outside each mushroom cap which, when stepped on, cause a flood of color to spill outwards onto the floor. The structure is more reminiscent of gas diffusion, such as smoke, flames, or fog, due to its speed of expansion, geometric structure, and color. Although I could not tell precisely how the system worked, I imagine there was some camera or other sensing element that could detect how much of each spinning disc’s color was visible. When your foot covers the disc, it triggers the color to flow, so I presume the system has a threshold at which it detects a shadow over the disc. Further, each flow pattern was unique, meaning that no two times you stepped on the disc would you observe the same structure or flow. To me this suggests that some random variable, perhaps taken from the environment, governs how the light will flow. I am familiar with these types of flow generation techniques, such as this reaction-diffusion generator made by Karl Sims https://www.karlsims.com/rdtool.html, which is based on mathematical models of physical phenomena https://en.wikipedia.org/wiki/Reaction%E2%80%93diffusion_system. While the installation probably doesn’t use as complex a mathematical model as these, it still suggests that some math is involved in computing how the light flows across a surface based on an initial random variable.

As a study in natural interaction, I observed several people as they entered the room and played around. Most seemed impressed by the scenery and were visually engaged by the Moiré-like patterns visible on the mushrooms; I could tell this by how the setting drew in their attention. However, most people didn’t notice the interactive element, as they didn’t step on the spinning light discs. One person did realize the hidden interactivity and chose to step on all of the discs. I found it interesting that she chose to jump from one disc to the next, since they were spaced within hopping distance. This reminded me of a game I’d play with my sister as a child called ‘the floor is lava’, in which we would try to move through a room stepping only on furniture and not the carpet. As a benevolent alteration to this interactive design, it’d be interesting to track human movement from one disc to the next. Perhaps if a person can jump from one end of the room to the other while triggering each of the discs, the room could acknowledge this accomplishment, as is done in the next room when the right sequence is fed to the bull’s udders. This may be technically challenging given the short detection time a sensor has when someone is hopping quickly through an environment. Secondly, there would be a challenge in synchronizing the detection of events from multiple cameras; yet, I bet there is already a centralized media server acting as the master to its various child projectors and sensors.

Pressure Project 1 – Fireworks

Posted: January 30, 2024 | Filed under: Uncategorized | Tags: Interactive Media, Isadora, Pressure Project, Pressure Project One

When given the prompt “Retain someone’s attention for as long as possible,” I began thinking about all of the experiences that have held my attention for a long time. Some would be hard to replicate, such as a conversation or a full-length movie. Others would be easier; I figured interacting with something could retain attention and be simpler to implement. Now, what does that something do so that people want to repeat the experience again and again and again? Some sort of grand spectacle that is really shiny and eye-catching. A fireworks display!

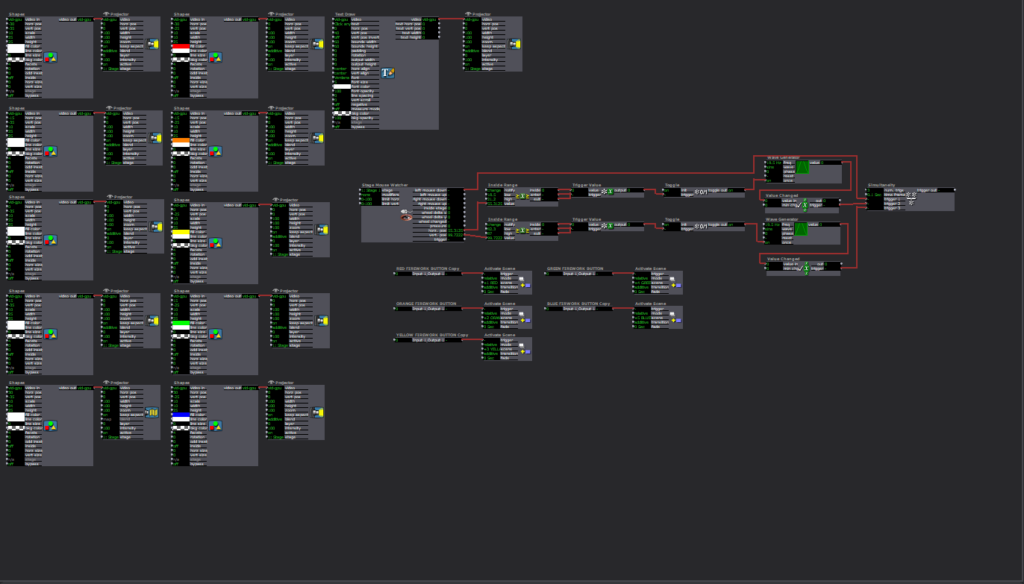

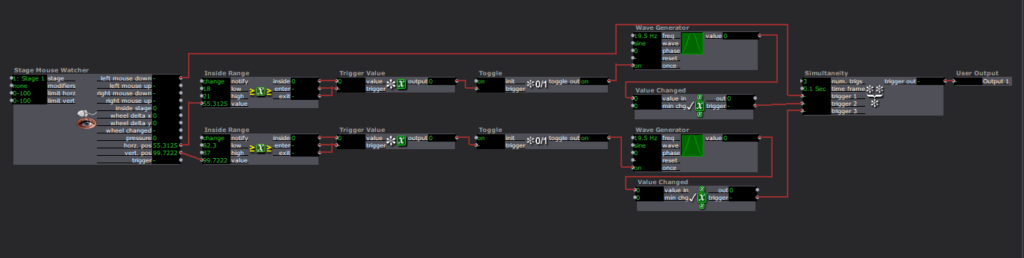

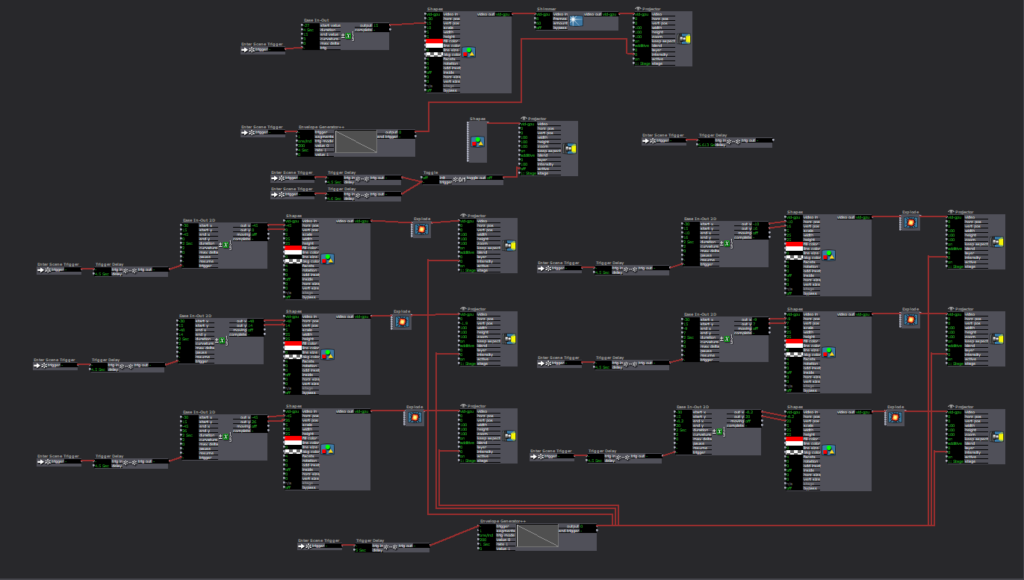

The Program

The first scene sets up the image that the user always sees: the firework “barrels” and the buttons to launch the fireworks.

The buttons were made as a custom user input function. I did not know there was a control panel that already has preset buttons programmed; if I had known, I could have saved myself 2 hours of experimenting. Here is how each button works. The Stage Mouse Watcher checks the location of the mouse on the stage and whether the mouse is clicked. Two Inside Range actors then check where the mouse is on the x and y axes. If the mouse is within the preset range, it fires a Trigger Value actor that feeds a Toggle actor. The Toggle actor turns a Wave Generator on and off, and the Wave Generator sends its value to a Value Changed actor. If the x-bounds, y-bounds, and mouse-click triggers all activate at once, the patch jumps to a scene that launches a firework.
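The three-way condition (inside the x range, inside the y range, and clicked) amounts to a plain rectangular hit test. This Python sketch collapses the Trigger Value, Toggle, Wave Generator, and Value Changed chain into a single boolean check, which is a simplification, and the button names and coordinates are hypothetical.

```python
from typing import Dict, Optional, Tuple

# (x_min, x_max, y_min, y_max) for each button in stage coordinates.
# Hypothetical values: the actual button positions and coordinate range aren't given.
BUTTONS: Dict[str, Tuple[float, float, float, float]] = {
    "left": (-40.0, -20.0, -45.0, -35.0),
    "center": (-10.0, 10.0, -45.0, -35.0),
    "right": (20.0, 40.0, -45.0, -35.0),
}


def inside_range(value: float, low: float, high: float) -> bool:
    """Counterpart of one Inside Range check on a single axis."""
    return low <= value <= high


def pressed_button(x: float, y: float, clicked: bool) -> Optional[str]:
    """Return the button whose x range, y range, and click condition are all true at once."""
    if not clicked:
        return None
    for name, (x_min, x_max, y_min, y_max) in BUTTONS.items():
        if inside_range(x, x_min, x_max) and inside_range(y, y_min, y_max):
            return name
    return None
```

For example, `pressed_button(-30, -40, True)` returns `"left"`, and the same position without a click returns `None`. Each returned name would then map to the scene for that firework.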

Each scene the buttons jump to is set up as a unique firework pattern. The box launches a firework to a set location, and after a timer the sparkle aftereffect shows. When that finishes, the scene ends.

Upon reflection, one thing that could have helped retain attention even longer would have been randomizing the fireworks’ explosion patterns. This could have been done with the random number generator and value scaler actors, changing where the sparkle explosion effect ends up and how long the fireworks last in the air.
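As a rough Python sketch of that idea: draw a raw random value, then linearly rescale it into the range each parameter needs. The 0 to 100 input range and the output ranges for position and air time are assumptions, not values from the patch.

```python
import random
from typing import Dict


def scale(value: float, in_min: float, in_max: float,
          out_min: float, out_max: float) -> float:
    """Linearly map `value` from [in_min, in_max] onto [out_min, out_max]."""
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def random_firework(rng: random.Random) -> Dict[str, float]:
    """Pick a random burst position and air time for one firework.

    Assumes the random source produces 0-100; the output ranges are hypothetical.
    """
    raw_x, raw_y, raw_t = (rng.uniform(0, 100) for _ in range(3))
    return {
        "x": scale(raw_x, 0, 100, -40, 40),
        "y": scale(raw_y, 0, 100, 10, 45),
        "air_time_s": scale(raw_t, 0, 100, 1.5, 4.0),
    }


if __name__ == "__main__":
    rng = random.Random()
    for _ in range(3):
        print(random_firework(rng))
```

Keeping the random draw and the scaling separate mirrors the two-actor setup: the generator only supplies variety, while the scaler keeps every burst inside the visible sky.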

Pressure Project 1 | Neon Fragmentation

Posted: January 30, 2024 | Filed under: Uncategorized

This piece was inspired by my personal interests in jazz, feedback systems, and media archeology. I’ve played saxophone for 10+ years and hold jazz musicians in high regard as fearless explorers of sonic harmony, melody, and rhythm. Their artform requires a fine-tuned motor pathway connecting the brain and fingers to enable quick musical decisions within an improvisatory ensemble of horns, drums, pianos, and strings. The interactions between musicians in an ensemble are unique and rely on auditory feedback to create an artform bigger than any one contributor. In addition to jazz, I’ve worked with electronic feedback systems and learned to respect the impact their discovery has had on much of modern technology. These technological systems form an ecological relationship with human culture, which is also receptive to feedback. The process of media archeology can help to scrutinize and analyze dominant narratives about technology and its relationship to culture.

For this project, a computational multimedia software called Isadora was used to devise an audiovisual feedback loop. The system takes a video file as input and passes it through several stages of video mixing and feature analysis to synchronize low- and high-frequency audio tone generation with video splitting. Computer vision plugins were used to detect relative motion and color within the input video frame. The intensity of detected motion drove the quantity and frequency of video splitting in the X and Y directions. Additionally, the RGB color proportions drove the emission of audio tones: red produced frequencies between 30 and 400 Hz, while green and blue produced frequencies between 1 and 2 kHz.
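A rough Python/NumPy sketch of the color-to-tone mapping described above, assuming that “proportion” means each channel’s share of the frame’s total RGB intensity and that the share maps linearly onto each frequency band. The motion-to-splits mapping and its maximum of 8 tiles per axis are hypothetical. This only computes control values; it doesn’t reproduce the Isadora computer vision plugins or the tone generators.

```python
import numpy as np


def color_proportions(frame: np.ndarray) -> np.ndarray:
    """Each channel's share of the total RGB intensity in an H x W x 3 frame."""
    totals = frame.reshape(-1, 3).astype(float).sum(axis=0)
    total = totals.sum()
    return totals / total if total > 0 else np.zeros(3)


def lerp(p: float, low: float, high: float) -> float:
    """Map a proportion p in [0, 1] linearly onto [low, high]."""
    return low + p * (high - low)


def tones_for_frame(frame: np.ndarray) -> dict:
    """Frequencies in Hz driven by the frame's color balance."""
    r, g, b = color_proportions(frame)
    return {
        "red_hz": lerp(r, 30.0, 400.0),        # low band from the post
        "green_hz": lerp(g, 1000.0, 2000.0),   # high band from the post
        "blue_hz": lerp(b, 1000.0, 2000.0),
    }


def split_count(motion: float, max_splits: int = 8) -> int:
    """Hypothetical: map motion intensity in [0, 1] to a tiles-per-axis count."""
    return 1 + int(round(float(np.clip(motion, 0.0, 1.0)) * (max_splits - 1)))
```

Under these assumptions, an all-red frame drives the red tone to the top of its band (400 Hz) and leaves green and blue at 1 kHz, while a neutral gray frame puts every channel one third of the way up its band.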

This piece takes influence from early 1920s montage film technique, including Fernand Léger’s Ballet Mécanique. The effects of the industrial revolution are visualized through mechanical systems held at the same level of authorship as humans. Humans are depicted with cyclic motions, such as a woman on a swing evoking the image of a pendulum. The rapid frame changes are accompanied by a frenetic soundtrack synchronized to the rhythm of industry. The rapid montage clips remind me of jazz because of their quick delivery of stimuli transcending the traditional mental faculties of comprehension. It seems to me that Léger is playing around with visual jazz in the form of semiotics. When passed through my generative media system, the quick color and shape changes lend themselves well to conversion into high and low frequency tones. To the right set of ears, it’s difficult to tell the difference between abstract jazz and machine noises.

The assembly-line style of interaction between humans and machines during and after WWII is then expressed through a 1950s supermarket film. The woman in the store is surrounded by an array of homogeneous consumables that funnel smoothly down a conveyor belt, into her car, and then presumably into the home. There is an element of time ticking in the store, from the mechanical pressing of keys on the cash register to the conducting of cars by neon traffic light systems. This exchange of information and household goods all flows to the beat of machine inputs and outputs.

Human-machine stimulus and interaction is even more pronounced in the 1970s Las Vegas Strip. Suburban tourists have gathered in a neon Wild West in search of cowboys and gold nuggets. Immersed in an ecosystem of electric light and casinos, tourists can engage, at a safe distance, in a symbolic exchange of money and neon. The grand advertising spectacles erected in the desert are now more closely associated with sinful red-light districts than with their initial connotation of technological optimism.

I hope that this piece brings attention to the interrelations of humans and machines in the 20th century. The aesthetic of multivid splitting by computer vision, along with the foreign sounds, helps bring these media artifacts into the vantage of the modern algorithmic viewer. Just as machines influenced human perception and behavior, so too do algorithms.

Media artifacts: