Bumping up Scrying- Cycle 3 post

Posted: August 28, 2024 Filed under: Uncategorized

Came for the Bowie Vibes, Stayed for the Lauper

Posted: August 27, 2024 Filed under: Uncategorized

Duque bumping old discussion

Posted: August 26, 2024 Filed under: Uncategorized

https://dems.asc.ohio-state.edu/wp-admin/post.php?post=3321&action=edit

https://dems.asc.ohio-state.edu/?m=201911

Posted: August 22, 2024 Filed under: Uncategorized

Cycle 3 | 400m Dash | MakeyMakey

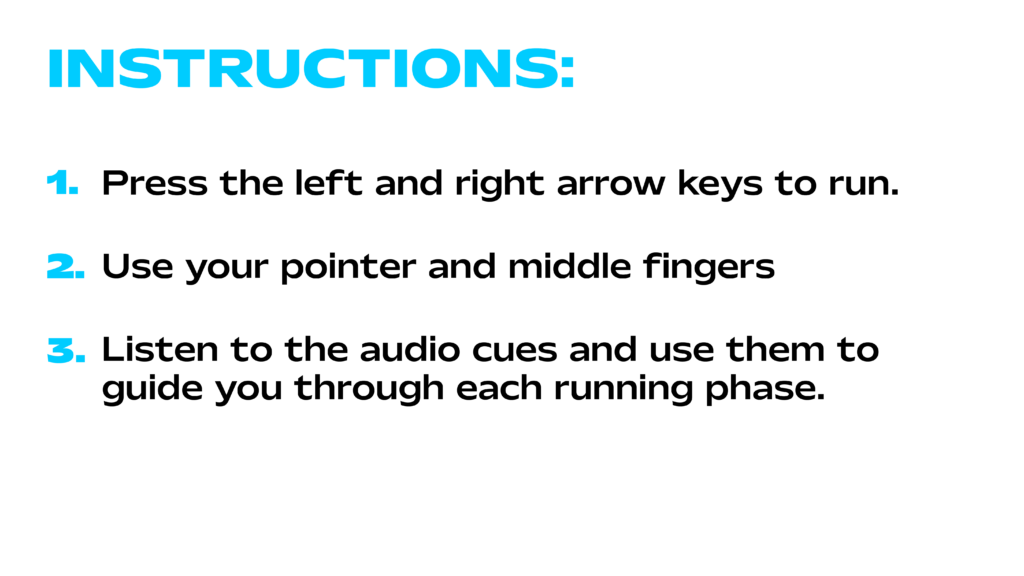

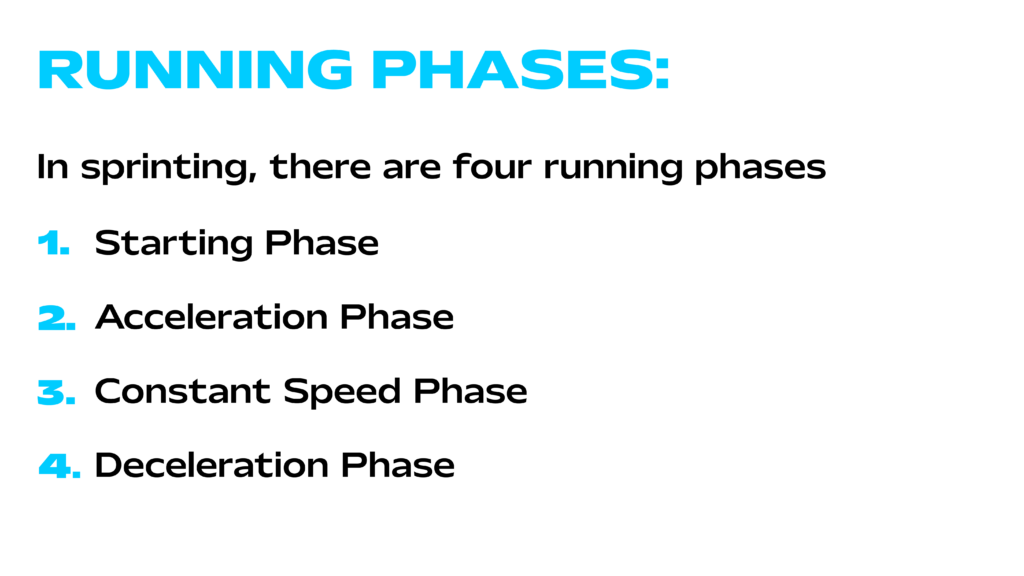

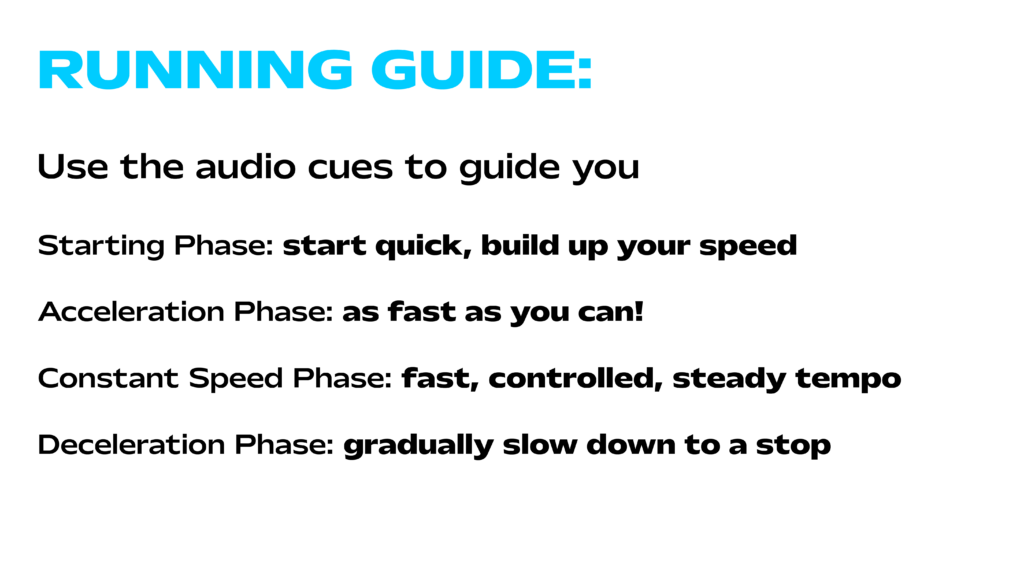

Posted: May 6, 2024 Filed under: Uncategorized

To continue with the cycles, for cycle 3 I chose to incorporate a MakeyMakey and foil to create a running surface for participants, replacing the laptop’s arrow keys. I expected the setup to be relatively straightforward. In previous cycles, I had trouble with automatic image playback, so I decided to make short videos in Adobe Express (which is free). Using this platform, I created the starting video, the audio cue video, and the 400m dash video with the audio cues.

After finalizing my videos and audio cues to my satisfaction, I encountered difficulties getting the MakeyMakey foil to function properly. Through various tests, troubleshooting, and help from Alex, I discovered that participants needed to hold the “Earth” cord while stepping on the foil. Additionally, they needed either sweaty socks or bare feet to activate the MakeyMakey controls. I copied the 400m dash race onto two separate screens and arranged two running areas for my participants. For the two screens and separate runs to work, I had to devise race logic with user actors.

During the presentation, I encountered technical difficulties again. It became apparent that because the participants possibly had sweaty feet, the foil was sticking to them, which kept the MakeyMakey control activated and caused issues with the race. We quickly realized that I needed to tape down the foil for the race to function properly.

If I were to work on another cycle, I would prioritize ensuring that the running setup functions smoothly and reliably, with both participants able to hear audio from their devices. Additionally, I would expand the project by incorporating race times, a running clock, and possibly personalized race plans tailored to participants’ goal race times or their best race times.

Cycle 2 | 100m Dash

Posted: May 5, 2024 Filed under: Uncategorized

I found myself lacking motivation for my cycle 2 idea, feeling that sticking with my cycle 1 concept was becoming forced. After a discussion with Alex about my thesis interest, we explored some ideas I had been considering. We thought it might be engaging to develop a running simulation where participants experience a first-person sprint, aided by audio cues for speed adjustments. For cycle 2, we decided participants could use their middle and pointer fingers along with the arrow keys to simulate the run, with each button press incrementally advancing the video.
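As a rough illustration of the "each button press advances the video" idea, here is a minimal Python sketch. The project itself was built in Isadora, so everything here is an assumption made for illustration: the `SprintVideo` name, the use of the left and right arrow keys, the step size, and treating playback position as a 0 to 100 percentage.

```python
from dataclasses import dataclass


@dataclass
class SprintVideo:
    """Tracks playback position as a percentage of the video (0-100)."""

    step: float = 1.0      # assumed percent advanced per key press
    position: float = 0.0  # current playback position in percent

    def press(self, key: str) -> float:
        """Advance one step for an arrow-key press; ignore any other key."""
        if key in ("left", "right"):
            # Clamp so the video never runs past its end.
            self.position = min(self.position + self.step, 100.0)
        return self.position


video = SprintVideo(step=2.0)
for key in ["left", "right", "left", "space"]:
    video.press(key)
print(video.position)
```

With a fixed step per press, faster tapping moves the video faster, which is what lets the finger "run" stand in for sprint speed.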

During the presentation, I encountered some technical issues. I realized I needed a better method for sound implementation since I was relying on GarageBand on my phone, which was not effective because the first-person POV 100m dash video progressed too rapidly. This led to my first feedback suggestion: instead of a 100m dash, a longer race would better showcase the audio cues, allowing participants more time to hear them. Overall, I was pleased with the feedback. Hearing my classmates’ responses to the experience, I decided that for cycle 3, I would incorporate a MakeyMakey and foil to create a running surface for participants, replacing the laptop’s arrow keys.

Cycle 3 – Unknown Creators

Posted: May 5, 2024 Filed under: Uncategorized

For this cycle I had a few ideas. My first one was that I wanted to add some amount of interactivity, as the project felt stagnant. I wasn’t sure how I wanted to do this though. At first I thought I could go back and use some of that tech I made from Pressure Project 3 that I didn’t end up using. Maybe the panels could have knobs on them or something? After talking about where I wanted the project to go with my mentor, it seemed like maybe interactivity wasn’t the way to go. Adding layers of interactivity could potentially confuse people as to what the project is about, and instead Scott emphasized expanding scope to talk more broadly about the subject outside of game development (he gave an example about how one side could be a politician talking about the problems of a certain indigenous group and the other could be footage of that group and what they actually had issues with). There are certainly ways of adding interactivity, but I did want to expand towards media in general, since people who don’t know about the games industry can’t meaningfully interact with the piece anyway.

Oftentimes, when I would talk about the project, I would reference many different kinds of media, like film or theater, and I wanted to incorporate examples of what I was talking about from these areas too. I ended up pulling examples from two people who I think are better known than Todd Howard: Guillermo Del Toro, Academy Award-winning director, and Michael Jackson, who uhh… is Michael Jackson. I went and collected footage from the making of Pinocchio, a relatively recent film of Del Toro’s that I knew had a ton of talented stop-motion people working on it. This is the video that I used for that: https://www.youtube.com/watch?v=LWZ_K7oKu-o

I pulled an example of a well-regarded stop-motion animator and puppeteer, Georgina Hayns. She is better known among stop-motion enthusiasts and creators, but most laypeople probably don’t know who she is, including me. I also pulled an interview of Del Toro from CBS: https://www.youtube.com/watch?v=_7xcED5GoaA

For Michael Jackson, I pulled some old interview footage from 1978: https://www.youtube.com/watch?v=fTTl4Vaow5Y

For the person behind the scenes, I decided to go with Brad Buxer, who I know worked with Michael Jackson and other famous creators like Stevie Wonder from my own personal research and intrigue (I was first told about this via Harry Brewis’s video about the origins of the Roblox oof: https://www.youtube.com/watch?v=0twDETh6QaI). Typically in the music industry there are creators who write lyrics or melodies but don’t get credited to the same degree, and Brad talks about how it’s easy for big creators to pawn off creation to people who work under them. This is a whole different issue, but I think this example is really good since Michael Jackson is a really well-known celebrity. Here is Brad Buxer’s Masterclass course that I used for footage: https://www.youtube.com/watch?v=qlYQooIyCAI. What’s funny is that it was actually speculated that Michael Jackson did the music for Sonic 3, but Brad Buxer is actually credited for Sonic 3 music, and because he is known for working with Michael Jackson, credit is usually given to Jackson. This isn’t completely relevant to the project; I just thought it was interesting that there is a tie back to the games industry. Come to think of it, Guillermo Del Toro is also really good friends with another well-known game developer, Hideo Kojima. Small world, I guess.

Anyways, same as before, I had to take the footage and throw it into Premiere Pro to splice it and edit in names and such.

Once again I displayed the project and people felt like I was getting even closer to properly conveying intent. Orlando hadn’t seen any iterations of this project and his interpretation was very close to what I had intended. There was a lot of great conversation sparked too, which was great to see! Overall I’m very happy with this iteration of the project. I’m thinking about applying for a motion lab residency to continue work on this, but for now I’m done.

Cycle 2 – Unknown Creators

Posted: May 5, 2024 Filed under: Uncategorized

For this cycle I decided that I wanted to take feedback from the previous cycle and try and incorporate it into this next one.

For starters, Jiara had mentioned that the wrinkle and material of the fabric felt meaningful. I hadn’t thought about this but I think she’s right, and I wanted to use these ideas. I wasn’t going to change the material, but I did end up making the backsides of the panels more wrinkly and the front more clean. The clean side would be the side with Todd and the messy backside would be the unknown people. I felt this worked really nicely because not only was it conveying metaphorically that these are people who are behind the veil, that they typically deal with messiness that we don’t see, but also it made the images and text harder to see, which I think was in line with my message about how hard it is to find these people.

I also went into Premiere Pro and edited the footage. I needed the footage of the unknown developers to be arranged so that there were two different people that I could put on the panels. I also added names and job titles under all the developers, which I would use within the project. I wanted to express who these people are more directly while still allowing for ambiguity. I remapped the projections, putting the people on their respective panels. I also took Todd’s name and stretched it along the ground to better emphasize the perspective puzzle.

The final thing I did was inspired by talking with my mentor, Scott Swearingen. As I described the project to him, he thought it would be interesting if the unknown people were hard to hear in some way; maybe the footage was jumbled or disjointed. I liked this idea, but I didn’t want to manually splice the footage. I figured I could have the video jump to random positions while playing, but I didn’t want it to be completely random, and I was trying to figure out how long I wanted footage to play for. I remembered something that Afure and others had said about how it felt like the two pieces of footage were talking to each other, like one was the interviewer and the other was the interviewee. As I was thinking about this, I thought it could be interesting to take the audio data from Todd’s clip and use it to jumble the other footage.

At first I thought I could just get the audio frequency bands from the Movie Player actor, but for some reason I couldn’t do that. I’m not entirely sure why, and I tried looking into it (main forum post: https://community.troikatronix.com/topic/6262/answered-using-frequency-monitoring-in-isadora-3-movie-player); it seemed like it wasn’t possible within the Movie Player in this version of Isadora. After talking with Alex, though, it turned out I really just needed to route the audio through Blackhole on the motion lab Mac and then use live capture to get the audio data. We created a custom audio configuration that would play to both Blackhole and the motion lab speakers, and after getting the data I simply compared the bands with a threshold. If the band values went above that threshold, the unknown creator footage would jump to a random position.
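The threshold comparison described above can be sketched in a few lines of Python. This is only an illustration, not the Isadora patch itself: the `next_position` name, the 0 to 100 percent position range, the "any band over the threshold" rule, and the sample band values are all assumptions.

```python
import random
from typing import Optional, Sequence


def next_position(
    bands: Sequence[float], threshold: float, rng: random.Random
) -> Optional[float]:
    """Return a random playback position (0-100 percent) when any frequency
    band exceeds the threshold; return None to leave playback untouched."""
    if any(level > threshold for level in bands):
        return rng.uniform(0.0, 100.0)
    return None


rng = random.Random()
# Hypothetical band levels captured from Todd's audio, one list per frame.
frames = [[0.05, 0.10, 0.02], [0.40, 0.75, 0.30], [0.01, 0.03, 0.02]]
for bands in frames:
    jump = next_position(bands, threshold=0.5, rng=rng)
    if jump is not None:
        print(f"jump unknown-creator footage to {jump:.1f}%")
    else:
        print("keep playing")
```

Because jumps only fire while the first clip is loud, the second clip plays uninterrupted during the pauses, which matches the interviewer and interviewee feel described earlier.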

In terms of setup, everything stayed mostly the same; the only difference this time was the inclusion of a bench on the side of the unknown creators. I wanted people to linger in this area, and I hoped that providing seating would accommodate this (it didn’t, but it was worth a shot).

Here is the final video:

After everyone saw it they noticed the things that I had changed and it seemed that I was moving in the right direction. The changes this time were small, but even those small changes seemed to make a difference. The piece felt a little more cohesive, which is good, and the most validating thing for me was Nathan’s reaction. He hadn’t seen the first version of this project and within the span of around 2 or 3 minutes I heard him say “Oh I get it”. He caught that you could only hear the audio from the unknown people when Todd stopped talking and immediately got what the project was about, and I was really happy to hear this. He knew who these people are so it seems that people with prior knowledge could potentially get ahold of the intended message. Still, people without prior knowledge are left alienated, and I wanted to address this going forward.